Mohammad S. E. Sendi

Algorithm-Agnostic Explainability for Unsupervised Clustering

May 17, 2021

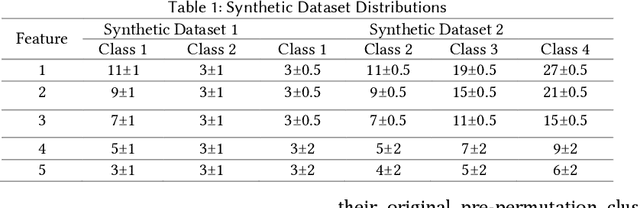

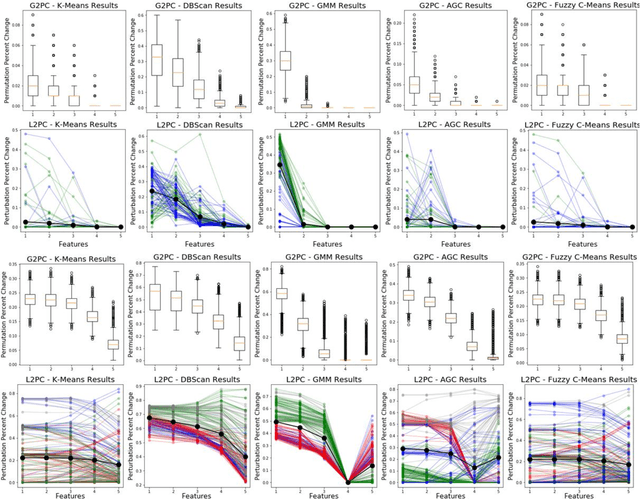

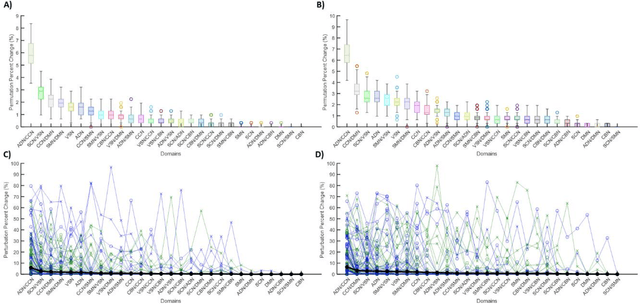

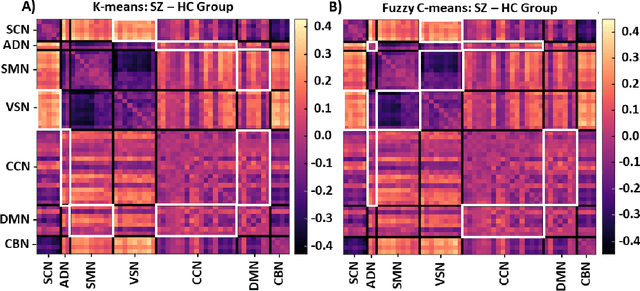

Abstract: Supervised machine learning explainability has expanded greatly in recent years, but explainability for unsupervised clustering has lagged behind. Here, to the best of our knowledge, we demonstrate for the first time how model-agnostic methods for supervised machine learning explainability can be adapted to provide algorithm-agnostic explainability for unsupervised clustering. We present two novel algorithm-agnostic explainability methods: global permutation percent change (G2PC) feature importance and local perturbation percent change (L2PC) feature importance. G2PC provides insight on a global level by identifying the relative importance of each feature to a clustering algorithm, and L2PC provides insight on a local level by identifying the relative importance of each feature to the clustering of individual samples. We demonstrate the utility of both methods for explaining five popular clustering algorithms on low-dimensional, ground-truth synthetic datasets and on high-dimensional functional network connectivity (FNC) data extracted from a resting-state functional magnetic resonance imaging (rs-fMRI) dataset of 151 subjects with schizophrenia (SZ) and 160 healthy controls (HC). Our proposed explainability methods robustly identify the relative importance of features across multiple clustering methods and could facilitate new insights in many applications. We hope that this study will greatly accelerate the development of the field of clustering explainability.
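The abstract does not spell out the G2PC procedure, so the following is only a minimal sketch of one plausible reading of "global permutation percent change": permute one feature at a time, reassign samples to clusters, and report the percentage of samples whose cluster changes. The function name `g2pc_sketch`, the `assign` callback, the fixed centroids, and the toy data are all assumptions made for illustration and are not taken from the paper.

```python
import numpy as np

def g2pc_sketch(X, assign, n_repeats=30, seed=0):
    """Hypothetical sketch: percent of samples that switch clusters
    when each feature is permuted across samples (averaged over repeats)."""
    rng = np.random.default_rng(seed)
    base = assign(X)  # cluster labels on the unperturbed data
    n_features = X.shape[1]
    pct_change = np.zeros(n_features)
    for j in range(n_features):
        total = 0.0
        for _ in range(n_repeats):
            X_perm = X.copy()
            # Shuffle feature j across samples, leaving other features intact
            X_perm[:, j] = rng.permutation(X[:, j])
            total += np.mean(assign(X_perm) != base)
        pct_change[j] = 100.0 * total / n_repeats
    return pct_change

# Toy data (assumed): feature 0 separates two groups, feature 1 is noise.
rng = np.random.default_rng(1)
informative = np.concatenate([rng.normal(-5, 0.5, 100), rng.normal(5, 0.5, 100)])
noise = rng.normal(0, 0.5, 200)
X = np.column_stack([informative, noise])

# Stand-in for a fitted clustering model: nearest of two fixed centroids.
centroids = np.array([[-5.0, 0.0], [5.0, 0.0]])

def assign(data):
    dists = np.linalg.norm(data[:, None, :] - centroids[None, :, :], axis=2)
    return dists.argmin(axis=1)

importance = g2pc_sketch(X, assign)
print(importance)  # feature 0 should score far higher than feature 1
```

Because the sketch only needs a function that maps samples to cluster labels, any clustering algorithm that can assign labels to perturbed data could be substituted for `assign`, which is the sense in which such an approach is algorithm-agnostic. A local (L2PC-style) variant would presumably perturb the features of a single sample rather than permuting across all samples, but that detail is likewise not given in the abstract.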
