Mohammad Mamun

On Statistical Learning of Branch and Bound for Vehicle Routing Optimization

Oct 17, 2023

Abstract: Recently, machine learning of the branch and bound algorithm has shown promise in approximating competent solutions to NP-hard problems. In this paper, we apply and comprehensively compare three neural networks--graph convolutional neural network (GCNN), GraphSAGE, and graph attention network (GAT)--on the capacitated vehicle routing problem (CVRP). We train these neural networks to emulate the decision-making process of the computationally expensive Strong Branching strategy. The neural networks are trained on six instances with distinct topologies from the CVRPLIB and evaluated on eight additional instances. Moreover, we reduced the problem of finding the minimum number of vehicles required to solve a CVRP instance to a bin-packing problem, which was addressed in a similar manner. Through rigorous experimentation, we found that this approach can match or improve upon the performance of the branch and bound algorithm with the Strong Branching strategy while requiring significantly less computational time. The source code for this work is available at https://isotlaboratory.github.io/ml4vrp

DeepTaskAPT: Insider APT detection using Task-tree based Deep Learning

Aug 31, 2021

Abstract: Advanced Persistent Threats (APTs) are a difficult challenge for cyber defence. These threats render many traditional defences ineffective because the vulnerabilities they exploit are insiders who have access to and are within the network. This paper proposes DeepTaskAPT, a heterogeneous task-tree based deep learning method that constructs a baseline model from sequences of tasks using a Long Short-Term Memory (LSTM) neural network and can be applied across different users to identify anomalous behaviour. Rather than applying the model to sequential log entries directly, as most current approaches do, DeepTaskAPT applies a process tree based task generation method to generate sequential log entries for the deep learning model. To assess the performance of DeepTaskAPT, we use a recently released synthetic dataset, the DARPA Operationally Transparent Computing (OpTC) dataset, and a real-world dataset, the Los Alamos National Laboratory (LANL) dataset. Both are composed of host-based data collected from sensors. Our results show that DeepTaskAPT outperforms similar approaches, e.g. DeepLog, and that the DeepTaskAPT baseline model can detect malicious traces in various attack scenarios with high accuracy and low false-positive rates. To the best of our knowledge, this is the first attempt to use the recently introduced OpTC dataset for cyber threat detection.

Machine Learning in Precision Medicine to Preserve Privacy via Encryption

Feb 05, 2021

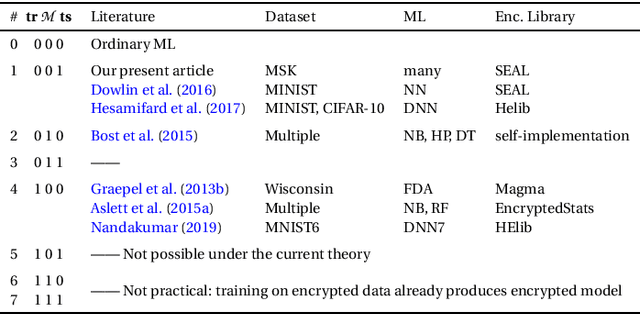

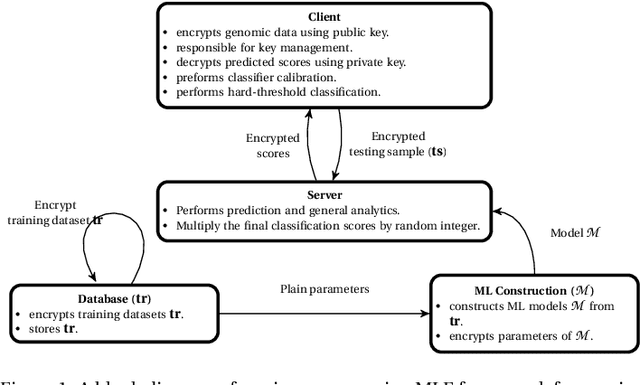

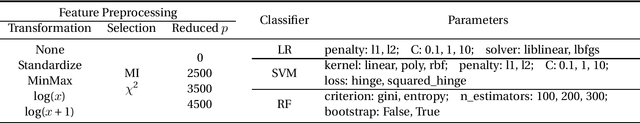

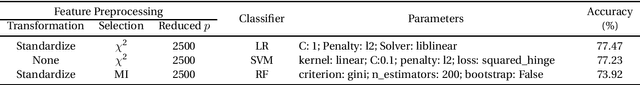

Abstract: Precision medicine is an emerging approach for disease treatment and prevention that delivers personalized care to individual patients by considering their genetic makeups, medical histories, environments, and lifestyles. Despite the rapid advancement of precision medicine and its considerable promise, several underlying technological challenges remain unsolved. One such challenge of great importance is the security and privacy of precision health-related data, such as genomic data and electronic health records; concerns over these stifle collaboration and keep machine-learning (ML) algorithms from reaching their full potential. To preserve data privacy while providing ML solutions, this article makes three contributions. First, we propose a generic machine learning with encryption (MLE) framework, which we used to build an ML model that predicts cancer from one of the most recent comprehensive genomics datasets in the field. Second, our framework's prediction accuracy is slightly higher than that of the most recent studies conducted on the same dataset, yet it maintains the privacy of the patients' genomic data. Third, to facilitate the validation, reproduction, and extension of this work, we provide an open-source repository that contains the design and implementation of the framework, all the ML experiments and code, and the final predictive model deployed to a free cloud service.

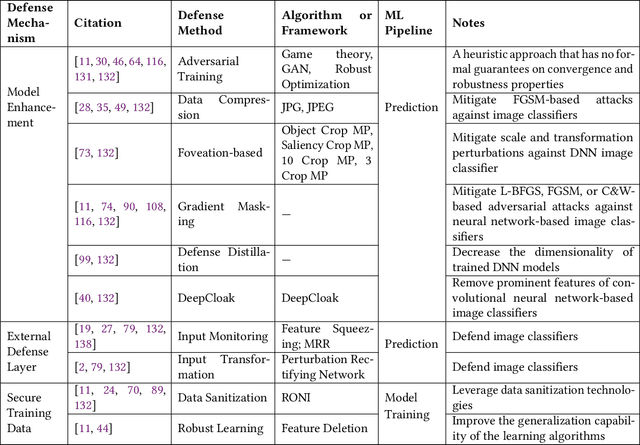

Towards a Robust and Trustworthy Machine Learning System Development

Jan 08, 2021

Abstract: Machine Learning (ML) technologies have been widely adopted in many mission-critical fields, such as cyber security, autonomous vehicle control, and healthcare, to support intelligent decision-making. While ML has demonstrated impressive performance over conventional methods in these applications, concerns have arisen about system resilience against ML-specific security attacks and privacy breaches, as well as about the trust that users have in these systems. In this article, we first present our recent systematic and comprehensive survey of state-of-the-art ML robustness and trustworthiness technologies from a security engineering perspective. The survey covers all aspects of secure ML system development, including threat modeling, common offensive and defensive technologies, privacy-preserving machine learning, user trust in the context of machine learning, and empirical evaluation of ML model robustness. Second, we go beyond a survey by describing a metamodel we created that represents the body of knowledge in a standard and visualized way for ML practitioners. We further illustrate how to leverage the metamodel to guide a systematic threat analysis and security design process in the context of generic ML system development, which extends and scales up the classic process. Third, we propose future research directions motivated by our findings to advance the development of robust and trustworthy ML systems. Our work differs from existing surveys in this area in that, to the best of our knowledge, it is the first engineering effort of its kind to (i) explore the fundamental principles and best practices that support robust and trustworthy ML system development; and (ii) study the interplay of robustness and user trust in the context of ML systems.
