Mohammad Ibrahim Sarker

3DFCNN: Real-Time Action Recognition using 3D Deep Neural Networks with Raw Depth Information

Jun 13, 2020

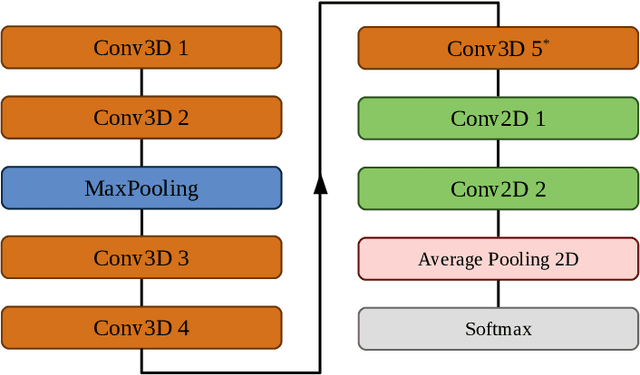

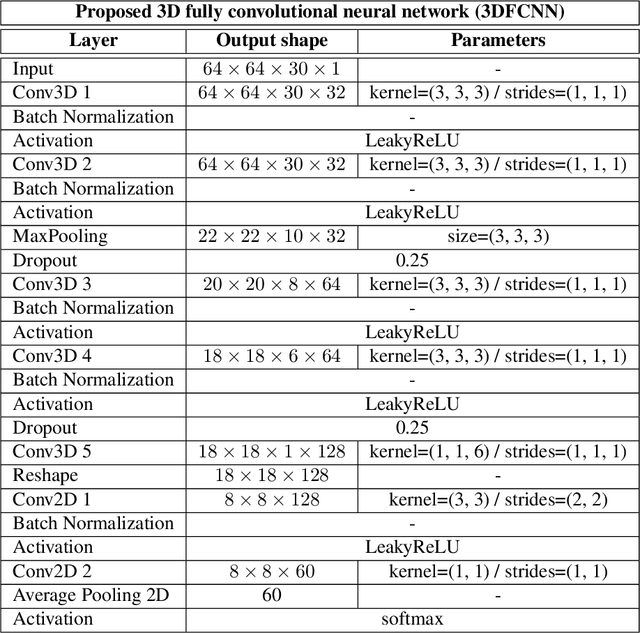

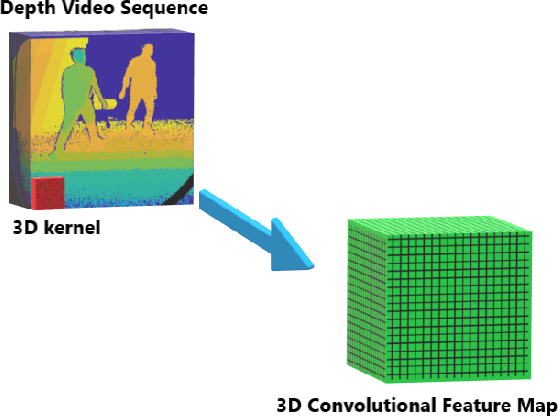

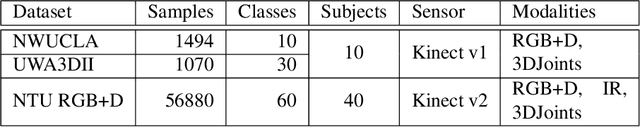

Abstract: Human action recognition is a fundamental task in computer vision that has gained great importance in recent years due to its many applications in different areas. In this context, this paper describes an approach for real-time human action recognition from raw depth image sequences provided by an RGB-D camera. The proposal is based on a 3D fully convolutional neural network, named 3DFCNN, which automatically encodes spatio-temporal patterns from depth sequences without costly pre-processing. Furthermore, the described 3D-CNN performs feature extraction and action classification from the spatially and temporally encoded information of the depth sequences. The use of depth data ensures that action recognition protects people's privacy, since their identities cannot be recognized from these data. 3DFCNN has been evaluated, and its results compared to those of other state-of-the-art methods, on three widely used datasets with different characteristics (resolution, sensor type, number of views, camera location, etc.). The results validate the proposal, showing that it outperforms several state-of-the-art approaches based on classical computer vision techniques. Furthermore, it achieves action recognition accuracy comparable to deep learning-based state-of-the-art methods at a lower computational cost, which allows its use in real-time applications.
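
A 3D convolution lets a network like 3DFCNN encode space and time jointly. Each kernel spans several consecutive depth frames as well as a spatial window. The sketch below is a naive pure-Python "valid" 3D cross-correlation over a clip stored as nested lists (frames x rows x columns). The function name `conv3d_valid` and the two-frame temporal-difference kernel in the example are illustrative assumptions, not the 3DFCNN layers themselves.

```python
def conv3d_valid(clip, kernel):
    """Naive 'valid' 3D cross-correlation.

    clip:   T x H x W nested lists (depth frames over time)
    kernel: kt x kh x kw nested lists
    Returns a (T-kt+1) x (H-kh+1) x (W-kw+1) nested list.
    """
    T, H, W = len(clip), len(clip[0]), len(clip[0][0])
    kt, kh, kw = len(kernel), len(kernel[0]), len(kernel[0][0])
    out = []
    for t in range(T - kt + 1):          # slide over time
        plane = []
        for i in range(H - kh + 1):      # slide over rows
            row = []
            for j in range(W - kw + 1):  # slide over columns
                acc = 0.0
                # Sum over the spatio-temporal window covered by the kernel.
                for dt in range(kt):
                    for di in range(kh):
                        for dj in range(kw):
                            acc += clip[t + dt][i + di][j + dj] * kernel[dt][di][dj]
                row.append(acc)
            plane.append(row)
        out.append(plane)
    return out


if __name__ == "__main__":
    # Three 2x2 depth frames; one pixel's depth value changes from 0 to 5 at frame 1.
    clip = [
        [[0.0, 0.0], [0.0, 0.0]],
        [[0.0, 5.0], [0.0, 0.0]],
        [[0.0, 5.0], [0.0, 0.0]],
    ]
    # Hypothetical temporal-difference kernel: frame[t+1] - frame[t].
    kernel = [[[-1.0]], [[1.0]]]
    print(conv3d_valid(clip, kernel))
```

With this kernel, the output is non-zero only where depth changes between consecutive frames (here at t = 0, position (0, 1)), which makes it a crude motion detector. A real 3D CNN learns many such kernels, stacks them with non-linearities, and relies on an optimized library implementation rather than Python loops.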

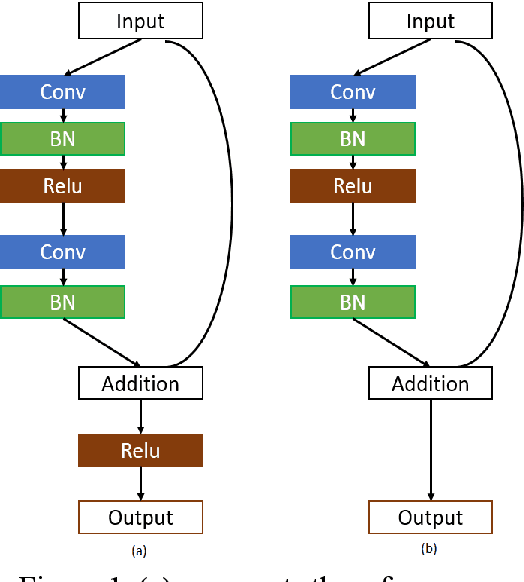

Inception Architecture and Residual Connections in Classification of Breast Cancer Histology Images

Dec 10, 2019

Abstract: This paper presents the results of applying the Inception v4 deep convolutional neural network to Part A of the ICIAR-2018 Breast Cancer Classification Grand Challenge. The challenge task is to classify breast cancer biopsy results presented in the form of hematoxylin and eosin (H&E) stained images. Breast cancer classification is of primary interest to medical practitioners, and binary classification of breast cancer images has therefore been investigated by many researchers. Multi-class categorization of breast histology images, however, has remained challenging because of the subtle differences among the categories. In this work, extensive data augmentation is used to reduce overfitting, and the effectiveness of a committee of several Inception v4 networks is studied. We report 89% accuracy on the 4-class classification task and 93.7% on the carcinoma/non-carcinoma two-class classification task, using our test set of 80 images.
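
The abstract does not state how the committee combines its members. One common rule, assumed here, averages the per-class probabilities of the networks and takes the arg-max. The sketch also maps the four BACH Part A classes (Normal, Benign, In situ carcinoma, Invasive carcinoma) onto the carcinoma/non-carcinoma split, under the assumption that in situ and invasive count as carcinoma. The names `committee_predict`, `to_binary`, `CLASSES` and `CARCINOMA` are hypothetical.

```python
CLASSES = ["Normal", "Benign", "InSitu", "Invasive"]  # 4-class labels (Part A)
CARCINOMA = {"InSitu", "Invasive"}                    # assumed binary grouping


def committee_predict(model_probs):
    """Average per-class probabilities over models; return (class index, averaged probs)."""
    n_models = len(model_probs)
    n_classes = len(model_probs[0])
    avg = [sum(p[c] for p in model_probs) / n_models for c in range(n_classes)]
    best = max(range(n_classes), key=lambda c: avg[c])
    return best, avg


def to_binary(label):
    """Collapse a 4-class label into carcinoma / non-carcinoma."""
    return "carcinoma" if label in CARCINOMA else "non-carcinoma"


if __name__ == "__main__":
    # Hypothetical softmax outputs of three committee members for one image.
    probs = [
        [0.1, 0.2, 0.3, 0.4],
        [0.1, 0.1, 0.6, 0.2],
        [0.2, 0.2, 0.4, 0.2],
    ]
    idx, avg = committee_predict(probs)
    print(CLASSES[idx], to_binary(CLASSES[idx]))
```

In this example the first network alone would pick "Invasive". The averaged committee picks "InSitu", which still maps to "carcinoma". This shows how a committee can override one member while the binary decision stays stable.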

Corn leaf detection using Region based convolutional neural network

Jun 05, 2019

Abstract: Machine learning has become an increasingly active area of research as more efficient methods are needed to handle more complex image detection challenges. Solving agricultural problems is increasingly important because food is fundamental to life. However, the detection accuracy of current corn field systems still falls far short of practical requirements because of the many different kinds of weeds. This paper presents a model for detecting corn leaves in digital images collected from a farm field. Based on experiments with several state-of-the-art CNN models, a region-based method is proposed as a faster and more accurate approach to corn leaf detection. Motivated by the attributes of ResNet, we combine it with a region-based network (such as Faster R-CNN), which can automatically detect corn leaves under heavy weed occlusion. The method is evaluated on a dataset collected from a farm, which we annotated ourselves. The proposed method achieves significantly better corn leaf detection performance.
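
Region-based detectors such as Faster R-CNN score many overlapping candidate boxes. They then remove duplicates with non-maximum suppression (NMS), using intersection over union (IoU) as the overlap measure. The following is a minimal greedy NMS sketch in pure Python. It assumes boxes are `(x1, y1, x2, y2)` tuples and uses a 0.5 IoU threshold. It illustrates the standard post-processing step, not the authors' exact pipeline.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: keep the highest-scoring box, drop boxes overlapping a kept one too much."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep


if __name__ == "__main__":
    # Hypothetical leaf detections: two near-duplicates and one separate box.
    boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
    scores = [0.9, 0.8, 0.7]
    print(nms(boxes, scores))
```

The first two boxes overlap with IoU 81/119 (about 0.68), so only the higher-scoring one survives and `nms` returns the indices 0 and 2.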

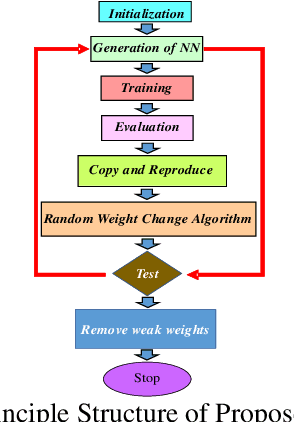

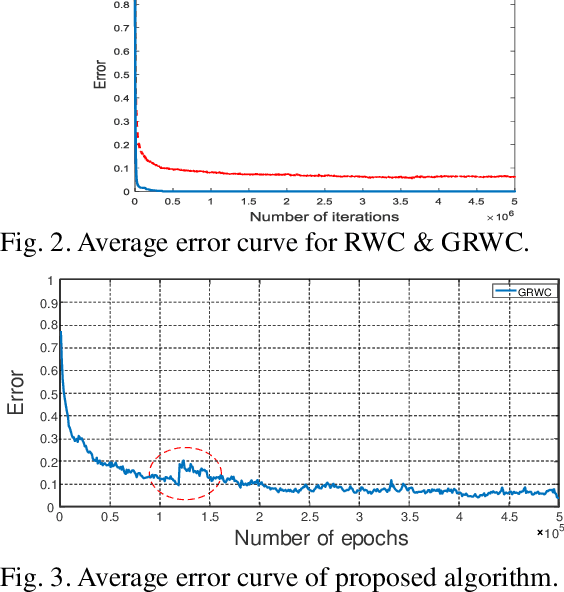

Optimizing method for Neural Network based on Genetic Random Weight Change Learning Algorithm

Jun 05, 2019

Abstract: The random weight change (RWC) algorithm is highly suitable and robust for the hardware implementation of neural networks. RWC and the genetic algorithm (GA) are well-known methods for optimizing and training neural networks (NNs). Each of these two algorithms has its own strengths and weaknesses, as well as separate objectives. Recently, however, researchers have combined the two for better learning and optimization of NNs. In this paper, we propose a method that combines RWC and GA, named Genetic Random Weight Change (GRWC). We also demonstrate a way to reduce the complexity of the neural network by removing the weak weights found by GRWC. In contrast to RWC and GA alone, GRWC includes an optimization procedure that is effective at exploring large, complex search spaces, drawing on the synergy between GA and RWC. The learning behavior of the proposed algorithm was evaluated on the MNIST dataset, where it demonstrated its performance.
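
The abstract does not spell out the RWC update. In its commonly described form, each weight moves by a random ±δ. The same changes are reused while the error keeps decreasing, and a fresh random set is drawn when it does not. The sketch below applies that rule to a tiny linear model and tracks the best weights seen. It adds a simple magnitude threshold as a stand-in for "removing weak weights". The GA part of GRWC is deliberately omitted, and the names `rwc_train` and `prune_weak`, the step size and the threshold are all hypothetical.

```python
import random


def mse(w, xs, ys):
    """Mean squared error of the linear model y_hat = w . x."""
    total = 0.0
    for x, y in zip(xs, ys):
        y_hat = sum(wi * xi for wi, xi in zip(w, x))
        total += (y_hat - y) ** 2
    return total / len(xs)


def rwc_train(xs, ys, n_weights, delta=0.01, iters=2000, seed=0):
    """Basic random weight change: keep a random +/-delta step while the error falls."""
    rng = random.Random(seed)
    w = [0.0] * n_weights
    dw = [rng.choice((-delta, delta)) for _ in range(n_weights)]
    err = mse(w, xs, ys)
    best_w, best_err = list(w), err
    for _ in range(iters):
        w = [wi + dwi for wi, dwi in zip(w, dw)]
        new_err = mse(w, xs, ys)
        if new_err >= err:
            # No improvement: draw a fresh random +/-delta change for every weight.
            dw = [rng.choice((-delta, delta)) for _ in range(n_weights)]
        # On improvement dw is left unchanged, so the same step is repeated.
        err = new_err
        if err < best_err:
            best_w, best_err = list(w), err
    return best_w, best_err


def prune_weak(w, threshold):
    """Hypothetical pruning rule: zero every weight whose magnitude is below threshold."""
    return [0.0 if abs(wi) < threshold else wi for wi in w]


if __name__ == "__main__":
    # Toy data generated by y = 1.5*x0 - 2.0*x1 (x2 is irrelevant).
    xs = [(i / 10, (i % 7) / 7, ((3 * i) % 5) / 5) for i in range(20)]
    ys = [1.5 * x[0] - 2.0 * x[1] for x in xs]
    w, err = rwc_train(xs, ys, n_weights=3)
    print("weights:", w, "mse:", err)
    print("pruned:", prune_weak(w, threshold=0.05))
```

RWC needs only the error value and random sign flips, never a gradient. This is why it suits hardware implementations. A GA layer, as in GRWC, would typically run several such candidates and recombine the best ones.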

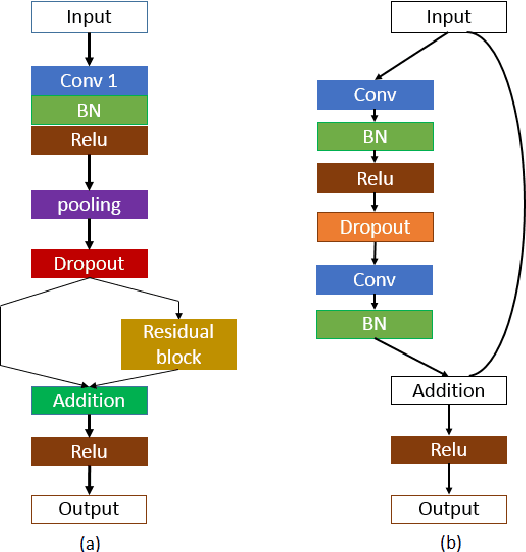

Farm land weed detection with region-based deep convolutional neural networks

Jun 05, 2019

Abstract: Machine learning has become a major field of research for handling increasingly complex image detection problems. Based on experimental results with existing state-of-the-art CNN models, this paper proposes a region-based fully convolutional network for fast and accurate object detection. Among the candidate architectures, ResNet is a recent CNN architecture that obtained the best results in the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) 2015. Deep residual networks (ResNets) can be trained faster and attain higher accuracy than equivalent conventional neural networks. Motivated by these attributes, this paper evaluates the performance of a fine-tuned ResNet for object classification on our weed dataset. Our farmland weed detection dataset is too small to train such deep CNN models. To overcome this shortcoming, we apply dropout to the deep residual network to reduce overfitting and use data augmentation, which yields significantly better results on our weed dataset. We achieved better object detection performance with the Region-based Fully Convolutional Network (R-FCN) combined with our ResNet-101.
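
The residual connection that makes ResNets easier to train adds a block's input back to its output, y = relu(F(x) + x). The pure-Python sketch below shows one such block on plain vectors. It applies inverted dropout to the hidden activations during training as a stand-in for the dropout the abstract mentions. The layer sizes, the placement of dropout and all names are illustrative assumptions, not the ResNet-101/R-FCN configuration used in the paper.

```python
import random


def matvec(m, v):
    """Multiply matrix m (list of rows) by vector v."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def relu(v):
    return [max(0.0, x) for x in v]


def dropout(v, p, rng):
    """Inverted dropout: zero each unit with probability p, rescale survivors by 1/(1-p)."""
    if p <= 0.0:
        return list(v)
    keep = 1.0 - p
    return [x / keep if rng.random() < keep else 0.0 for x in v]


def residual_block(x, w1, w2, p=0.0, training=False, rng=None):
    """y = relu(F(x) + x), where F(x) = w2 . dropout(relu(w1 . x))."""
    h = relu(matvec(w1, x))
    if training:
        h = dropout(h, p, rng or random.Random(0))
    f = matvec(w2, h)
    # Identity shortcut: add the input back before the final activation.
    return relu([fi + xi for fi, xi in zip(f, x)])


if __name__ == "__main__":
    zeros = [[0.0, 0.0], [0.0, 0.0]]
    eye = [[1.0, 0.0], [0.0, 1.0]]
    print(residual_block([1.0, 2.0], zeros, zeros))  # F(x) = 0, so the block passes x through
    print(residual_block([1.0, -2.0], eye, eye, p=0.5, training=True, rng=random.Random(1)))
```

With both weight matrices set to zero, F(x) = 0 and the block reduces to relu(x), which is the identity on non-negative inputs. This is why very deep residual stacks can start close to an identity mapping and still train well.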
