Mohamed Kari

Explainable OOHRI: Communicating Robot Capabilities and Limitations as Augmented Reality Affordances

Jan 21, 2026

Abstract: Human interaction is essential for issuing personalized instructions and assisting robots when failure is likely. However, robots remain largely black boxes, offering users little insight into their evolving capabilities and limitations. To address this gap, we present explainable object-oriented HRI (X-OOHRI), an augmented reality (AR) interface that conveys robot action possibilities and constraints through visual signifiers, radial menus, color coding, and explanation tags. Our system encodes object properties and robot limits into object-oriented structures using a vision-language model, allowing explanation generation on the fly and direct manipulation of virtual twins spatially aligned within a simulated environment. We integrate the end-to-end pipeline with a physical robot and showcase diverse use cases ranging from low-level pick-and-place to high-level instructions. Finally, we evaluate X-OOHRI through a user study and find that participants effectively issue object-oriented commands, develop accurate mental models of robot limitations, and engage in mixed-initiative resolution.

EEG2Vec: Learning Affective EEG Representations via Variational Autoencoders

Jul 16, 2022

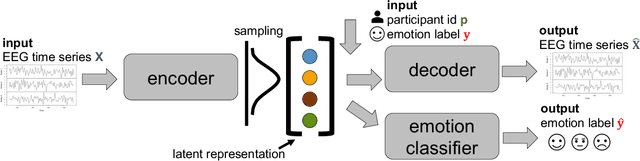

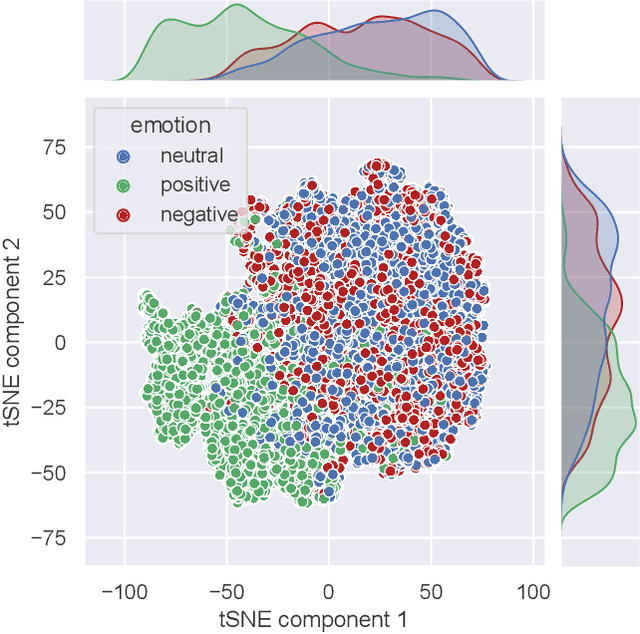

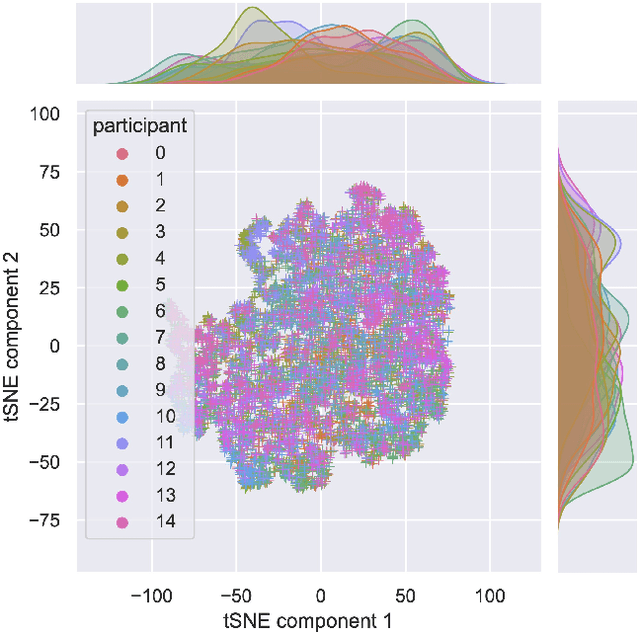

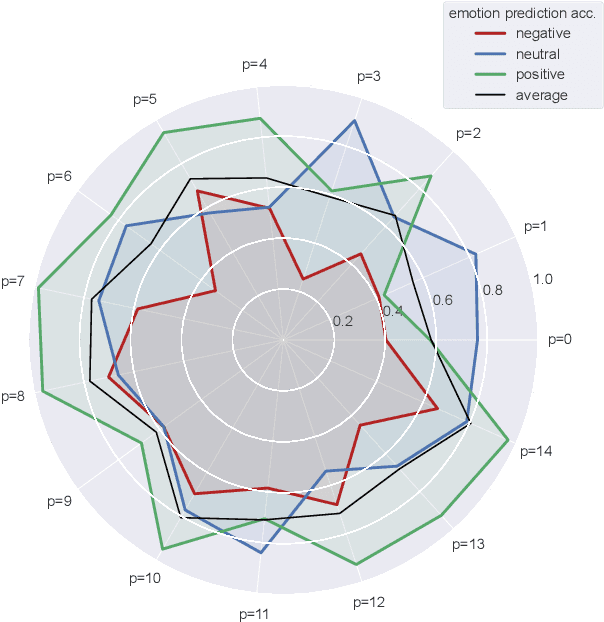

Abstract: There is a growing need for sparse representational formats of human affective states that can be utilized in scenarios with limited computational memory resources. We explore whether representing neural data, in response to emotional stimuli, in a latent vector space can serve both to predict emotional states and to generate synthetic EEG data that are participant- and/or emotion-specific. We propose a conditional variational autoencoder based framework, EEG2Vec, to learn generative-discriminative representations from EEG data. Experimental results on affective EEG recording datasets demonstrate that our model is suitable for unsupervised EEG modeling, that classification of three distinct emotion categories (positive, neutral, negative) based on the latent representation achieves a robust performance of 68.49%, and that generated synthetic EEG sequences resemble real EEG data inputs, in particular reconstructing low-frequency signal components. Our work advances areas where affective EEG representations can be useful, e.g., in generating artificial (labeled) training data or alleviating manual feature extraction, and provides efficiency for memory-constrained edge computing applications.

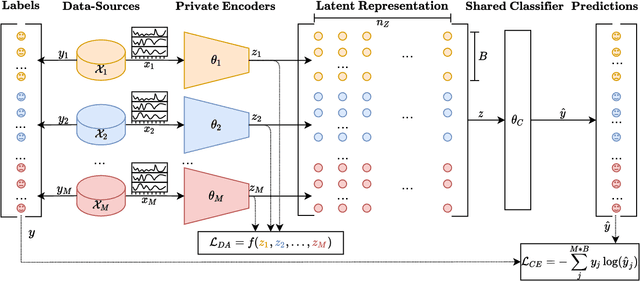

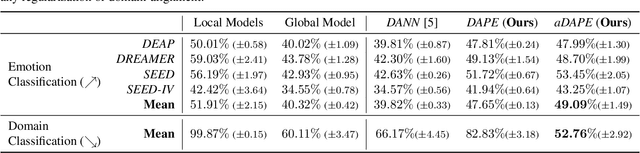

Domain-Invariant Representation Learning from EEG with Private Encoders

Jan 27, 2022

Abstract: Deep learning based electroencephalography (EEG) signal processing methods are known to suffer from poor test-time generalization due to changes in data distribution. This becomes a more challenging problem when privacy-preserving representation learning is of interest, such as in clinical settings. To that end, we propose a multi-source learning architecture where we extract domain-invariant representations from dataset-specific private encoders. Our model utilizes a maximum-mean-discrepancy (MMD) based domain alignment approach to impose domain-invariance for encoded representations, which outperforms state-of-the-art approaches in EEG-based emotion classification. Furthermore, representations learned in our pipeline preserve domain privacy, as dataset-specific private encoding alleviates the need for conventional, centralized EEG-based deep neural network training approaches with shared parameters.
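
For readers unfamiliar with the maximum-mean-discrepancy term mentioned in this abstract, the following is a minimal sketch of a biased squared-MMD estimate between two sets of encoded feature vectors. It is not the authors' implementation: the Gaussian kernel, the `bandwidth` parameter, and the function names `rbf_kernel`, `mean_kernel`, and `mmd_squared` are all assumptions chosen for illustration.

```python
import math


def rbf_kernel(x, y, bandwidth=1.0):
    """Gaussian (RBF) kernel between two equal-length feature vectors.

    The kernel choice and bandwidth are illustrative assumptions.
    """
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-sq_dist / (2.0 * bandwidth ** 2))


def mean_kernel(xs, ys, bandwidth=1.0):
    """Average kernel value over all pairs (x, y) with x in xs, y in ys."""
    total = sum(rbf_kernel(x, y, bandwidth) for x in xs for y in ys)
    return total / (len(xs) * len(ys))


def mmd_squared(source, target, bandwidth=1.0):
    """Biased estimate of squared MMD between two samples.

    MMD^2 = E[k(s, s')] + E[k(t, t')] - 2 E[k(s, t)]
    Small values suggest the two feature distributions are well aligned.
    """
    return (
        mean_kernel(source, source, bandwidth)
        + mean_kernel(target, target, bandwidth)
        - 2.0 * mean_kernel(source, target, bandwidth)
    )


if __name__ == "__main__":
    # Hypothetical encoded features from two datasets (domains).
    domain_a = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
    domain_b = [[5.0, 5.0], [6.0, 5.0], [5.0, 6.0]]
    print("same domain:     ", mmd_squared(domain_a, domain_a))
    print("different domain:", mmd_squared(domain_a, domain_b))
```

In a domain-alignment setting, a quantity of this kind would typically be added to the training loss so that the encoders are penalized when their output distributions drift apart; how the paper weights or combines it is not stated in the abstract.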
