Mohamed Eldesouki

Arabic Diacritic Recovery Using a Feature-Rich biLSTM Model

Feb 04, 2020

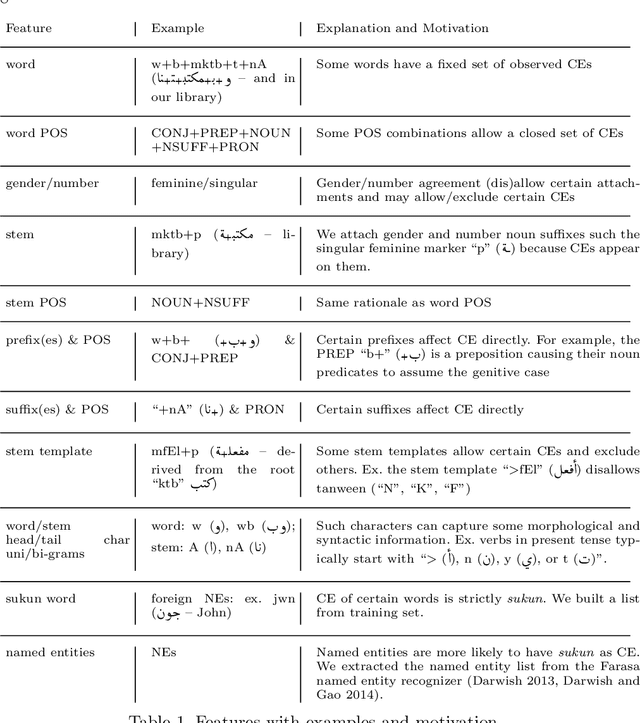

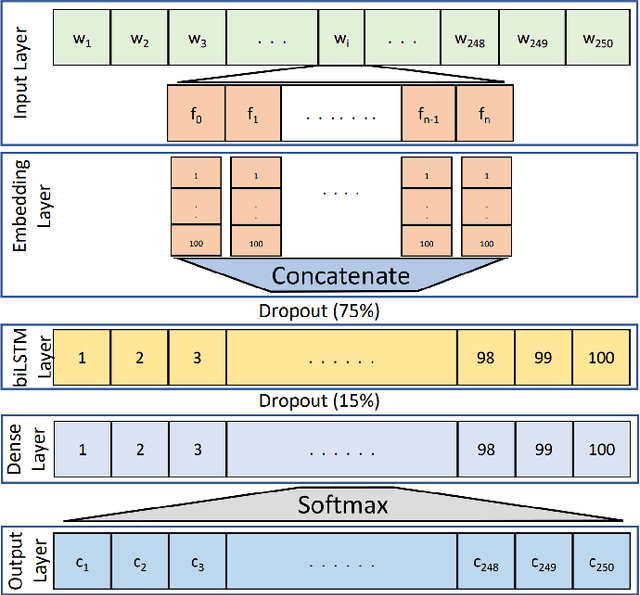

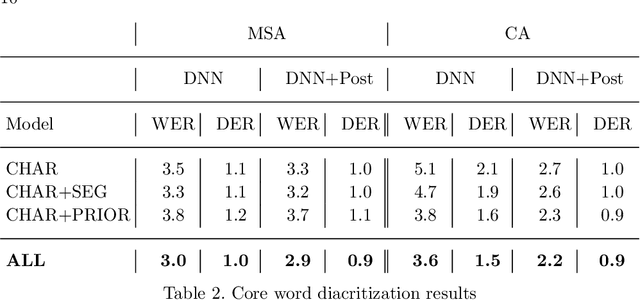

Abstract: Diacritics (short vowels) are typically omitted when writing Arabic text, and readers have to reintroduce them to pronounce words correctly. There are two types of Arabic diacritics: core-word diacritics (CW), which specify the lexical selection, and case endings (CE), which typically appear at the end of the word stem and generally specify the word's syntactic role. Recovering CEs is harder than recovering core-word diacritics because of inter-word dependencies, which are often distant. In this paper, we use a feature-rich recurrent neural network model that draws on a variety of linguistic and surface-level features to recover both core-word diacritics and case endings. Our model surpasses all previous state-of-the-art systems with a CW error rate (CWER) of 2.86% and a CE error rate (CEER) of 3.7% for Modern Standard Arabic (MSA), and a CWER of 2.2% and a CEER of 2.5% for Classical Arabic (CA). When combining diacritized word cores with case endings, the resultant word error rate is 6.0% and 4.3% for MSA and CA respectively. This highlights the effectiveness of feature engineering for such deep neural models.
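To make the three reported metrics concrete, here is a minimal sketch of how CWER, CEER, and the combined word error rate relate to one another. It assumes each word is represented as a hypothetical `(core, case_ending)` pair and that every word counts equally; the function name `error_rates`, the transliterated sample words, and the exact counting conventions are illustrative assumptions, not the paper's evaluation code.

```python
from typing import List, Tuple

# Hypothetical representation: (diacritized word core, case-ending diacritic).
Word = Tuple[str, str]


def error_rates(reference: List[Word], predicted: List[Word]) -> Tuple[float, float, float]:
    """Return (CWER, CEER, WER) as fractions of the number of words."""
    if len(reference) != len(predicted):
        raise ValueError("reference and predicted must have the same length")
    if not reference:
        raise ValueError("at least one word is required")

    pairs = list(zip(reference, predicted))
    n = len(pairs)
    # A core-word error: the diacritized core differs from the reference.
    cw_errors = sum(ref[0] != hyp[0] for ref, hyp in pairs)
    # A case-ending error: the final diacritic differs from the reference.
    ce_errors = sum(ref[1] != hyp[1] for ref, hyp in pairs)
    # A word error: the core, the case ending, or both are wrong.
    word_errors = sum(ref != hyp for ref, hyp in pairs)
    return cw_errors / n, ce_errors / n, word_errors / n


# Made-up transliterated words, for illustration only.
reference = [("kitaAb", "u"), ("Aljadiyd", "u"), ("fiy", ""), ("Albayt", "i")]
predicted = [("kitaAb", "a"), ("Aljadiyd", "u"), ("fiy", ""), ("Albiyt", "i")]
cwer, ceer, wer = error_rates(reference, predicted)
```

Under these assumptions, a word with both a wrong core and a wrong case ending is counted once, so the combined rate can never exceed CWER + CEER. The figures in the abstract fit that bound: 6.0% against 2.86% + 3.7% for MSA, and 4.3% against 2.2% + 2.5% for CA.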

Arabic Multi-Dialect Segmentation: bi-LSTM-CRF vs. SVM

Aug 19, 2017

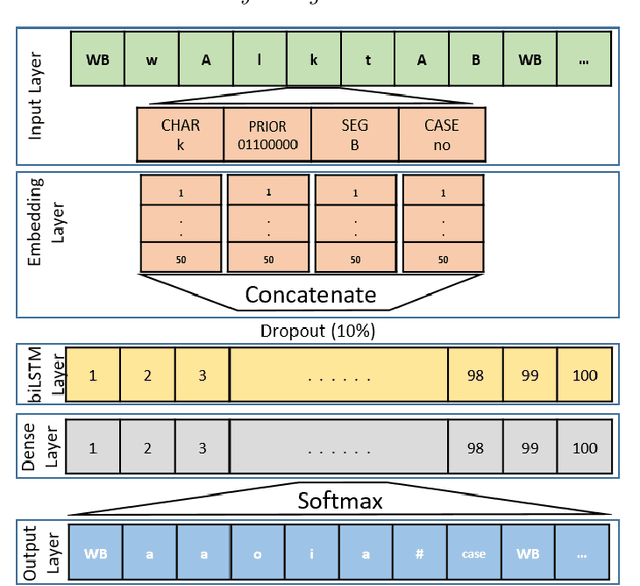

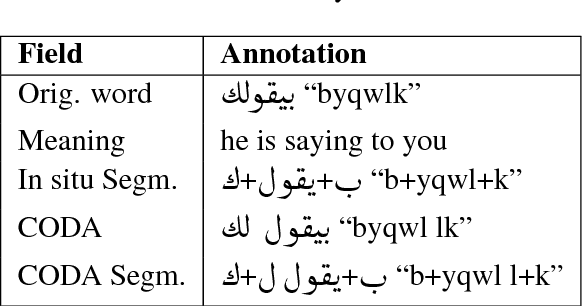

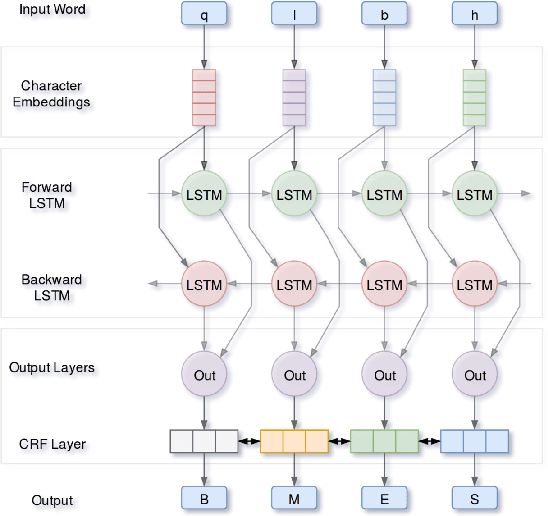

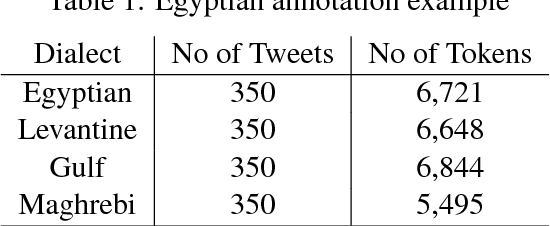

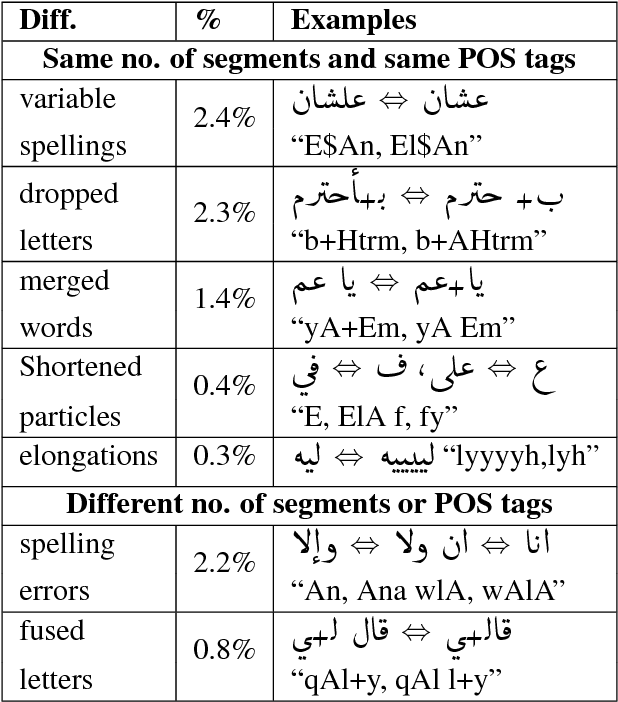

Abstract: Arabic word segmentation is essential for a variety of NLP applications such as machine translation and information retrieval. Segmentation entails breaking words into their constituent stems, affixes and clitics. In this paper, we compare two approaches for segmenting four major Arabic dialects using only several thousand training examples for each dialect. The first poses the task as a ranking problem, where an SVM ranker picks the best segmentation; the second poses it as a sequence labeling problem, where a bi-LSTM RNN coupled with a CRF determines where best to segment words. We are able to achieve solid segmentation results for all dialects using rather limited training data. We also show that employing Modern Standard Arabic data for domain adaptation and assuming context independence improve overall results.
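The sequence labeling formulation can be illustrated with a small sketch that converts a segmented word into per-character labels and back. The two-tag scheme used here (`B` for a character that begins a segment, `I` for any other character), the `+` separator, the function names, and the transliterated sample word are all assumptions made for illustration; the tag set used in the paper may differ. A labeler such as a bi-LSTM-CRF would predict the label sequence, and the second function would turn its output back into a segmentation.

```python
from typing import List, Tuple


def segmentation_to_labels(segmented: str, sep: str = "+") -> Tuple[str, List[str]]:
    """Turn 'w+b+ktAb' into the raw word and one B/I label per character."""
    chars: List[str] = []
    labels: List[str] = []
    for segment in segmented.split(sep):
        for i, ch in enumerate(segment):
            chars.append(ch)
            # The first character of each segment opens a new segment.
            labels.append("B" if i == 0 else "I")
    return "".join(chars), labels


def labels_to_segmentation(word: str, labels: List[str], sep: str = "+") -> str:
    """Rebuild the segmented form from a word and its per-character labels."""
    if len(word) != len(labels):
        raise ValueError("word and labels must have the same length")
    pieces: List[str] = []
    for ch, label in zip(word, labels):
        # Insert a separator before every segment start except the first.
        if label == "B" and pieces:
            pieces.append(sep)
        pieces.append(ch)
    return "".join(pieces)


# Made-up transliterated example: conjunction + preposition + stem.
word, labels = segmentation_to_labels("w+b+ktAb")
restored = labels_to_segmentation(word, labels)
```

Framing the task this way means the model makes one decision per character, whereas the ranking formulation scores whole candidate segmentations of a word.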
