Mohamed Amine Kerkouri

Modeling Beyond MOS: Quality Assessment Models Must Integrate Context, Reasoning, and Multimodality

May 26, 2025

Abstract:This position paper argues that Mean Opinion Score (MOS), while historically foundational, is no longer sufficient as the sole supervisory signal for multimedia quality assessment models. MOS reduces rich, context-sensitive human judgments to a single scalar, obscuring semantic failures, user intent, and the rationale behind quality decisions. We contend that modern quality assessment models must integrate three interdependent capabilities: (1) context-awareness, to adapt evaluations to task-specific goals and viewing conditions; (2) reasoning, to produce interpretable, evidence-grounded justifications for quality judgments; and (3) multimodality, to align perceptual and semantic cues using vision-language models. We critique the limitations of current MOS-centric benchmarks and propose a roadmap for reform: richer datasets with contextual metadata and expert rationales, and new evaluation metrics that assess semantic alignment, reasoning fidelity, and contextual sensitivity. By reframing quality assessment as a contextual, explainable, and multimodal modeling task, we aim to catalyze a shift toward more robust, human-aligned, and trustworthy evaluation systems.

Shifts in Doctors' Eye Movements Between Real and AI-Generated Medical Images

Apr 21, 2025

Abstract:Eye-tracking analysis plays a vital role in medical imaging, providing key insights into how radiologists visually interpret and diagnose clinical cases. In this work, we first analyze radiologists' attention and agreement by measuring the distribution of various eye-movement patterns, including saccade direction, saccade amplitude, and their joint distribution. These metrics help uncover patterns in attention allocation and diagnostic strategies. Furthermore, we investigate whether and how doctors' gaze behavior shifts when viewing authentic (Real) versus deep-learning-generated (Fake) images. To achieve this, we examine fixation bias maps, focusing on first, last, short, and longest fixations independently, along with detailed saccade patterns, to quantify differences in gaze distribution and visual saliency between authentic and synthetic images.
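As an illustration of the saccade measures named in this abstract, the following is a minimal sketch of how saccade amplitude and direction could be derived from an ordered list of fixation points. The function name `saccade_features`, the pixel-coordinate input, and the angle convention are assumptions made for this example, not details taken from the paper.

```python
import math


def saccade_features(fixations):
    """Return (amplitude, direction_deg) for each consecutive fixation pair.

    fixations: list of (x, y) points in viewing order (hypothetical input format).
    Amplitude is the Euclidean distance between successive fixations.
    Direction is atan2(dy, dx) in degrees, wrapped to [0, 360).
    With image coordinates (y growing downward), 90 degrees points down the image.
    """
    features = []
    for (x0, y0), (x1, y1) in zip(fixations, fixations[1:]):
        dx, dy = x1 - x0, y1 - y0
        amplitude = math.hypot(dx, dy)
        direction = math.degrees(math.atan2(dy, dx)) % 360.0
        features.append((amplitude, direction))
    return features
```

Histogramming the amplitudes, the directions, or the pairs together would give the kind of marginal and joint distributions the abstract refers to.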

Quantization Effects on Neural Networks Perception: How would quantization change the perceptual field of vision models?

Mar 15, 2024

Abstract:Neural network quantization is an essential technique for deploying models on resource-constrained devices. However, its impact on model perceptual fields, particularly regarding class activation maps (CAMs), remains a significant area of investigation. In this study, we explore how quantization alters the spatial recognition ability of the perceptual field of vision models, shedding light on the alignment between CAMs and visual saliency maps across various architectures. Leveraging a dataset of 10,000 images from ImageNet, we rigorously evaluate six diverse foundational CNNs: VGG16, ResNet50, EfficientNet, MobileNet, SqueezeNet, and DenseNet. We uncover nuanced changes in CAMs and their alignment with human visual saliency maps through systematic quantization techniques applied to these models. Our findings reveal the varying sensitivities of different architectures to quantization and underscore its implications for real-world applications in terms of model performance and interpretability. The primary contribution of this work revolves around deepening our understanding of neural network quantization, providing insights crucial for deploying efficient and interpretable models in practical settings.
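The abstract does not state how alignment between CAMs and saliency maps is scored. One common choice in saliency evaluation is the Pearson correlation coefficient between the two maps; the sketch below assumes that metric purely for illustration, and the function name `pearson_cc` and the list-of-rows map format are hypothetical.

```python
import math


def pearson_cc(map_a, map_b):
    """Pearson correlation between two equally sized 2D maps (lists of rows).

    Returns a value in [-1, 1]; returns 0.0 if either map is constant,
    since the correlation is undefined in that case.
    """
    a = [v for row in map_a for v in row]
    b = [v for row in map_b for v in row]
    if len(a) != len(b) or not a:
        raise ValueError("maps must be non-empty and have the same size")
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0.0 or var_b == 0.0:
        return 0.0
    return cov / math.sqrt(var_a * var_b)
```

Under this assumption, comparing the score of a full-precision model's CAM with that of its quantized counterpart against the same human saliency map would indicate whether quantization moved the model's focus toward or away from human attention.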

Shifting Focus: From Global Semantics to Local Prominent Features in Swin-Transformer for Knee Osteoarthritis Severity Assessment

Mar 15, 2024

Abstract:Conventional imaging diagnostics frequently encounter bottlenecks due to manual inspection, which can lead to delays and inconsistencies. Although deep learning offers a pathway to automation and enhanced accuracy, foundational models in computer vision often emphasize global context at the expense of local details, which are vital for medical imaging diagnostics. To address this, we harness the Swin Transformer's capacity to discern extended spatial dependencies within images through its hierarchical framework. Our novel contribution lies in refining local feature representations, orienting them specifically toward the final distribution of the classifier. This method ensures that local features are not only preserved but are also enriched with task-specific information, enhancing their relevance and detail at every hierarchical level. By implementing this strategy, our model demonstrates significant robustness and precision, as evidenced by extensive validation on two established benchmarks for Knee OsteoArthritis (KOA) grade classification. These results highlight our approach's effectiveness and its promising implications for the future of medical imaging diagnostics. Our implementation is available at https://github.com/mtliba/KOA_NLCS2024

Insights into Classifying and Mitigating LLMs' Hallucinations

Nov 14, 2023

Abstract:The widespread adoption of large language models (LLMs) across diverse AI applications is proof of the outstanding achievements obtained in several tasks, such as text mining, text generation, and question answering. However, LLMs are not exempt from drawbacks. One of the most concerning is the emerging problematic phenomenon known as "Hallucinations". They manifest in text generation systems, particularly in question-answering systems reliant on LLMs, potentially resulting in false or misleading information propagation. This paper delves into the underlying causes of AI hallucination and elucidates its significance in artificial intelligence. In particular, Hallucination classification is tackled over several tasks (Machine Translation, Question and Answer, Dialog Systems, Summarisation Systems, Knowledge Graph with LLMs, and Visual Question Answer). Additionally, we explore potential strategies to mitigate hallucinations, aiming to enhance the overall reliability of LLMs. Our research addresses this critical issue within the HeReFaNMi (Health-Related Fake News Mitigation) project, generously supported by NGI Search, dedicated to combating Health-Related Fake News dissemination on the Internet. This endeavour represents a concerted effort to safeguard the integrity of information dissemination in an age of evolving AI technologies.

Automatic diagnosis of knee osteoarthritis severity using Swin transformer

Jul 10, 2023

Abstract:Knee osteoarthritis (KOA) is a widespread condition that can cause chronic pain and stiffness in the knee joint. Early detection and diagnosis are crucial for successful clinical intervention and management to prevent severe complications, such as loss of mobility. In this paper, we propose an automated approach that employs the Swin Transformer to predict the severity of KOA. Our model uses publicly available radiographic datasets with Kellgren and Lawrence scores to enable early detection and severity assessment. To improve the accuracy of our model, we employ a multi-prediction head architecture that utilizes multi-layer perceptron classifiers. Additionally, we introduce a novel training approach that reduces the data drift between multiple datasets to ensure the generalization ability of the model. The results of our experiments demonstrate the effectiveness and feasibility of our approach in predicting KOA severity accurately.

An Inter-observer consistent deep adversarial training for visual scanpath prediction

Nov 14, 2022

Abstract:The visual scanpath is a sequence of points through which the human gaze moves while exploring a scene. It represents a fundamental concept upon which visual attention research is based. As a result, the ability to predict scanpaths has emerged as an important task in recent years. In this paper, we propose an inter-observer consistent adversarial training approach for scanpath prediction through a lightweight deep neural network. The adversarial method employs a discriminative neural network as a dynamic loss that is better suited to model the natural stochastic phenomenon while maintaining consistency between the distributions related to the subjective nature of scanpaths traversed by different observers. Through extensive testing, we show the competitiveness of our approach with respect to state-of-the-art methods.

Deep-based quality assessment of medical images through domain adaptation

Oct 19, 2022

Abstract:Predicting the quality of multimedia content is often needed in different fields. In some applications, quality metrics are crucial and have a high impact, as they can affect decision making such as diagnosis from medical multimedia. In this paper, we focus on such applications by proposing an efficient and shallow model for predicting the quality of medical images without reference from a small amount of annotated data. Our model is based on convolution self-attention that aims to model complex representations from relevant local characteristics of images, and which slides over the image to interpolate the global quality score. We also apply domain adaptation learning in unsupervised and semi-supervised manners. The proposed model is evaluated on a dataset composed of several images and their corresponding subjective scores. The obtained results show the efficiency of the proposed method, as well as the relevance of applying domain adaptation to generalize over different multimedia domains regarding the downstream task of perceptual quality prediction. Funded by the TIC-ART project, Regional fund (Region Centre-Val de Loire).

A domain adaptive deep learning solution for scanpath prediction of paintings

Sep 22, 2022

Abstract:Cultural heritage understanding and preservation is an important issue for society as it represents a fundamental aspect of its identity. Paintings represent a significant part of cultural heritage, and are continuously the subject of study. However, the way viewers perceive paintings is strictly related to the so-called HVS (Human Vision System) behaviour. This paper focuses on the eye-movement analysis of viewers during the visual experience of a certain number of paintings. In more detail, we introduce a new approach to predicting human visual attention, which impacts several cognitive functions for humans, including the fundamental understanding of a scene, and then extend it to painting images. The proposed new architecture ingests images and returns scanpaths, a sequence of points featuring a high likelihood of catching viewers' attention. We use an FCNN (Fully Convolutional Neural Network), in which we exploit a differentiable channel-wise selection and Soft-Argmax modules. We also incorporate learnable Gaussian distributions onto the network bottleneck to simulate visual attention process bias in natural scene images. Furthermore, to reduce the effect of shifts between different domains (i.e. natural images, paintings), we encourage the model to learn unsupervised general features from other domains using a gradient reversal classifier. The results obtained by our model outperform existing state-of-the-art ones in terms of accuracy and efficiency.

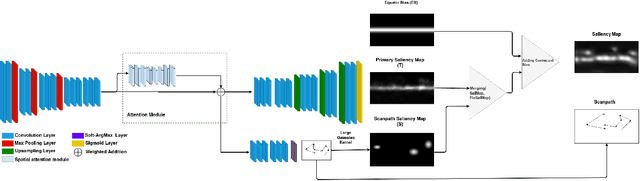

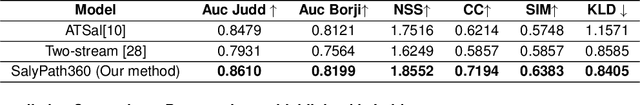

SalyPath360: Saliency and Scanpath Prediction Framework for Omnidirectional Images

Jan 01, 2022

Abstract:This paper introduces a new framework to predict visual attention of omnidirectional images. The key setup of our architecture is the simultaneous prediction of the saliency map and a corresponding scanpath for a given stimulus. The framework implements a fully convolutional encoder-decoder neural network augmented by an attention module to generate representative saliency maps. In addition, an auxiliary network is employed to generate probable viewport center fixation points through the SoftArgMax function, which allows fixation points to be derived from feature maps. To take advantage of the scanpath prediction, an adaptive joint probability distribution model is then applied to construct the final unbiased saliency map by leveraging the encoder-decoder-based saliency map and the scanpath-based saliency heatmap. The proposed framework was evaluated in terms of saliency and scanpath prediction, and the results were compared to state-of-the-art methods on the Salient360! dataset. The results showed the relevance of our framework and the benefits of such an architecture for further omnidirectional visual attention prediction tasks.
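To make the SoftArgMax step more concrete, here is a minimal sketch of a 2D soft-argmax that turns one feature map into an expected (row, column) location. It is a generic illustration of the operation, not the paper's implementation; the function name `soft_argmax_2d` and the sharpness parameter `beta` are assumptions introduced for this example.

```python
import math


def soft_argmax_2d(feature_map, beta=1.0):
    """Return the softmax-weighted expected (row, col) of a 2D map.

    feature_map: list of rows of floats (hypothetical input format).
    beta: assumed sharpness factor; larger values move the result
          closer to the location of the hard argmax.
    """
    # Subtract the maximum before exponentiating for numerical stability.
    peak = max(max(row) for row in feature_map)
    total = 0.0
    expected_row = 0.0
    expected_col = 0.0
    for r, row in enumerate(feature_map):
        for c, value in enumerate(row):
            weight = math.exp(beta * (value - peak))
            total += weight
            expected_row += weight * r
            expected_col += weight * c
    return expected_row / total, expected_col / total
```

Unlike a hard argmax, this weighted average is differentiable with respect to the map values, which is what makes it usable inside a network trained end to end.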
