Misha Teplitskiy

The Noisy Path from Source to Citation: Measuring How Scholars Engage with Past Research

Feb 27, 2025Abstract:Academic citations are widely used for evaluating research and tracing knowledge flows. Such uses typically rely on raw citation counts and neglect variability in citation types. In particular, citations can vary in their fidelity as original knowledge from cited studies may be paraphrased, summarized, or reinterpreted, possibly wrongly, leading to variation in how much information changes from cited to citing paper. In this study, we introduce a computational pipeline to quantify citation fidelity at scale. Using full texts of papers, the pipeline identifies citations in citing papers and the corresponding claims in cited papers, and applies supervised models to measure fidelity at the sentence level. Analyzing a large-scale multi-disciplinary dataset of approximately 13 million citation sentence pairs, we find that citation fidelity is higher when authors cite papers that are 1) more recent and intellectually close, 2) more accessible, and 3) the first author has a lower H-index and the author team is medium-sized. Using a quasi-experiment, we establish the "telephone effect" - when citing papers have low fidelity to the original claim, future papers that cite the citing paper and the original have lower fidelity to the original. Our work reveals systematic differences in citation fidelity, underscoring the limitations of analyses that rely on citation quantity alone and the potential for distortion of evidence.

The Wisdom of Polarized Crowds

Nov 29, 2017

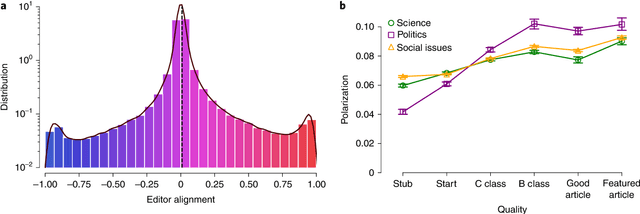

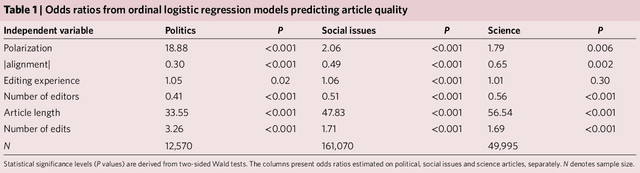

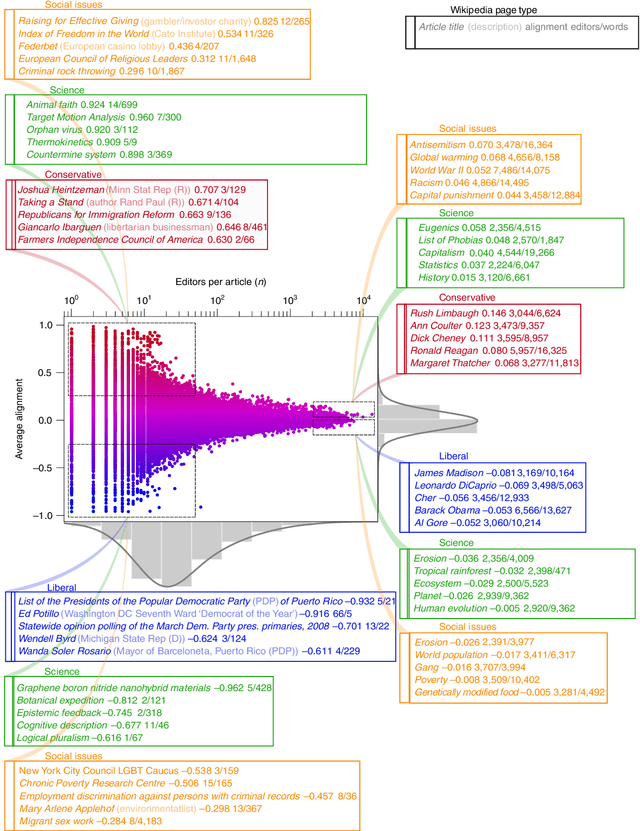

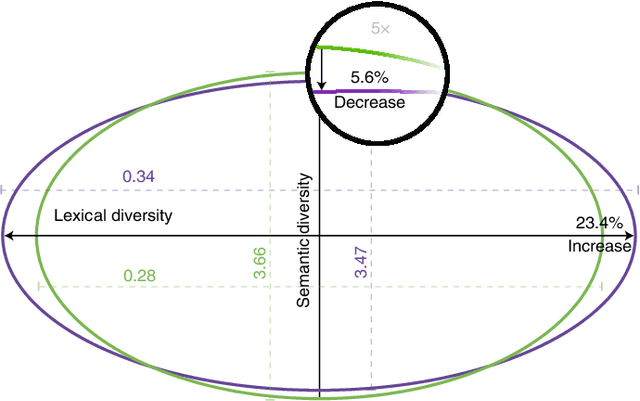

Abstract:As political polarization in the United States continues to rise, the question of whether polarized individuals can fruitfully cooperate becomes pressing. Although diversity of individual perspectives typically leads to superior team performance on complex tasks, strong political perspectives have been associated with conflict, misinformation and a reluctance to engage with people and perspectives beyond one's echo chamber. It is unclear whether self-selected teams of politically diverse individuals will create higher or lower quality outcomes. In this paper, we explore the effect of team political composition on performance through analysis of millions of edits to Wikipedia's Political, Social Issues, and Science articles. We measure editors' political alignments by their contributions to conservative versus liberal articles. A survey of editors validates that those who primarily edit liberal articles identify more strongly with the Democratic party and those who edit conservative ones with the Republican party. Our analysis then reveals that polarized teams---those consisting of a balanced set of politically diverse editors---create articles of higher quality than politically homogeneous teams. The effect appears most strongly in Wikipedia's Political articles, but is also observed in Social Issues and even Science articles. Analysis of article "talk pages" reveals that politically polarized teams engage in longer, more constructive, competitive, and substantively focused but linguistically diverse debates than political moderates. More intense use of Wikipedia policies by politically diverse teams suggests institutional design principles to help unleash the power of politically polarized teams.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge