Mingyi Liu

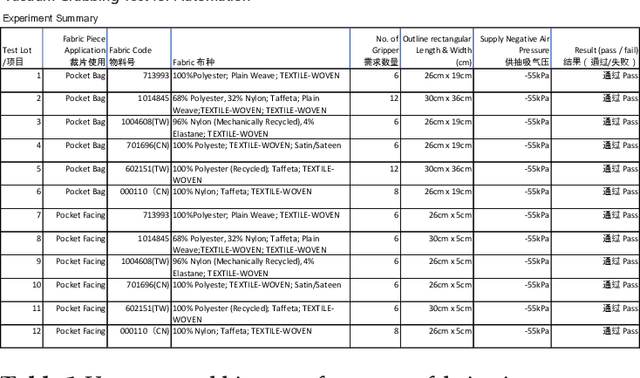

Design and Experimental Study of Vacuum Suction Grabbing Technology to Grasp Fabric Piece

Aug 18, 2024

Abstract: The primary objective of this study was to design a grabbing technique, and to determine the vacuum suction gripper and its design parameters, for the pocket welting operation in apparel manufacturing. It presents the application of vacuum suction in grabbing technology, a technique that has revolutionized the handling and manipulation of various fabric materials across the garment industry. Vacuum suction, being non-intrusive and non-invasive, offers several advantages over traditional grabbing methods. It is particularly useful in scenarios where soft woven fabric and air-impermeable fabric items need to be handled with utmost care. The paper delves into the working principles of vacuum suction, its various components, and the underlying physics involved. Furthermore, it explores the various applications of vacuum suction in garment industry automation. The paper also highlights the challenges and limitations of vacuum suction technology and suggests potential areas for further research and development.
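As a rough illustration of the physics the abstract refers to, the theoretical holding force of a suction cup is commonly approximated as the pressure difference times the contact area, divided by a safety factor. The function name, the numeric values, and the safety factor below are illustrative assumptions and are not taken from the paper; air-permeable fabrics leak, so real holding forces would typically be lower.

```python
import math

def suction_holding_force(pressure_diff_pa, cup_diameter_m, safety_factor=2.0):
    """Theoretical holding force (N) of one circular suction cup.

    Uses the textbook approximation F = delta_P * A / S. All inputs are
    hypothetical; air leakage through the fabric is not modeled.
    """
    area = math.pi * (cup_diameter_m / 2) ** 2  # contact area in m^2
    return pressure_diff_pa * area / safety_factor

# Assumed example: 20 kPa below ambient pressure, 20 mm cup diameter.
force = suction_holding_force(pressure_diff_pa=20_000, cup_diameter_m=0.02)
print(f"{force:.2f} N")
```

Comparing this force with the weight of a fabric piece gives a first estimate of how many cups, or how much vacuum, a gripper would need.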

Who Should I Engage with At What Time? A Missing Event Aware Temporal Graph Neural Network

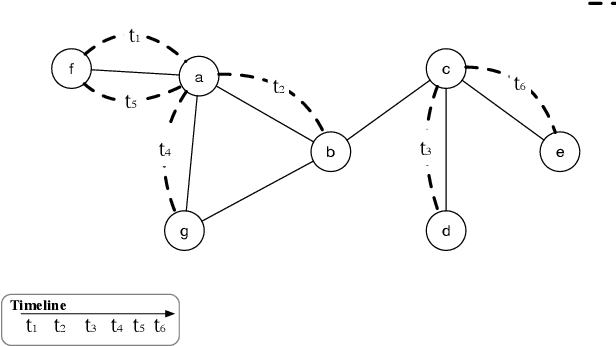

Jan 20, 2023

Abstract: Temporal graph neural networks have recently received significant attention due to their wide application scenarios, such as bioinformatics, knowledge graphs, and social networks. Several temporal graph neural networks achieve remarkable results. However, these works focus on future event prediction and are performed under the assumption that all historical events are observable. In real-world applications, events are not always observable, and estimating event time is as important as predicting future events. In this paper, we propose MTGN, a missing event-aware temporal graph neural network, which uniformly models evolving graph structure and timing of events to support predicting what will happen in the future and when it will happen. MTGN models the dynamics of both observed and missing events as two coupled temporal point processes, thereby incorporating the effects of missing events into the network. Experimental results on several real-world temporal graphs demonstrate that MTGN significantly outperforms existing methods with up to 89% and 112% more accurate time and link prediction. Code can be found at https://github.com/HIT-ICES/TNNLS-MTGN.

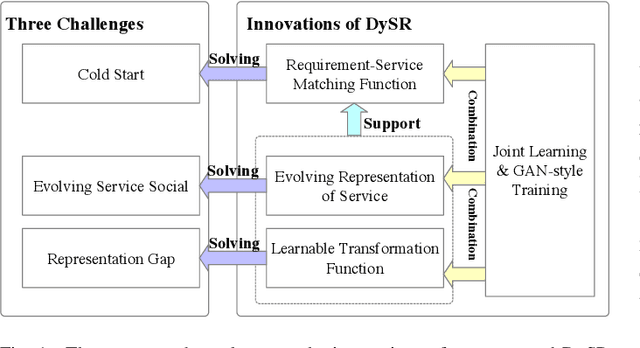

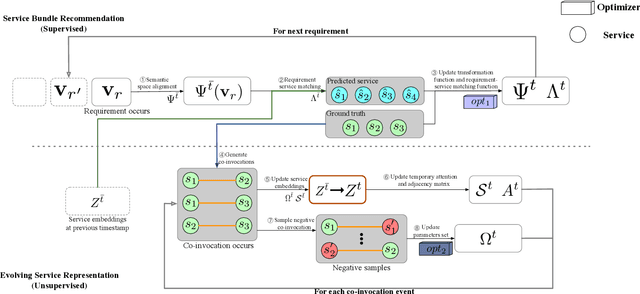

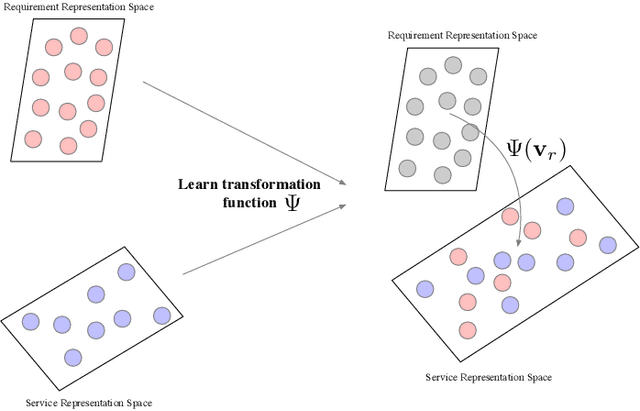

DySR: A Dynamic Representation Learning and Aligning based Model for Service Bundle Recommendation

Aug 07, 2021

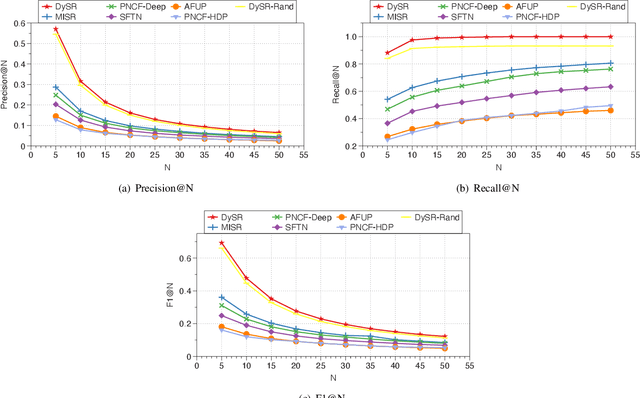

Abstract: An increasing number and diversity of services are available, which results in significant challenges to effectively reusing services during requirement satisfaction. Many service bundle recommendation studies have achieved remarkable results. However, there is still plenty of room for improvement in the performance of these methods. The fundamental problem with these studies is that they ignore the evolution of services over time and the representation gap between services and requirements. In this paper, we propose a dynamic representation learning and aligning based model called DySR to tackle these issues. DySR eliminates the representation gap between services and requirements by learning a transformation function and obtains service representations in an evolving social environment through dynamic graph representation learning. Extensive experiments conducted on a real-world dataset from ProgrammableWeb show that DySR outperforms existing state-of-the-art methods in commonly used evaluation metrics, improving $F1@5$ from $36.1\%$ to $69.3\%$.
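For readers unfamiliar with the $F1@5$ metric reported above, the following is a minimal sketch of how F1 at a cutoff $k$ is commonly computed for a single requirement. The function name and the toy service names are hypothetical, and the paper may use a slightly different convention (for example, dividing precision by $k$ even when fewer than $k$ items are recommended).

```python
def f1_at_k(recommended, relevant, k=5):
    """F1 score over the top-k recommended items for one query.

    `recommended` is a ranked list without duplicates; `relevant` is the
    collection of ground-truth items. Both are hypothetical inputs.
    """
    top_k = recommended[:k]
    relevant = set(relevant)
    hits = sum(1 for item in top_k if item in relevant)
    if hits == 0:
        return 0.0
    precision = hits / len(top_k)
    recall = hits / len(relevant)
    return 2 * precision * recall / (precision + recall)

# Toy example: 2 of the top 5 recommendations are among 3 relevant services.
ranked = ["maps", "sms", "payments", "weather", "search", "email"]
truth = {"maps", "payments", "email"}
score = f1_at_k(ranked, truth, k=5)
print(score)
```

A dataset-level figure would then be obtained by averaging this score over all test requirements.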

Learning Representation over Dynamic Graph using Aggregation-Diffusion Mechanism

Jun 03, 2021

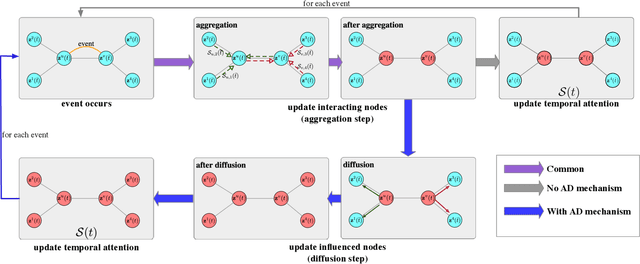

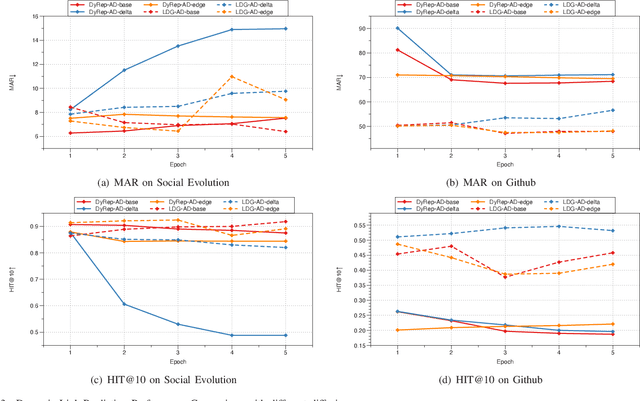

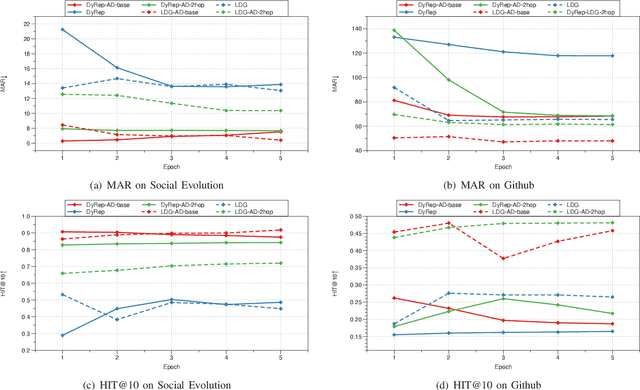

Abstract: Representation learning on graphs that evolve has recently received significant attention due to its wide application scenarios, such as bioinformatics, knowledge graphs, and social networks. The propagation of information in graphs is important in learning dynamic graph representations, and most of the existing methods achieve this by aggregation. However, relying only on aggregation to propagate information in dynamic graphs can result in delays in information propagation and thus affect the performance of the method. To alleviate this problem, we propose an aggregation-diffusion (AD) mechanism in which a node actively propagates information to its neighbors by diffusion after updating its embedding through the aggregation mechanism. In experiments on two real-world datasets in the dynamic link prediction task, the AD mechanism outperforms the baseline models that only use aggregation to propagate information. We further conduct extensive experiments to discuss the influence of different factors in the AD mechanism.
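The idea of aggregating first and then diffusing can be sketched with a toy example. This is not the authors' model: the mean-based aggregation, the fixed diffusion rate, and all names below are simplifying assumptions chosen only to show why diffusion lets a node that took no part in an event still receive fresh information immediately.

```python
def aggregate(node, emb, adj):
    """Aggregation: the node pulls the mean of its own and its neighbors' embeddings."""
    group = [emb[node]] + [emb[n] for n in adj[node]]
    dim = len(emb[node])
    return [sum(vec[i] for vec in group) / len(group) for i in range(dim)]

def diffuse(node, delta, emb, adj, rate=0.5):
    """Diffusion: the node pushes a scaled copy of its change to every neighbor."""
    for n in adj[node]:
        emb[n] = [x + rate * d for x, d in zip(emb[n], delta)]

def on_new_edge(u, v, emb, adj):
    """Handle one edge event: update both endpoints, then diffuse each change."""
    adj[u].add(v)
    adj[v].add(u)
    for node in (u, v):
        old = emb[node]
        emb[node] = aggregate(node, emb, adj)
        delta = [new - prev for new, prev in zip(emb[node], old)]
        diffuse(node, delta, emb, adj)

# Toy graph (assumed): "c" is already linked to "b"; a new edge a-b arrives.
emb = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [0.0, 0.0]}
adj = {"a": set(), "b": {"c"}, "c": {"b"}}
on_new_edge("a", "b", emb, adj)
print(emb["c"])  # "c" was not part of the event, yet its embedding has changed
```

With aggregation alone, `c` would only learn about the new edge the next time it takes part in an event itself, which is the delay the abstract describes.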

LTP: A New Active Learning Strategy for CRF-Based Named Entity Recognition

Jan 08, 2020

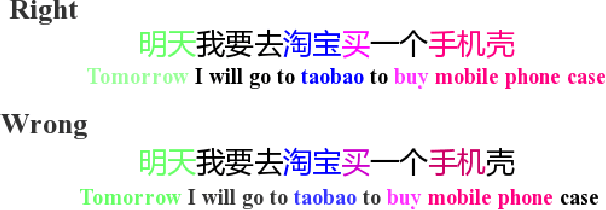

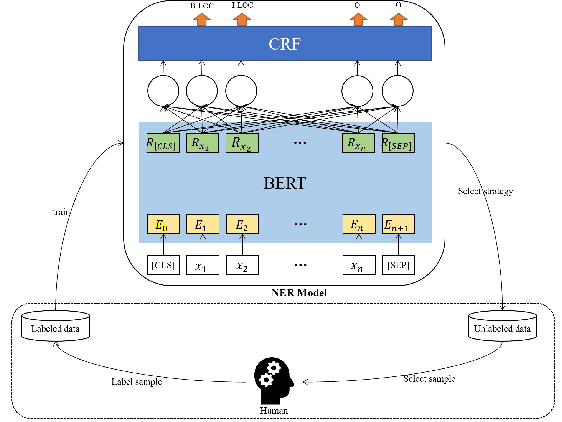

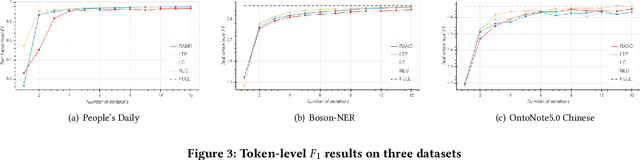

Abstract: In recent years, deep learning has achieved great success in many natural language processing tasks including named entity recognition. The shortcoming is that a large amount of manually-annotated data is usually required. Previous studies have demonstrated that both transfer learning and active learning can reduce the cost of data annotation through their respective advantages, but there is still plenty of room for improvement. We assume that the two methods can complement each other when combined, so that the model could be trained more accurately with less labelled data, and the active learning method could help the transfer learning method accurately select the minimum data samples for iterative learning. However, in real applications we found this approach is challenging because the sample selection of traditional active learning strategies depends only on the final probability value of the model output, and this makes it quite difficult to evaluate the quality of the selected data samples. In this paper, we first examine traditional active learning strategies in a specific case of BERT-CRF that has been widely used in named entity recognition. Then we propose an uncertainty-based active learning strategy called Lowest Token Probability (LTP) which considers not only the final output but also the intermediate results. We test LTP on multiple datasets, and the experiments show that LTP performs better than traditional strategies (including LC and NLC) on both token-level $F_1$ and sentence-level accuracy, especially in complex imbalanced datasets.
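The abstract does not spell out the LTP formula, so the sketch below shows only one plausible reading of the name: score each unlabelled sentence by the probability of its least confident token, and send the highest-scoring sentences to annotators. The function names and the per-token probabilities (assumed to come from the tagger, for instance as CRF marginals of the predicted labels) are hypothetical; the paper should be consulted for the exact definition.

```python
def lowest_token_probability_score(token_probs):
    """Uncertainty score: 1 minus the probability of the least confident token.

    `token_probs` holds one assumed probability per token for the label the
    model predicted at that position.
    """
    return 1.0 - min(token_probs)

def select_for_annotation(pool, budget):
    """Return the ids of the `budget` most uncertain sentences in the pool."""
    ranked = sorted(
        pool,
        key=lambda sid: lowest_token_probability_score(pool[sid]),
        reverse=True,
    )
    return ranked[:budget]

# Toy pool (assumed values): sentence id -> per-token probabilities.
pool = {
    "s1": [0.99, 0.98, 0.97],
    "s2": [0.99, 0.40, 0.95],
    "s3": [0.90, 0.85, 0.88],
}
selected = select_for_annotation(pool, budget=2)
print(selected)  # ['s2', 's3']
```

Here `s2` ranks first because a single very uncertain token dominates its score, a signal that a sentence-level probability over the whole label sequence could dilute.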
