Mingshan Xie

Fermi-Bose Machine

Apr 21, 2024

Abstract: Unlike human cognitive processing, deep neural networks trained by backpropagation are easily fooled by adversarial examples. To learn semantically meaningful representations, we discard backpropagation and instead propose a local contrastive learning scheme in which the hidden-layer representations of inputs bearing the same label shrink together (akin to bosons), while those of inputs with different labels repel each other (akin to fermions). This layer-wise learning is local in nature, which makes it biologically plausible. A statistical mechanics analysis shows that the target fermion-pair distance is a key parameter. Moreover, applying this local contrastive learning to the MNIST benchmark dataset demonstrates that the adversarial vulnerability of a standard perceptron can be greatly mitigated by tuning the target distance, i.e., by controlling the geometric separation of prototype manifolds.
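To make the attract/repel idea in the abstract above concrete, here is a minimal sketch of a pairwise contrastive loss on one hidden layer. It uses the classic margin-based contrastive form as a stand-in; the abstract does not state the paper's actual loss, so the function name `pair_loss`, the Euclidean distance, and the quadratic penalties are all illustrative assumptions.

```python
import numpy as np

def pair_loss(h_a, h_b, same_label, target_distance):
    """Hypothetical per-pair loss on hidden representations h_a and h_b.

    Assumption: a standard margin-based contrastive loss, not the
    paper's own objective.
    """
    d = np.linalg.norm(h_a - h_b)  # Euclidean distance between the pair
    if same_label:
        # "Boson-like" pair: any separation is penalized, so the pair shrinks.
        return 0.5 * d ** 2
    # "Fermion-like" pair: penalized only while closer than the target distance.
    return 0.5 * max(0.0, target_distance - d) ** 2

h_a = np.array([1.0, 0.0])
h_b = np.array([0.0, 0.0])

same = pair_loss(h_a, h_b, True, target_distance=2.0)
diff_close = pair_loss(h_a, h_b, False, target_distance=2.0)
diff_far = pair_loss(h_a, h_b, False, target_distance=0.5)
```

In this sketch, raising `target_distance` pushes differently labeled representations further apart before the repulsive term switches off, which is one simple way to picture the role the abstract assigns to the target distance.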

Equivalence between algorithmic instability and transition to replica symmetry breaking in perceptron learning systems

Nov 26, 2021

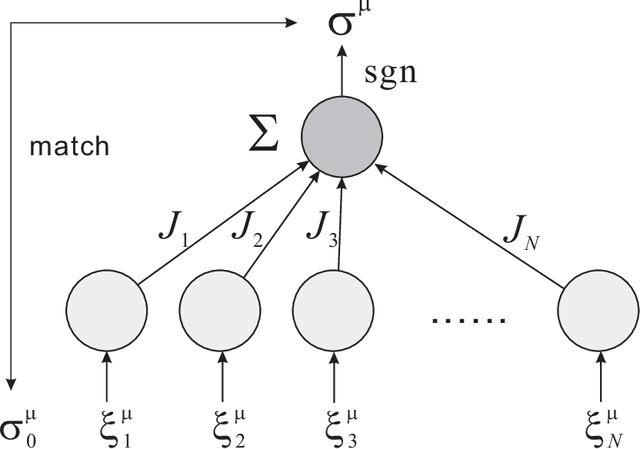

Abstract: The binary perceptron is a fundamental model of supervised learning in non-convex optimization, which lies at the root of popular deep learning. The binary perceptron can classify random high-dimensional data by computing the marginal probabilities of binary synapses. However, the relationship between algorithmic instability and the equilibrium analysis of the model remains elusive. Here, we establish this relationship by showing that the instability condition around the algorithmic fixed point is identical to the instability condition for breaking the replica-symmetric saddle-point solution of the free energy function. Our analysis therefore provides insights toward bridging the gap between non-convex learning dynamics and the statistical mechanics properties of more complex neural networks.
