Milad Ganjalizadeh

Communication-Efficient Orchestrations for URLLC Service via Hierarchical Reinforcement Learning

Jul 25, 2023

Abstract: Ultra-reliable low latency communications (URLLC) service is envisioned to enable use cases with strict reliability and latency requirements in 5G. One approach for enabling URLLC services is to leverage Reinforcement Learning (RL) to efficiently allocate wireless resources. However, with conventional RL methods, the decision variables (though deployed at various network layers) are typically optimized in the same control loop, leading to significant practical limitations on the control loop's delay as well as excessive signaling and energy consumption. In this paper, we propose a multi-agent Hierarchical RL (HRL) framework that enables the implementation of multi-level policies with different control loop timescales. Agents with faster control loops are deployed closer to the base station, while those with slower control loops are at the edge or closer to the core network, providing high-level guidelines for low-level actions. On a use case from the prior art, we use our HRL framework to optimize the maximum number of retransmissions and the transmission power of industrial devices. Our extensive simulation results on the factory automation scenario show that the HRL framework achieves better performance than the baseline single-agent RL method, with significantly less signaling overhead and delay.

Device Selection for the Coexistence of URLLC and Distributed Learning Services

Dec 22, 2022

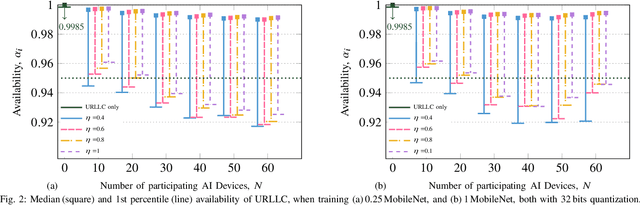

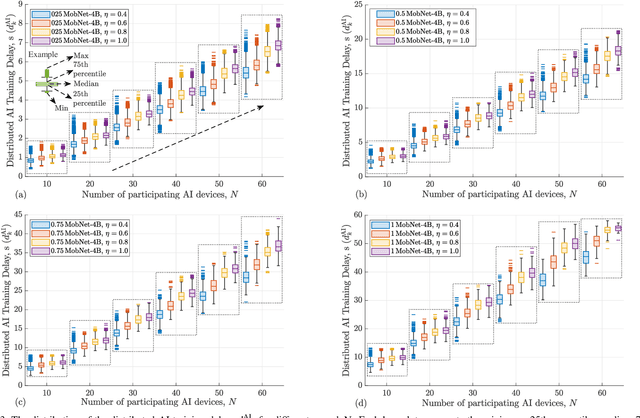

Abstract: Recent advances in distributed artificial intelligence (AI) have led to tremendous breakthroughs in various communication services, from fault-tolerant factory automation to smart cities. When distributed learning is run over a set of wirelessly connected devices, random channel fluctuations and the incumbent services running on the same network impact the performance of both distributed learning and the coexisting service. In this paper, we investigate a mixed service scenario where a distributed AI workflow and ultra-reliable low latency communication (URLLC) services run concurrently over a network. We propose a risk sensitivity-based formulation for device selection to minimize the AI training delays during its convergence period while ensuring that the operational requirements of the URLLC service are met. To address this challenging coexistence problem, we transform it into a deep reinforcement learning problem and solve it via a framework based on the soft actor-critic algorithm. We evaluate our solution with a realistic and 3GPP-compliant simulator for factory automation use cases. Our simulation results confirm that our solution can significantly decrease the training delay of the distributed AI service while keeping the URLLC availability above its required threshold and close to the scenario where URLLC solely consumes all network resources.

Interplay between Distributed AI Workflow and URLLC

Aug 02, 2022

Abstract: Distributed artificial intelligence (AI) has recently accomplished tremendous breakthroughs in various communication services, ranging from fault-tolerant factory automation to smart cities. When distributed learning is run over a set of wirelessly connected devices, random channel fluctuations and the incumbent services simultaneously running on the same network affect the performance of distributed learning. In this paper, we investigate the interplay between a distributed AI workflow and ultra-reliable low latency communication (URLLC) services running concurrently over a network. Using 3GPP-compliant simulations in a factory automation use case, we show the impact of various distributed AI settings (e.g., model size and the number of participating devices) on the convergence time of distributed AI and the application layer performance of URLLC. Unless we leverage the existing 5G-NR quality of service handling mechanisms to separate the traffic from the two services, our simulation results show that the impact of distributed AI on the availability of the URLLC devices is significant. Moreover, with proper setting of distributed AI (e.g., proper user selection), we can substantially reduce network resource utilization, leading to lower latency for distributed AI and higher availability for the URLLC users. Our results provide important insights for future 6G and AI standardization.
