Mikkel Kragh

Multi-Modal Obstacle Detection in Unstructured Environments with Conditional Random Fields

Mar 13, 2019

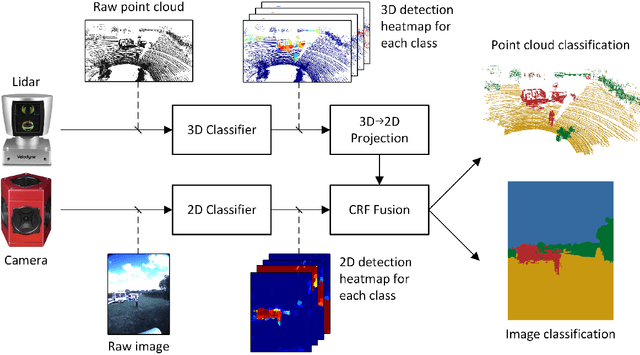

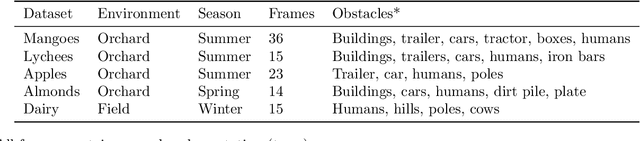

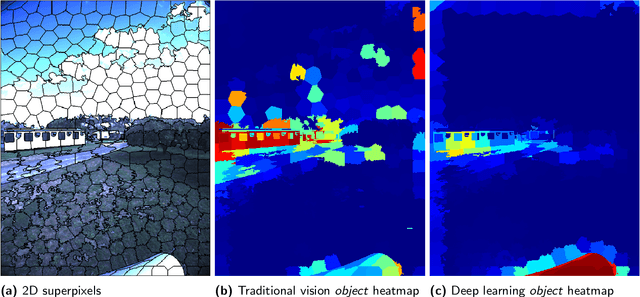

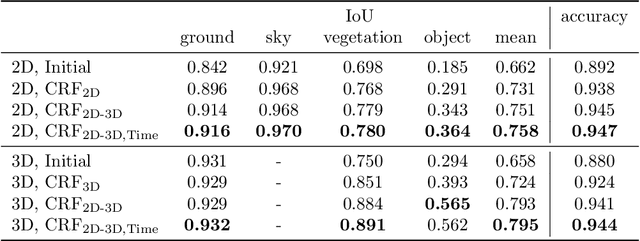

Abstract: Reliable obstacle detection and classification in rough and unstructured terrain such as agricultural fields or orchards remains a challenging problem. These environments involve large variations in both geometry and appearance, challenging perception systems that rely on only a single sensor modality. Geometrically, tall grass, fallen leaves, or terrain roughness can mistakenly be perceived as nontraversable or might even obscure actual obstacles. Likewise, traversable grass or dirt roads and obstacles such as trees and bushes might be visually ambiguous. In this paper, we combine appearance- and geometry-based detection methods by probabilistically fusing lidar and camera sensing with semantic segmentation using a conditional random field. We apply a state-of-the-art multimodal fusion algorithm from the scene analysis domain and adjust it for obstacle detection in agriculture with moving ground vehicles. This involves explicitly handling sparse point cloud data and exploiting spatial, temporal, and multimodal links between corresponding 2D and 3D regions. The proposed method was evaluated on a diverse data set, covering a dairy paddock and different orchards, gathered with a perception research robot in Australia. Results showed that for a two-class classification problem (ground and nonground), only the camera benefited from information provided by the other modality, with an increase in the mean classification score of 0.5%. However, as more classes were introduced (ground, sky, vegetation, and object), both modalities complemented each other, with improvements of 1.4% in 2D and 7.9% in 3D. Finally, introducing temporal links between successive frames resulted in improvements of 0.2% in 2D and 1.5% in 3D.
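
For orientation, a conditional random field with the three kinds of links named in the abstract can be written as a generic pairwise energy. This is only a textbook-style sketch: the symbols ($V_{2D}$, $V_{3D}$, $E_{s}$, $E_{m}$, $E_{t}$, $\psi$) are assumptions introduced here, not the paper's notation, and the actual potentials used by the authors may differ.

$$
E(\mathbf{y}) = \sum_{i \in V_{2D} \cup V_{3D}} \psi_i(y_i)
+ \sum_{(i,j) \in E_{s}} \psi^{s}_{ij}(y_i, y_j)
+ \sum_{(i,j) \in E_{m}} \psi^{m}_{ij}(y_i, y_j)
+ \sum_{(i,j) \in E_{t}} \psi^{t}_{ij}(y_i, y_j)
$$

Here $y_i$ is the class label of a 2D image region or 3D point cloud region, $\psi_i$ is a unary potential from that region's own classifier, $E_{s}$ links spatial neighbors within one modality, $E_{m}$ links corresponding 2D and 3D regions, and $E_{t}$ links regions across successive frames. Inference then seeks the labeling $\mathbf{y}$ with the lowest energy.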

Towards Inverse Sensor Mapping in Agriculture

May 22, 2018

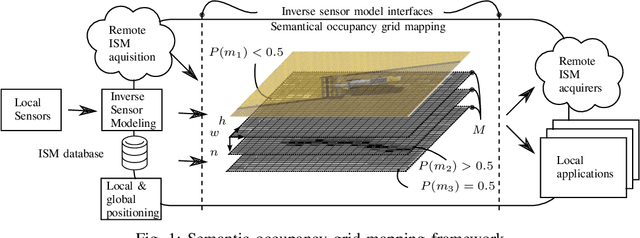

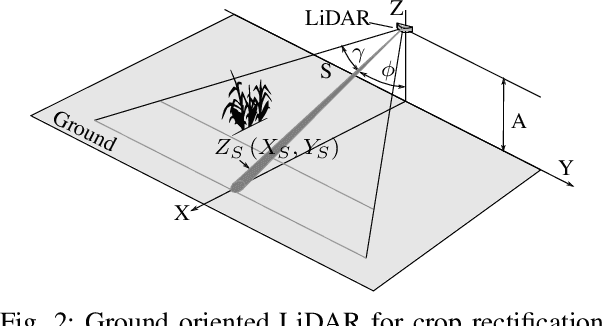

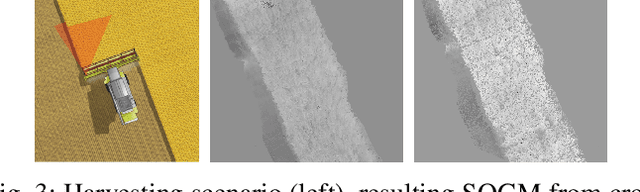

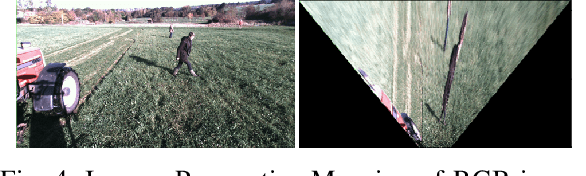

Abstract: In recent years, the drive of the Industry 4.0 initiative has enriched industrial and scientific approaches to building self-driving cars and smart factories. Agricultural applications benefit from both advances, as agricultural machines are, in effect, mobile factories that process the environment. Accurate perception of the surroundings is therefore crucial, because, in contrast to standard indoor production lines, the surroundings include the goods to be processed. Environmental processing requires accurate and robust quantification in order to correctly adjust processing parameters and detect hazards during processing. While today's approaches still implement functional elements based on a single, particular set of sensors, it may become apparent that a unified representation of the environment compiled from all available information sources would be more versatile, sufficient, and cost-effective. The key to this approach is developing a common information language from the data provided. In this paper, we introduce and discuss techniques to build so-called inverse sensor models that create a common information language among different, but typically agricultural, information providers. These can be current live sensor data, farm management systems, or long-term information generated from previous processing, drones, or satellites. In the context of Industry 4.0, this enables the interoperability of different agricultural systems and allows information transparency.
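
One common way to give inverse sensor models a shared "language" is to have each source report a per-cell probability on a common grid and to fuse those probabilities in log-odds form. The sketch below illustrates that general idea only; it is not taken from the paper, it assumes the sources are conditionally independent, and the names `fuse_cell`, `fuse_grids`, `lidar_grid`, and `satellite_grid` are hypothetical.

```python
import math


def logit(p):
    """Convert a probability in (0, 1) to log-odds."""
    return math.log(p / (1.0 - p))


def sigmoid(l):
    """Convert log-odds back to a probability."""
    return 1.0 / (1.0 + math.exp(-l))


def fuse_cell(probabilities, prior=0.5):
    """Fuse several inverse-sensor-model outputs for one grid cell.

    Assumption: each source is conditionally independent given the
    true cell state, so evidence adds up in log-odds space.
    """
    l0 = logit(prior)
    fused = l0 + sum(logit(p) - l0 for p in probabilities)
    return sigmoid(fused)


def fuse_grids(grids, prior=0.5):
    """Fuse equally sized 2D grids (lists of rows) cell by cell."""
    rows, cols = len(grids[0]), len(grids[0][0])
    return [
        [fuse_cell([g[r][c] for g in grids], prior) for c in range(cols)]
        for r in range(rows)
    ]


# Hypothetical example: two sources reporting obstacle probability
# on the same 2x2 grid (values are made up for illustration).
lidar_grid = [[0.7, 0.5], [0.2, 0.9]]
satellite_grid = [[0.7, 0.5], [0.8, 0.6]]
fused_grid = fuse_grids([lidar_grid, satellite_grid])
```

Cells where both sources agree become more certain, cells where a source reports 0.5 (no information) are left to the other source, and conflicting reports pull the fused value back toward the prior.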
