Mikael Boulic

Hennie

Transfer Learning on Transformers for Building Energy Consumption Forecasting -- A Comparative Study

Oct 18, 2024

Abstract: This study investigates the application of Transfer Learning (TL) on Transformer architectures to enhance building energy consumption forecasting. Transformers are a relatively new deep learning architecture, which has served as the foundation for groundbreaking technologies such as ChatGPT. While TL has been studied in the past, these studies considered either one TL strategy or used older deep learning models such as Recurrent Neural Networks or Convolutional Neural Networks. Here, we carry out an extensive empirical study on six different TL strategies and analyse their performance under varying feature spaces. In addition to the vanilla Transformer architecture, we also experiment with Informer and PatchTST, which are specifically designed for time series forecasting. We use 16 datasets from the Building Data Genome Project 2 to create building energy consumption forecasting models. Experimental results reveal that while TL is generally beneficial, especially when the target domain has no data, the exact TL strategy must be selected carefully to gain the maximum benefit. This decision largely depends on feature space properties such as the recorded weather features. We also note that PatchTST outperforms the other two Transformer variants (vanilla Transformer and Informer). We believe our findings will assist researchers in making informed decisions when using TL and Transformer architectures for building energy consumption forecasting.

LSTM-Autoencoder based Anomaly Detection for Indoor Air Quality Time Series Data

Apr 14, 2022

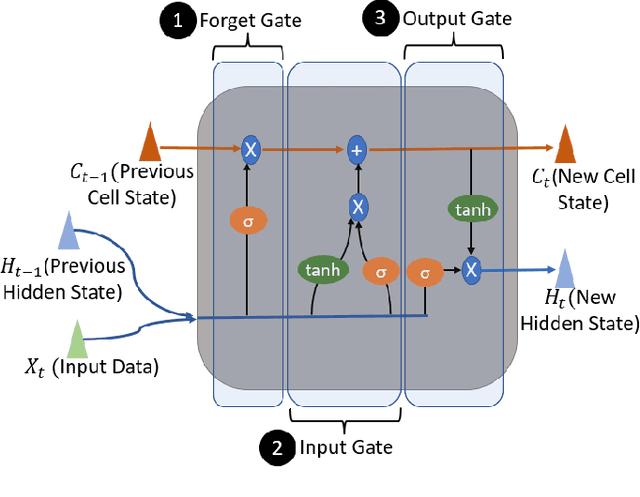

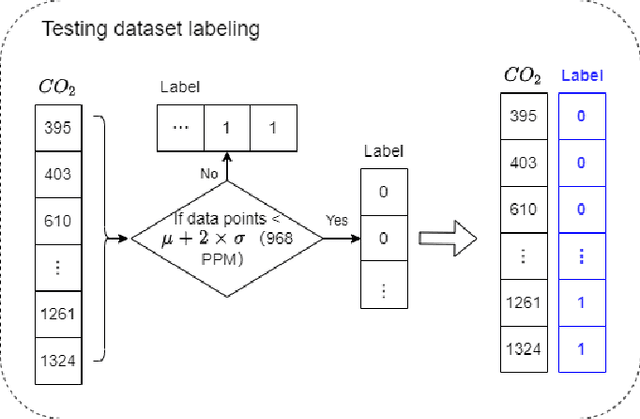

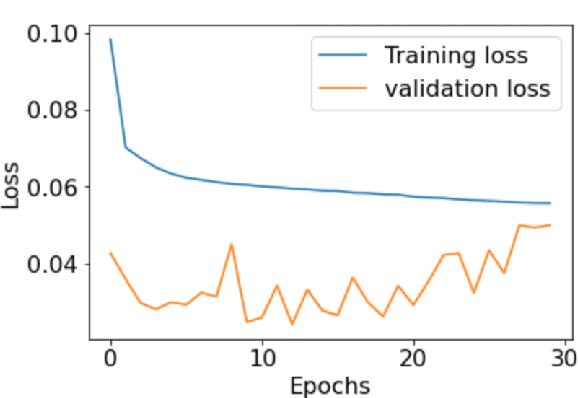

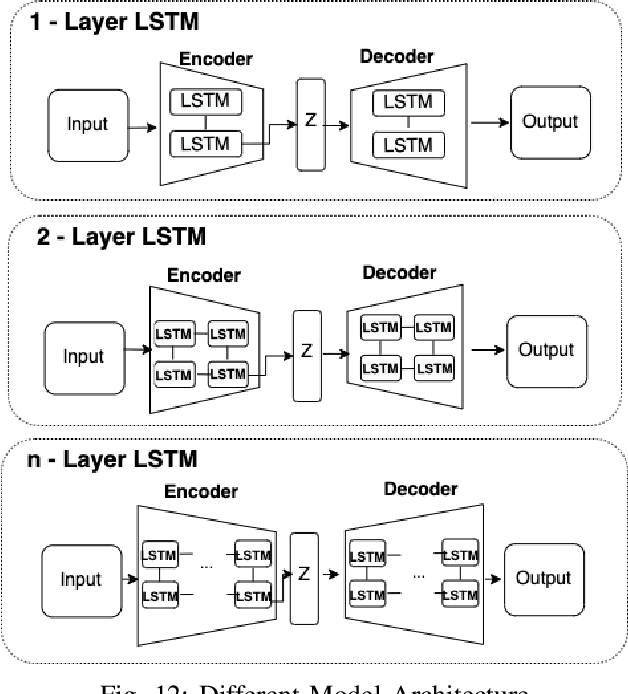

Abstract: Anomaly detection for indoor air quality (IAQ) data has become an important area of research as the quality of air is closely related to human health and well-being. However, traditional statistics and shallow machine learning-based approaches to anomaly detection in the IAQ area could not detect anomalies that involve correlations across several data points (often referred to as long-term dependencies). To address this issue, we propose a hybrid deep learning model that combines LSTM with an Autoencoder for anomaly detection tasks in IAQ. In our approach, the LSTM network comprises multiple LSTM cells that work with each other to learn the long-term dependencies of the data in a time-series sequence. The Autoencoder identifies the optimal threshold based on the reconstruction loss rates evaluated on every data point across all time-series sequences. Our experimental results, based on the Dunedin CO2 time-series dataset obtained through a real-world deployment in schools in New Zealand, demonstrate a very high and robust accuracy rate (99.50%) that outperforms other similar models.
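The thresholding idea mentioned in this abstract (flagging sequences whose reconstruction loss exceeds a threshold derived from normal data) can be illustrated with a minimal sketch. This is not the authors' model: the `reconstruct` function below is a hypothetical stand-in that returns the window mean in place of a trained LSTM-Autoencoder, and the mean-plus-`k`-standard-deviations rule, the window size, and the synthetic CO2-like values are all assumptions made for illustration.

```python
from statistics import mean, pstdev


def make_windows(series, size):
    """Split a series into overlapping windows of fixed length."""
    return [series[i:i + size] for i in range(len(series) - size + 1)]


def reconstruct(window):
    """Hypothetical stand-in for a trained LSTM-Autoencoder.

    A real model would encode and decode the sequence; here the
    'reconstruction' is simply the window mean repeated.
    """
    m = mean(window)
    return [m] * len(window)


def reconstruction_error(window):
    """Mean squared error between a window and its reconstruction."""
    recon = reconstruct(window)
    return mean((x - r) ** 2 for x, r in zip(window, recon))


def fit_threshold(train_series, size, k=3.0):
    """Assumed rule: mean + k * std of errors on normal training windows."""
    errors = [reconstruction_error(w) for w in make_windows(train_series, size)]
    return mean(errors) + k * pstdev(errors)


def detect(series, size, threshold):
    """Return start indices of windows whose error exceeds the threshold."""
    return [
        i for i, w in enumerate(make_windows(series, size))
        if reconstruction_error(w) > threshold
    ]


if __name__ == "__main__":
    # Synthetic CO2-like readings (ppm) with a regular pattern.
    normal = [400 + (i % 5) for i in range(50)]
    threshold = fit_threshold(normal, size=5)

    # Same pattern with one injected spike at index 20.
    observed = list(normal)
    observed[20] = 900
    print(detect(observed, size=5, threshold=threshold))
```

In this sketch, every window that overlaps the injected spike is flagged, while windows of the regular pattern stay at or below the threshold. Swapping `reconstruct` for a trained sequence model would leave the thresholding logic unchanged.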
