Michael St. Jules

Do Explanations Reflect Decisions? A Machine-centric Strategy to Quantify the Performance of Explainability Algorithms

Oct 29, 2019

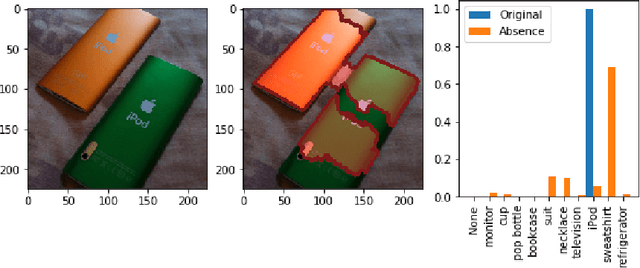

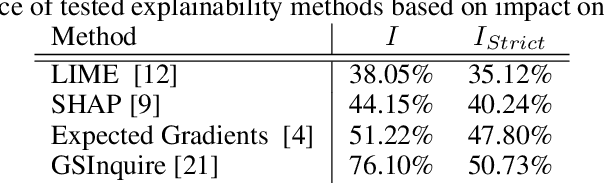

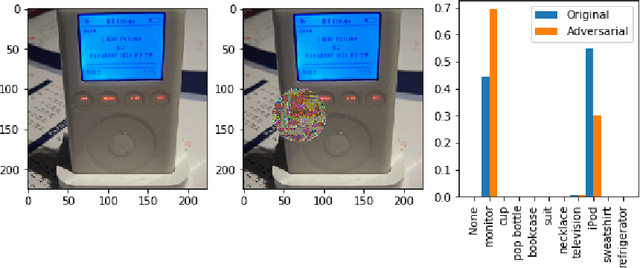

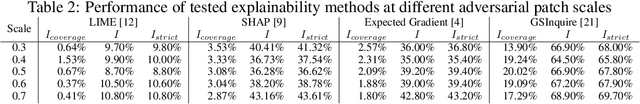

Abstract: There has been a significant surge of interest recently around the concept of explainable artificial intelligence (XAI), where the goal is to produce an interpretation for a decision made by a machine learning algorithm. Of particular interest is the interpretation of how deep neural networks make decisions, given the complexity and 'black box' nature of such networks. Given the infancy of the field, there has been very limited exploration into the assessment of the performance of explainability methods, with most evaluations centered around subjective visual interpretation of the produced interpretations. In this study, we explore a more machine-centric strategy for quantifying the performance of explainability methods on deep neural networks via the notion of decision-making impact analysis. We introduce two quantitative performance metrics: i) Impact Score, which assesses the percentage of critical factors with either strong confidence reduction impact or decision changing impact, and ii) Impact Coverage, which assesses the percentage coverage of adversarially impacted factors in the input. A comprehensive analysis using this approach was conducted on several state-of-the-art explainability methods (LIME, SHAP, Expected Gradients, GSInquire) on a ResNet-50 deep convolutional neural network using a subset of ImageNet for the task of image classification. Experimental results show that the critical regions identified by LIME within the tested images had the lowest impact on the decision-making process of the network (~38%), with progressive increase in decision-making impact for SHAP (~44%), Expected Gradients (~51%), and GSInquire (~76%). While by no means perfect, the hope is that the proposed machine-centric strategy helps push the conversation forward towards better metrics for evaluating explainability methods and improve trust in deep neural networks.
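The two metrics named in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation. The abstract does not say how "strong confidence reduction" is thresholded or how coverage is normalized, so the `reduction_threshold` of 0.5, the function names, and the choice to divide the overlap by the size of the adversarial region are all assumptions made for illustration. The inputs are assumed to come from re-running the network after the regions flagged by an explainability method have been removed from each image.

```python
import numpy as np

def impact_score(orig_labels, orig_conf, masked_labels, masked_conf,
                 reduction_threshold=0.5):
    """Fraction of samples where removing the flagged regions either changes
    the predicted label or strongly reduces confidence.

    masked_conf is assumed to be the confidence in the ORIGINAL label after
    masking. reduction_threshold is a hypothetical cut-off, not a value
    taken from the abstract.
    """
    orig_labels = np.asarray(orig_labels)
    masked_labels = np.asarray(masked_labels)
    orig_conf = np.asarray(orig_conf, dtype=float)
    masked_conf = np.asarray(masked_conf, dtype=float)

    decision_changed = masked_labels != orig_labels
    strong_reduction = masked_conf <= (1.0 - reduction_threshold) * orig_conf
    return float(np.mean(decision_changed | strong_reduction))

def impact_coverage(explanation_mask, adversarial_mask):
    """Fraction of the adversarially altered pixels that the explanation
    also flags (assumed normalization: overlap / adversarial area)."""
    explanation_mask = np.asarray(explanation_mask, dtype=bool)
    adversarial_mask = np.asarray(adversarial_mask, dtype=bool)
    adversarial_area = adversarial_mask.sum()
    if adversarial_area == 0:
        raise ValueError("adversarial_mask contains no altered pixels")
    overlap = np.logical_and(explanation_mask, adversarial_mask).sum()
    return float(overlap / adversarial_area)

# Toy data: four images, one label flip and one strong confidence drop.
score = impact_score(
    orig_labels=[1, 2, 3, 4],
    orig_conf=[0.9, 0.8, 0.7, 0.6],
    masked_labels=[1, 5, 3, 4],
    masked_conf=[0.85, 0.1, 0.3, 0.5],
)

# Toy masks: a 2x2 adversarial patch, half of which the explanation flags.
adversarial = np.zeros((4, 4), dtype=bool)
adversarial[:2, :2] = True
explanation = np.zeros((4, 4), dtype=bool)
explanation[0, :2] = True
explanation[3, 3] = True
coverage = impact_coverage(explanation, adversarial)
```

Under these assumptions, a higher Impact Score means the flagged regions matter more to the network's decision, which is the sense in which the abstract ranks GSInquire above LIME.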

MicronNet: A Highly Compact Deep Convolutional Neural Network Architecture for Real-time Embedded Traffic Sign Classification

Oct 03, 2018

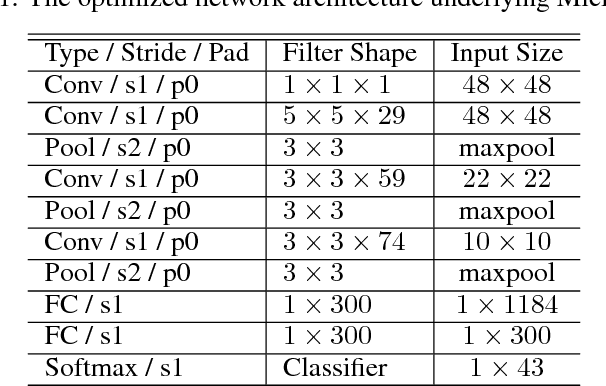

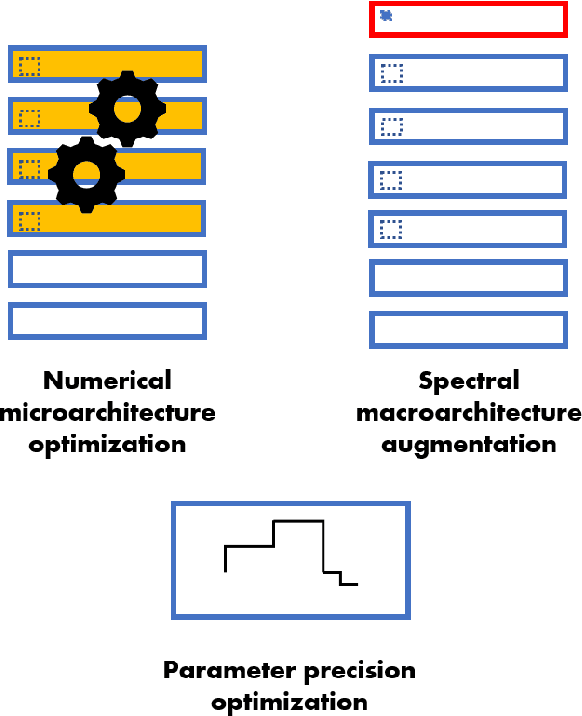

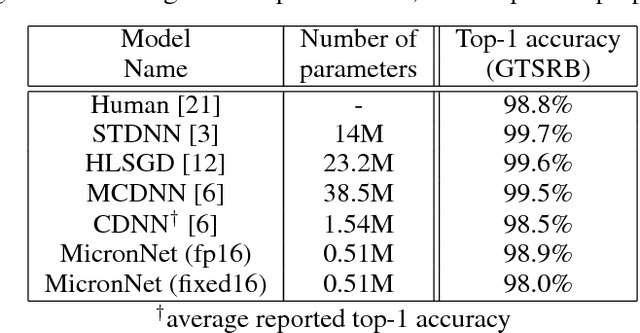

Abstract: Traffic sign recognition is a very important computer vision task for a number of real-world applications such as intelligent transportation surveillance and analysis. While deep neural networks have been demonstrated in recent years to provide state-of-the-art performance in traffic sign recognition, a key challenge for enabling the widespread deployment of deep neural networks for embedded traffic sign recognition is the high computational and memory requirements of such networks. As a consequence, there are significant benefits in investigating compact deep neural network architectures for traffic sign recognition that are better suited for embedded devices. In this paper, we introduce MicronNet, a highly compact deep convolutional neural network for real-time embedded traffic sign recognition designed based on macroarchitecture design principles (e.g., spectral macroarchitecture augmentation, parameter precision optimization, etc.) as well as numerical microarchitecture optimization strategies. The resulting overall architecture of MicronNet is thus designed with as few parameters and computations as possible while maintaining recognition performance, leading to optimized information density of the proposed network. The resulting MicronNet possesses a model size of just ~1MB and ~510,000 parameters (~27x fewer parameters than state-of-the-art) while still achieving a human performance level top-1 accuracy of 98.9% on the German traffic sign recognition benchmark. Furthermore, MicronNet requires just ~10 million multiply-accumulate operations to perform inference, and has a time-to-compute of just 32.19 ms on a Cortex-A53 high efficiency processor. These experimental results show that highly compact, optimized deep neural network architectures can be designed for real-time traffic sign recognition that are well-suited for embedded scenarios.
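As a rough sanity check on the figures quoted in the abstract, the arithmetic below derives a few implied quantities. Only the inputs come from the abstract. The derived values are back-of-the-envelope estimates, and treating 1 MB as 10^6 bytes is an assumption.

```python
# Figures quoted in the abstract (all approximate).
latency_ms = 32.19          # time-to-compute on a Cortex-A53
params = 510_000            # MicronNet parameter count
reduction_factor = 27       # "~27x fewer parameters than state-of-the-art"
model_size_bytes = 1e6      # "~1MB", assumed here to mean 10^6 bytes

# Frames per second if inference is the only per-frame cost.
frames_per_second = 1000.0 / latency_ms

# Parameter count implied for the state-of-the-art baseline.
implied_baseline_params = reduction_factor * params

# Average storage per parameter implied by the quoted model size.
bytes_per_param = model_size_bytes / params

print(f"{frames_per_second:.1f} frames/s")
print(f"{implied_baseline_params:,} baseline parameters (implied)")
print(f"{bytes_per_param:.2f} bytes per parameter (implied)")
```

The implied rate of about 31 frames per second fits the real-time claim, and roughly 2 bytes per parameter is what a reduced-precision weight format would give, though the abstract does not state the precision used.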
