Michael Matheson

Towards the Development of Entropy-Based Anomaly Detection in an Astrophysics Simulation

Sep 05, 2020

Abstract: The use of AI and ML for scientific applications is currently a very exciting and dynamic field. Much of this excitement for HPC has focused on ML applications whose analysis and classification generate very large numbers of flops. Others seek to replace scientific simulations with data-driven surrogate models. But another important use case lies in applying ML in combination with a simulation to improve the simulation's accuracy. To that end, we present an anomaly detection problem that arises from a core-collapse supernova simulation. We discuss strategies and early successes in applying anomaly detection techniques from machine learning to this scientific simulation, as well as current challenges and future possibilities.

Exascale Deep Learning for Scientific Inverse Problems

Sep 24, 2019

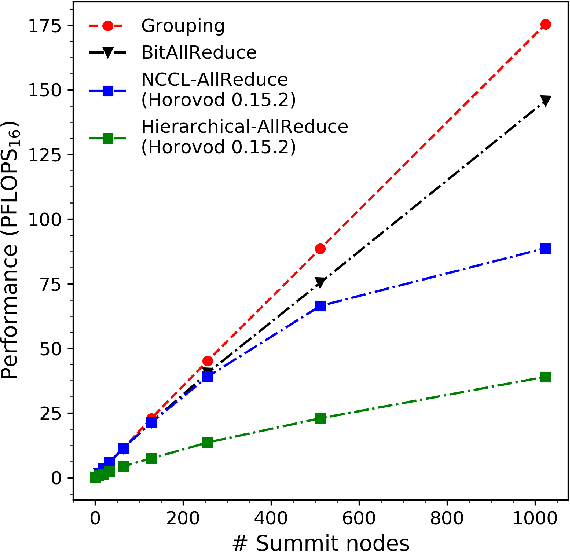

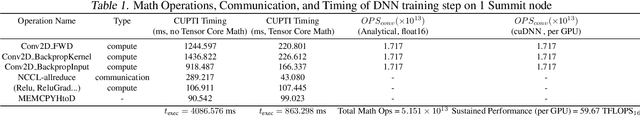

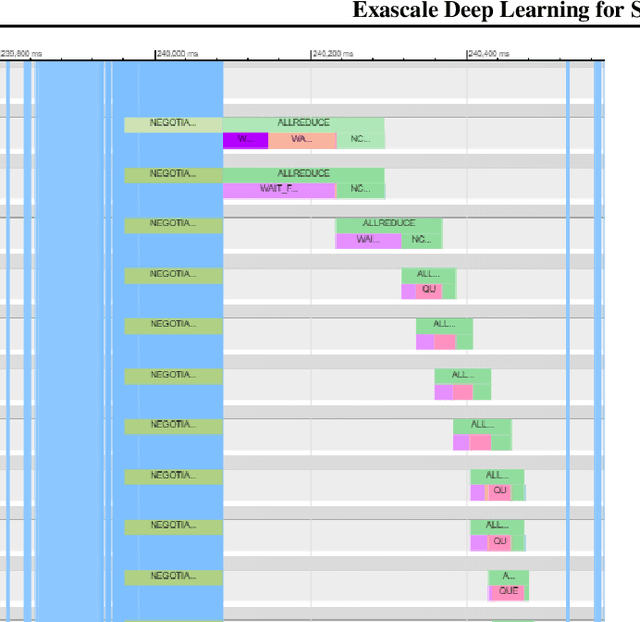

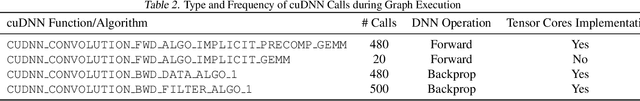

Abstract: We introduce novel communication strategies in synchronous distributed Deep Learning consisting of decentralized gradient reduction orchestration and computational graph-aware grouping of gradient tensors. These new techniques produce an optimal overlap between computation and communication and result in near-linear scaling (0.93) of distributed training up to 27,600 NVIDIA V100 GPUs on the Summit supercomputer. We demonstrate our gradient reduction techniques in the context of training a Fully Convolutional Neural Network to approximate the solution of a longstanding scientific inverse problem in materials imaging. Efficient distributed training on a 0.5 PB dataset produces a model capable of atomically accurate reconstruction of materials and, in the process, reaches a peak performance of 2.15(4) EFLOPS$_{16}$.
