Miaolan Xie

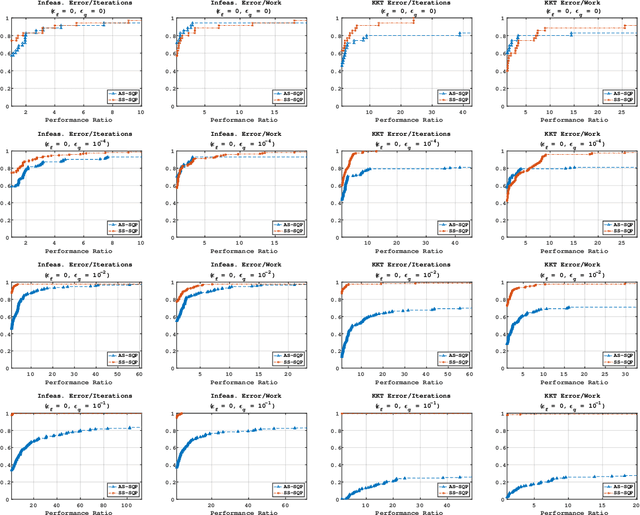

A Sequential Quadratic Programming Method with High Probability Complexity Bounds for Nonlinear Equality Constrained Stochastic Optimization

Jan 01, 2023

Abstract: A step-search sequential quadratic programming method is proposed for solving nonlinear equality constrained stochastic optimization problems. It is assumed that constraint function values and derivatives are available, but only stochastic approximations of the objective function and its associated derivatives can be computed via inexact probabilistic zeroth- and first-order oracles. Under reasonable assumptions, a high-probability bound on the iteration complexity of the algorithm to approximate first-order stationarity is derived. Numerical results on standard nonlinear optimization test problems illustrate the advantages and limitations of our proposed method.
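For orientation, the problem class and a generic SQP step can be written as follows. This is a textbook-style sketch, not the paper's exact algorithm: the symbols $g_k$ (a stochastic estimate of $\nabla f(x_k)$), $H_k$ (a symmetric Hessian approximation), $J_k$ (the constraint Jacobian at $x_k$), $y_k$ (multiplier estimate), and the tolerance $\varepsilon$ are notational assumptions introduced here, and the paper's precise stationarity measure may differ.

$$
\min_{x \in \mathbb{R}^n} f(x) \quad \text{subject to} \quad c(x) = 0, \qquad c : \mathbb{R}^n \to \mathbb{R}^m
$$

$$
\begin{bmatrix} H_k & J_k^{T} \\ J_k & 0 \end{bmatrix}
\begin{bmatrix} d_k \\ y_k \end{bmatrix}
= -\begin{bmatrix} g_k \\ c(x_k) \end{bmatrix}
$$

$$
\|\nabla f(x) + J(x)^{T} y\| \le \varepsilon \quad \text{and} \quad \|c(x)\| \le \varepsilon
$$

The first line is the equality constrained problem, the second is the linear system whose solution gives the search direction $d_k$, and the third is one common way to state approximate first-order stationarity.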

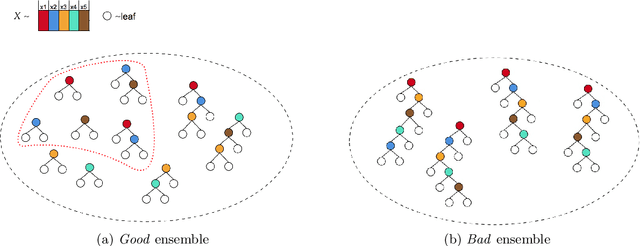

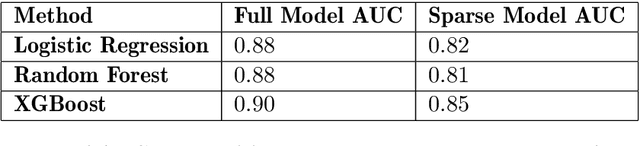

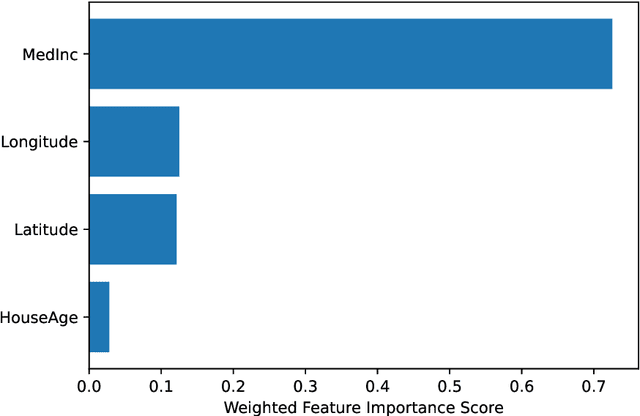

ControlBurn: Nonlinear Feature Selection with Sparse Tree Ensembles

Jul 08, 2022

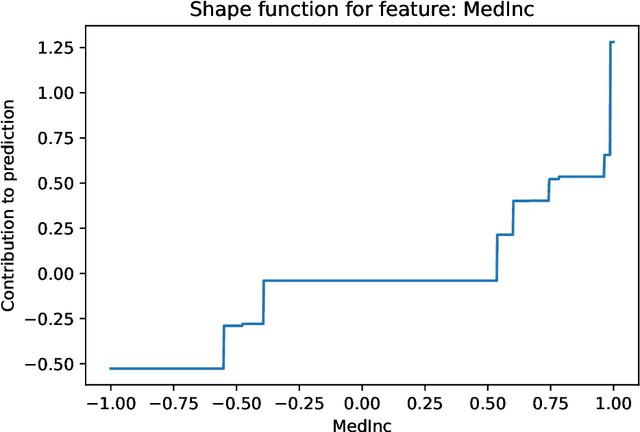

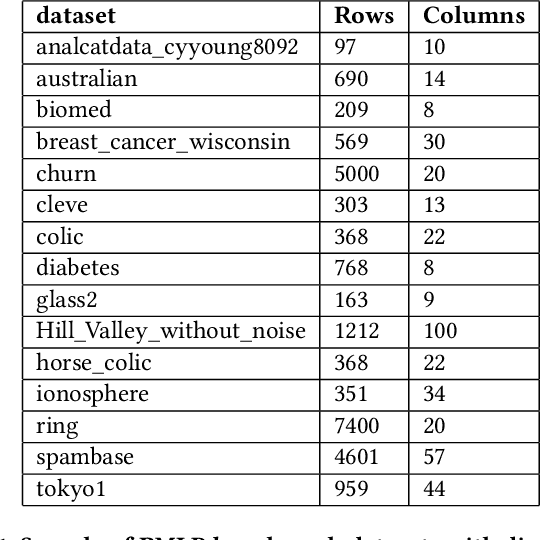

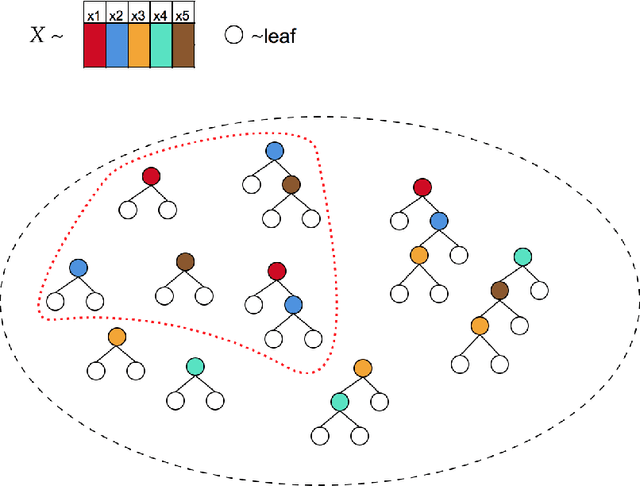

Abstract: ControlBurn is a Python package to construct feature-sparse tree ensembles that support nonlinear feature selection and interpretable machine learning. The algorithms in this package first build large tree ensembles that prioritize basis functions with few features and then select a feature-sparse subset of these basis functions using a weighted lasso optimization criterion. The package includes visualizations to analyze the features selected by the ensemble and their impact on predictions. Hence ControlBurn offers the accuracy and flexibility of tree-ensemble models and the interpretability of sparse generalized additive models. ControlBurn is scalable and flexible: for example, it can use warm-start continuation to compute the regularization path (prediction error for any number of selected features) for a dataset with tens of thousands of samples and hundreds of features in seconds. For larger datasets, the runtime scales linearly in the number of samples and features (up to a log factor), and the package supports acceleration using sketching. Moreover, the ControlBurn framework accommodates feature costs, feature groupings, and $\ell_0$-based regularizers. The package is user-friendly and open-source: its documentation and source code appear on https://pypi.org/project/ControlBurn/ and https://github.com/udellgroup/controlburn/.

ControlBurn: Feature Selection by Sparse Forests

Jul 01, 2021

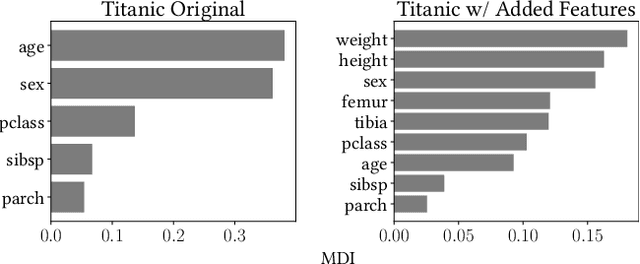

Abstract: Tree ensembles distribute feature importance evenly amongst groups of correlated features. The average feature ranking of the correlated group is suppressed, which reduces interpretability and complicates feature selection. In this paper we present ControlBurn, a feature selection algorithm that uses a weighted LASSO-based feature selection method to prune unnecessary features from tree ensembles, just as low-intensity fire reduces overgrown vegetation. Like the linear LASSO, ControlBurn assigns all the feature importance of a correlated group of features to a single feature. Moreover, the algorithm is efficient and only requires a single training iteration to run, unlike iterative wrapper-based feature selection methods. We show that ControlBurn performs substantially better than feature selection methods with comparable computational costs on datasets with correlated features.
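The pruning idea in this abstract can be illustrated with a small, dependency-free sketch. It does not use the ControlBurn package or its API. It assumes that each tree is summarized by its vector of predictions and by the set of features it splits on, that a tree's lasso cost is the number of features it uses, and that the weights are found by plain coordinate descent. The names `weighted_nonneg_lasso`, `selected_features`, and the toy data are all hypothetical.

```python
def weighted_nonneg_lasso(columns, y, costs, alpha, sweeps=100):
    """Coordinate descent for the (assumed) weighted lasso objective

        min_w (1/N) * ||y - sum_j w_j * columns[j]||^2
              + alpha * sum_j costs[j] * w_j,   subject to w >= 0.

    columns[j] holds the predictions of tree j on the N training samples.
    """
    n = len(y)
    w = [0.0] * len(columns)
    residual = list(y)  # equals y - A w; w starts at zero
    for _ in range(sweeps):
        for j, col in enumerate(columns):
            norm_sq = sum(a * a for a in col)
            if norm_sq == 0.0:
                continue  # a column of zeros can never help
            # Inner product of column j with the partial residual,
            # i.e. the residual with tree j's own contribution added back.
            rho = sum(a * (r + a * w[j]) for a, r in zip(col, residual))
            # Closed-form one-dimensional minimizer, clipped at zero.
            new_wj = max(0.0, (rho - alpha * costs[j] * n / 2.0) / norm_sq)
            delta = new_wj - w[j]
            if delta != 0.0:
                residual = [r - a * delta for a, r in zip(col, residual)]
                w[j] = new_wj
    return w


def selected_features(weights, tree_features):
    """Union of the features used by trees that kept a positive weight."""
    chosen = set()
    for w_j, feats in zip(weights, tree_features):
        if w_j > 0.0:
            chosen |= set(feats)
    return sorted(chosen)


# Toy ensemble (hypothetical data): trees 0 and 1 make identical predictions,
# but tree 1 also splits on a redundant second feature; tree 2 is weak.
tree_predictions = [[1, 1, 0, 0], [1, 1, 0, 0], [0, 0, 1, 1]]
tree_features = [{0}, {0, 1}, {2}]
costs = [len(f) for f in tree_features]  # cost = number of features used
y = [2.0, 2.0, 0.1, 0.1]

weights = weighted_nonneg_lasso(tree_predictions, y, costs, alpha=0.5)
print(weights, selected_features(weights, tree_features))
```

In this toy case only the single-feature tree keeps a nonzero weight, so feature 0 alone is selected: the costlier duplicate tree and the weak tree are both pruned by the penalty.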
