Meysam Sadeghi

Downlink Power Allocation in Massive MIMO via Deep Learning: Adversarial Attacks and Training

Jun 14, 2022

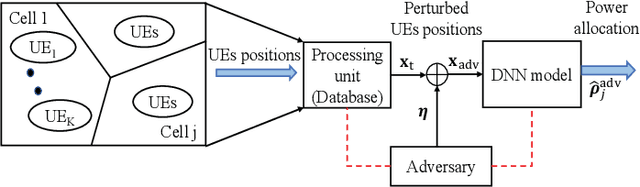

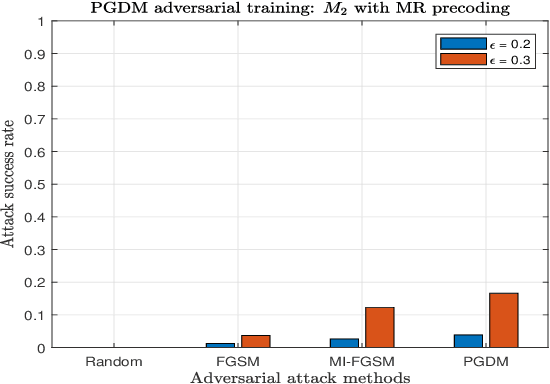

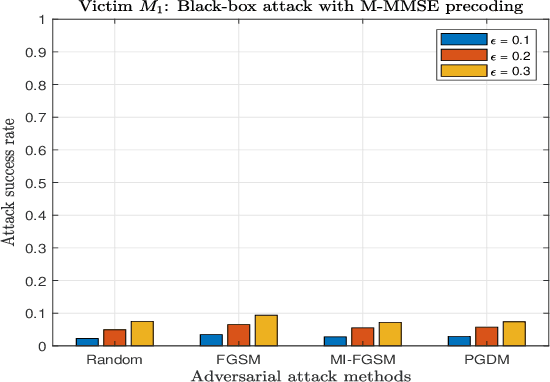

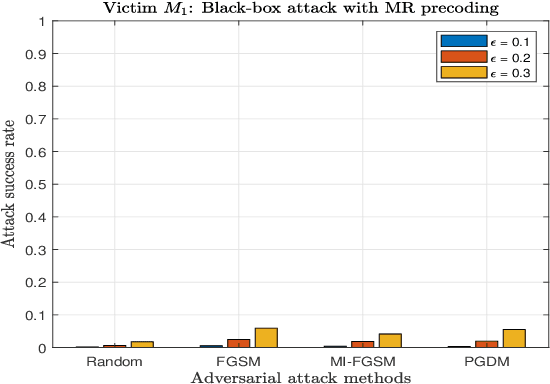

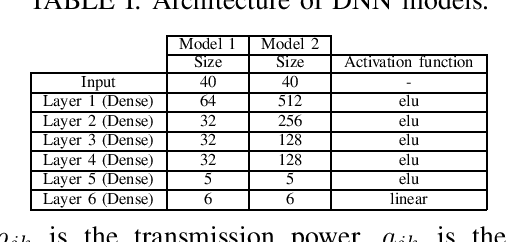

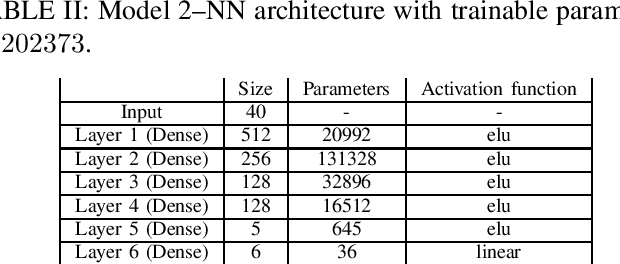

Abstract: The successful emergence of deep learning (DL) in wireless system applications has raised concerns about new security-related challenges. One such security challenge is adversarial attacks. Although much work has demonstrated the susceptibility of DL-based classification tasks to adversarial attacks, regression-based problems in the context of a wireless system have not been studied so far from an attack perspective. The aim of this paper is twofold: (i) we consider a regression problem in a wireless setting and show that adversarial attacks can break the DL-based approach, and (ii) we analyze the effectiveness of adversarial training as a defensive technique in adversarial settings and show that the robustness of DL-based wireless systems against attacks improves significantly. Specifically, the wireless application considered in this paper is DL-based power allocation in the downlink of a multicell massive multi-input-multi-output system, where the goal of the attack is to make the DL model output an infeasible solution. We extend the gradient-based adversarial attacks (fast gradient sign method (FGSM), momentum iterative FGSM, and projected gradient descent) to analyze the susceptibility of the considered wireless application with and without adversarial training. We analyze the deep neural network (DNN) models' performance against these attacks, where the adversarial perturbations are crafted using both white-box and black-box attacks.

MRT-based Joint Unicast and Multigroup Multicast Transmission in Massive MIMO Systems

Dec 31, 2021

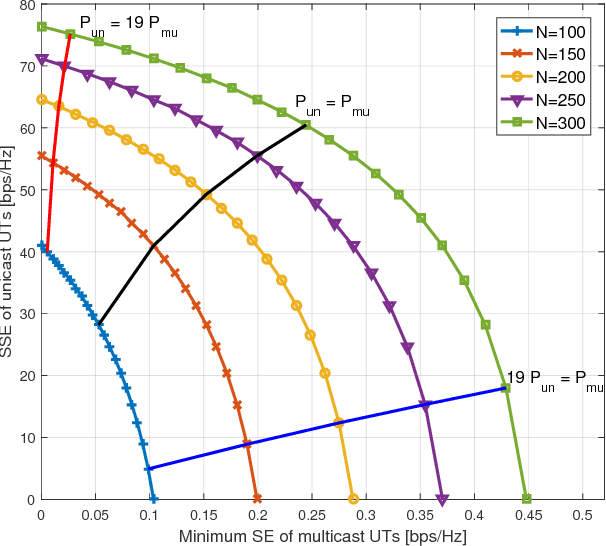

Abstract: We study joint unicast and multigroup multicast transmission in single-cell massive multiple-input-multiple-output (MIMO) systems under maximum ratio transmission. For the unicast transmission, the objective is to maximize the weighted sum spectral efficiency (SE) of the unicast user terminals (UTs), and for the multicast transmission, the objective is to maximize the minimum SE of the multicast UTs. These two problems conflict with each other because they share the power resource and cause mutual interference. To address this, we formulate a multiobjective optimization problem (MOOP). We derive the Pareto boundary of the MOOP analytically and determine the values of the system parameters that achieve any desired Pareto optimal point. Moreover, we prove that the Pareto region is convex; hence, the system should serve the unicast and multicast UTs on the same time-frequency resource.

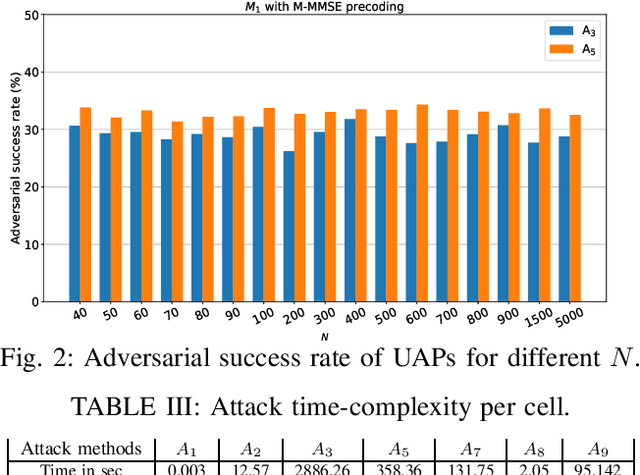

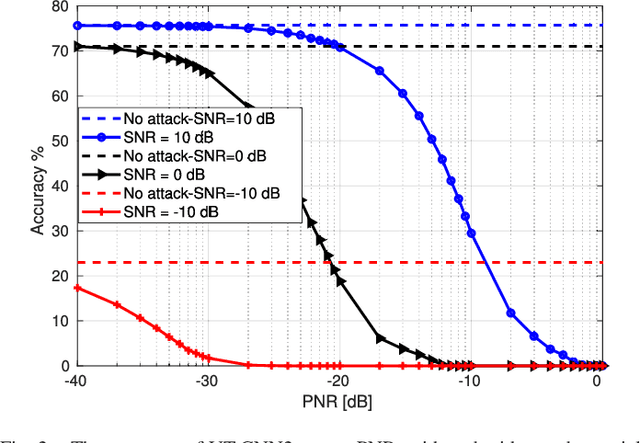

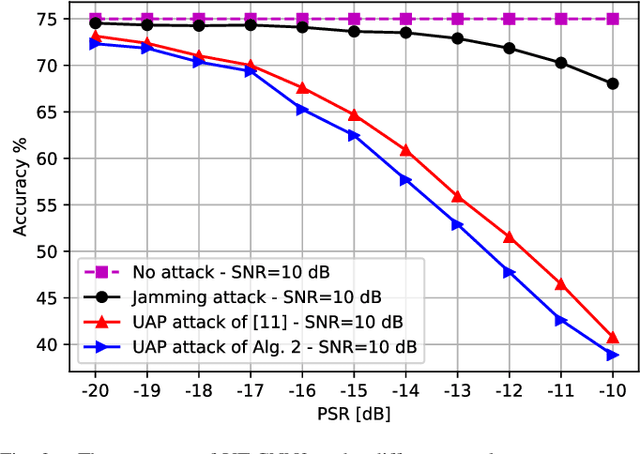

Universal Adversarial Attacks on Neural Networks for Power Allocation in a Massive MIMO System

Oct 10, 2021

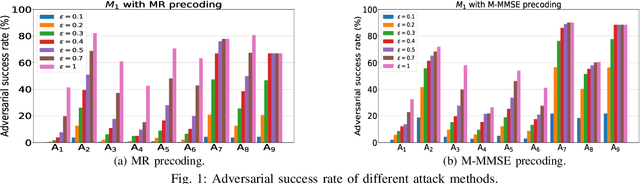

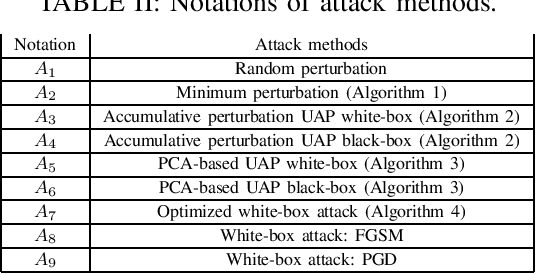

Abstract: Deep learning (DL) architectures have been successfully used in many applications, including wireless systems. However, they have been shown to be susceptible to adversarial attacks. We analyze DL-based models for a regression problem in the context of downlink power allocation in massive multiple-input-multiple-output systems and propose universal adversarial perturbation (UAP)-crafting methods as white-box and black-box attacks. We benchmark the UAP performance of white-box and black-box attacks for the considered application and show that the adversarial success rate can reach up to 60% and 40%, respectively. The proposed UAP-based attacks are more practical and realistic than classical white-box attacks.
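The idea of a universal perturbation (one input-independent perturbation applied to every sample) can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the toy quadratic model, the names `predict`, `input_gradient`, `craft_uap`, and `success_rate`, and the averaged-gradient heuristic itself. It is not the UAP-crafting method proposed in the paper, and the numbers are unrelated to the reported 60% and 40%.

```python
def sign(v):
    """Return -1, 0, or 1 according to the sign of v."""
    return (v > 0) - (v < 0)

def predict(weights, x):
    """Toy regression model (assumption): total power = sum_i w_i * x_i^2."""
    return sum(w * xi * xi for w, xi in zip(weights, x))

def input_gradient(weights, x):
    """Gradient of the toy model output with respect to the input x."""
    return [2.0 * w * xi for w, xi in zip(weights, x)]

def craft_uap(weights, samples, eps):
    """One shared perturbation: eps * sign of the gradient averaged over samples."""
    dim = len(samples[0])
    mean_grad = [0.0] * dim
    for x in samples:
        for i, g in enumerate(input_gradient(weights, x)):
            mean_grad[i] += g / len(samples)
    return [eps * sign(g) for g in mean_grad]

def success_rate(weights, samples, delta, p_max):
    """Fraction of samples whose perturbed output exceeds the power budget."""
    fooled = 0
    for x in samples:
        x_adv = [xi + d for xi, d in zip(x, delta)]
        if predict(weights, x_adv) > p_max:
            fooled += 1
    return fooled / len(samples)

# Hypothetical data: all three clean samples are feasible under p_max.
weights = [1.0, 0.5]
samples = [[0.5, 0.5], [0.25, 1.0], [1.0, 0.5]]
p_max = 1.25

delta = craft_uap(weights, samples, eps=0.25)
rate = success_rate(weights, samples, delta, p_max)
print(delta, rate)
```

Because the same `delta` is reused for every sample, the attacker does not need to compute a fresh gradient per input, which is the sense in which a universal perturbation is more practical than a per-sample white-box attack.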

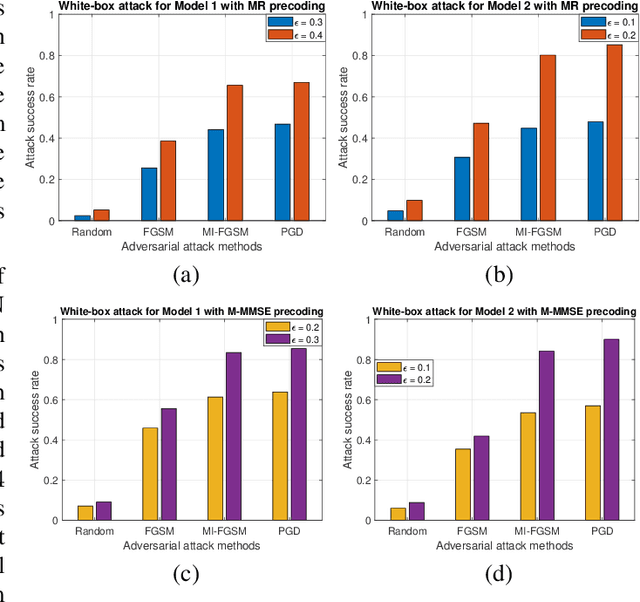

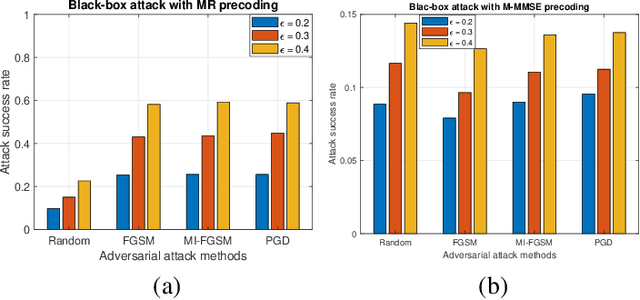

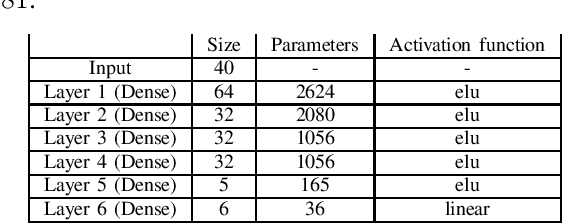

Adversarial Attacks on Deep Learning Based Power Allocation in a Massive MIMO Network

Jan 28, 2021

Abstract: Deep learning (DL) is becoming popular as a new tool for many applications in wireless communication systems. However, for many classification tasks (e.g., modulation classification), it has been shown that DL-based wireless systems are susceptible to adversarial examples, which are well-crafted malicious inputs to the neural network (NN) designed to cause erroneous outputs. In this paper, we extend this to regression problems and show that adversarial attacks can break DL-based power allocation in the downlink of a massive multiple-input-multiple-output (maMIMO) network. Specifically, we extend the fast gradient sign method (FGSM), momentum iterative FGSM, and projected gradient descent adversarial attacks to the context of power allocation in a maMIMO system. We benchmark the performance of these attacks and show that, with a small perturbation in the input of the NN, the white-box attacks can yield infeasible solutions in up to 86% of cases. Furthermore, we investigate the performance of black-box attacks. All the evaluations conducted in this work are based on an open dataset and NN models, which are publicly available.
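As a rough illustration of the gradient-based attacks named above, the following sketch applies FGSM and projected gradient descent (PGD) to a toy linear regression model whose output stands in for total allocated power. The model, the power budget `p_max`, and all function names are hypothetical; the paper attacks trained neural networks on an open dataset, which this sketch does not reproduce.

```python
def sign(v):
    """Return -1, 0, or 1 according to the sign of v."""
    return (v > 0) - (v < 0)

def predict(weights, x):
    """Toy linear model (assumption): predicted total power = w . x."""
    return sum(w * xi for w, xi in zip(weights, x))

def fgsm(weights, x, eps):
    """One-step FGSM: move each input by eps along the gradient sign.

    For a linear model the gradient of the output w.r.t. x is the weights.
    The attack increases the output to push it past the power budget.
    """
    grad = weights
    return [xi + eps * sign(g) for xi, g in zip(x, grad)]

def pgd(weights, x, eps, step, iters):
    """Iterative variant: small signed steps, each followed by projection
    back onto the L-infinity ball of radius eps around the clean input."""
    x_adv = list(x)
    for _ in range(iters):
        grad = weights
        x_adv = [xa + step * sign(g) for xa, g in zip(x_adv, grad)]
        x_adv = [min(max(xa, xi - eps), xi + eps) for xa, xi in zip(x_adv, x)]
    return x_adv

# Hypothetical numbers: the clean prediction respects the budget p_max.
weights = [0.5, -0.25, 0.25]
x = [1.0, 2.0, 1.0]
p_max = 0.5
eps = 0.5

clean_power = predict(weights, x)
x_fgsm = fgsm(weights, x, eps)
x_pgd = pgd(weights, x, eps, step=0.125, iters=8)

print(clean_power <= p_max)               # clean output is feasible
print(predict(weights, x_fgsm) > p_max)   # perturbed output violates the budget
```

On a linear model the two attacks coincide once PGD reaches the boundary of the perturbation ball; on a nonlinear network the iterative attack re-evaluates the gradient at every step and is typically stronger.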

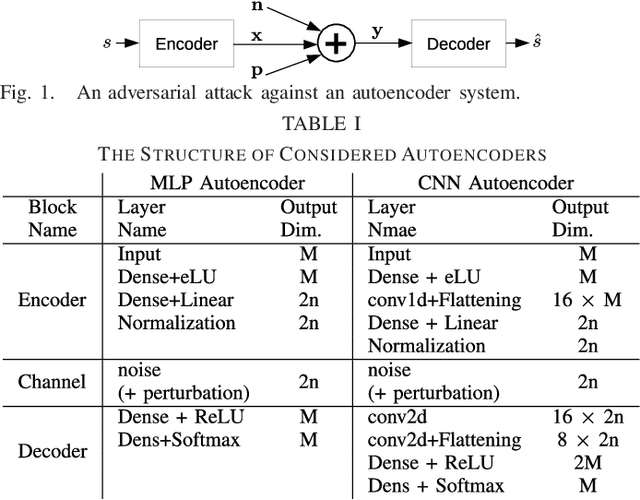

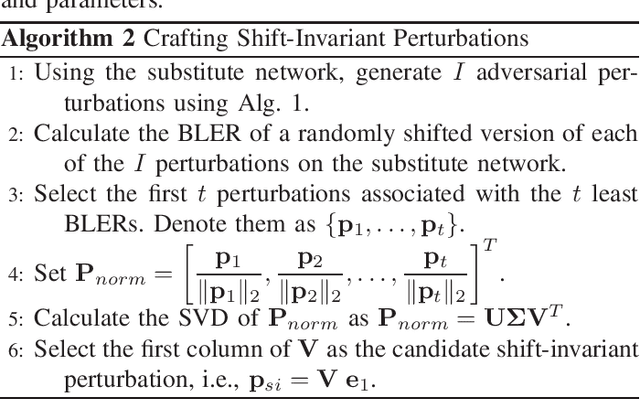

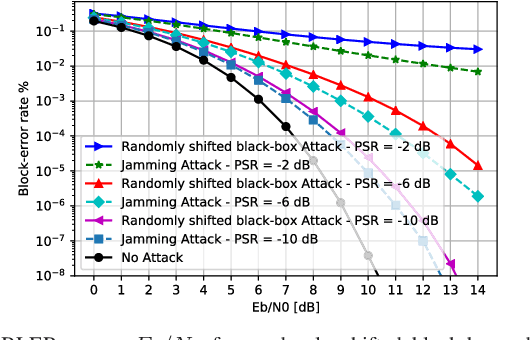

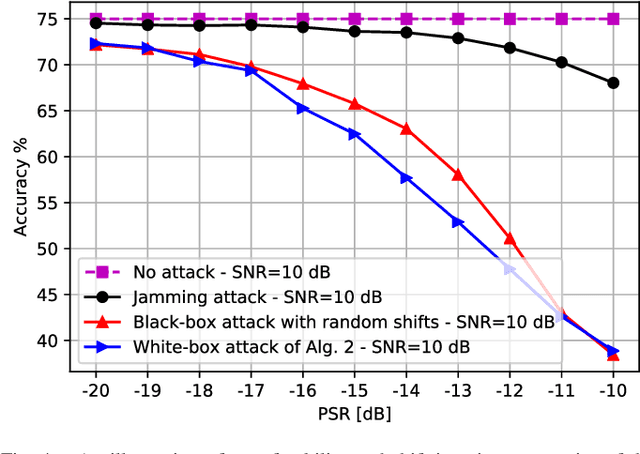

Physical Adversarial Attacks Against End-to-End Autoencoder Communication Systems

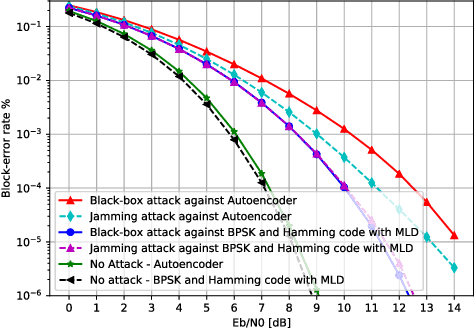

Feb 22, 2019

Abstract: We show that end-to-end learning of communication systems through deep neural network (DNN) autoencoders can be extremely vulnerable to physical adversarial attacks. Specifically, we elaborate on how an attacker can craft effective physical black-box adversarial attacks. Due to the openness (broadcast nature) of the wireless channel, an adversarial transmitter can increase the block-error-rate of a communication system by orders of magnitude by transmitting a well-designed perturbation signal over the channel. We reveal that the adversarial attacks are more destructive than jamming attacks. We also show that classical coding schemes are more robust than autoencoders against both adversarial and jamming attacks. The code is available at [1].

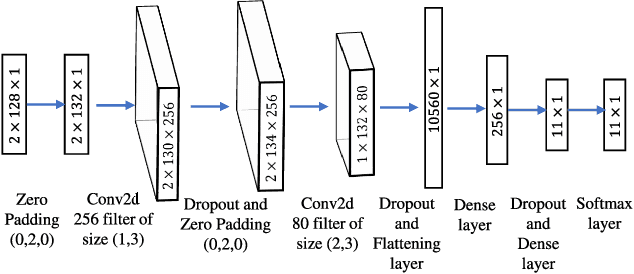

Adversarial Attacks on Deep-Learning Based Radio Signal Classification

Aug 23, 2018

Abstract: Deep learning (DL), despite its enormous success in many computer vision and language processing applications, is exceedingly vulnerable to adversarial attacks. We consider the use of DL for radio signal (modulation) classification tasks and present practical methods for crafting white-box and universal black-box adversarial attacks in that application. We show that these attacks can considerably reduce the classification performance with extremely small perturbations of the input. In particular, these attacks are significantly more powerful than classical jamming attacks, which raises significant security and robustness concerns about the use of DL-based algorithms for the wireless physical layer.
