Mehregan Dor

Initialization of Monocular Visual Navigation for Autonomous Agents Using Modified Structure from Small Motion

Sep 24, 2024

Abstract: We propose a standalone monocular visual Simultaneous Localization and Mapping (vSLAM) initialization pipeline for autonomous robots in space. Our method, a state-of-the-art factor graph optimization pipeline, enhances classical Structure from Small Motion (SfSM) to robustly initialize a monocular agent in weak-perspective projection scenes. Furthermore, it overcomes visual estimation challenges introduced by spacecraft inspection trajectories, such as: center-pointing motion, which exacerbates the bas-relief ambiguity, and the presence of a dominant plane in the scene, which causes motion estimation degeneracies in classical Structure from Motion (SfM). We validate our method on realistic, simulated satellite inspection images exhibiting weak-perspective projection, and we demonstrate its effectiveness and improved performance compared to other monocular initialization procedures.

AstroSLAM: Autonomous Monocular Navigation in the Vicinity of a Celestial Small Body -- Theory and Experiments

Dec 01, 2022

Abstract: We propose AstroSLAM, a standalone vision-based solution for autonomous online navigation around an unknown target small celestial body. AstroSLAM is predicated on the formulation of the SLAM problem as an incrementally growing factor graph, facilitated by the use of the GTSAM library and the iSAM2 engine. By combining sensor fusion with orbital motion priors, we achieve improved performance over a baseline SLAM solution. We incorporate orbital motion constraints into the factor graph by devising a novel relative dynamics factor, which links the relative pose of the spacecraft to the problem of predicting trajectories stemming from the motion of the spacecraft in the vicinity of the small body. We demonstrate the excellent performance of AstroSLAM using both real legacy mission imagery and trajectory data courtesy of NASA's Planetary Data System, as well as real in-lab imagery data generated on a 3 degree-of-freedom spacecraft simulator test-bed.

AstroVision: Towards Autonomous Feature Detection and Description for Missions to Small Bodies Using Deep Learning

Aug 03, 2022

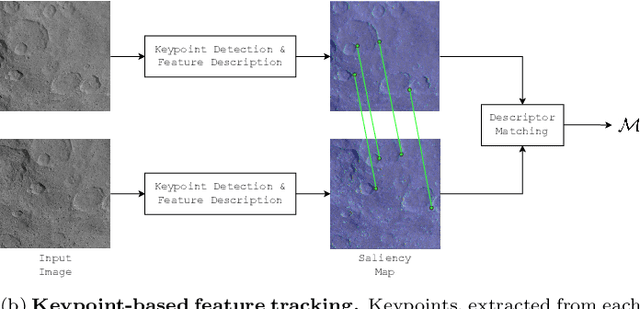

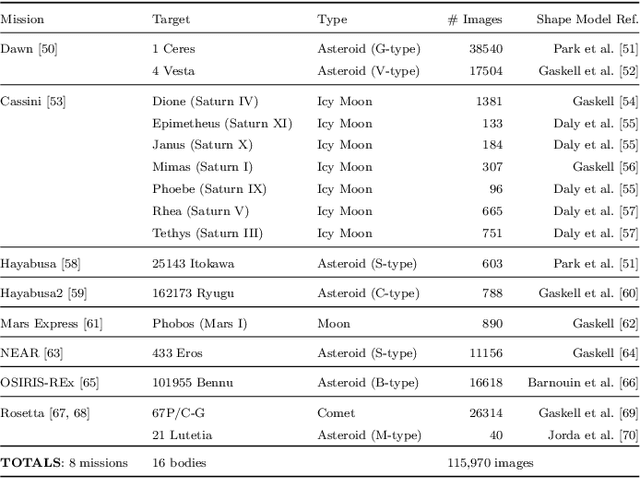

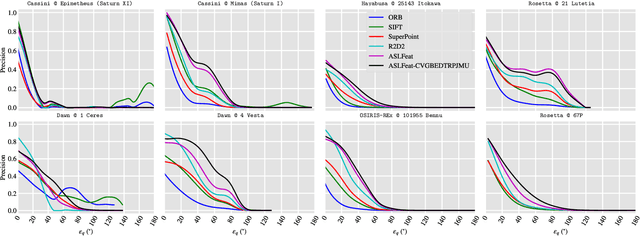

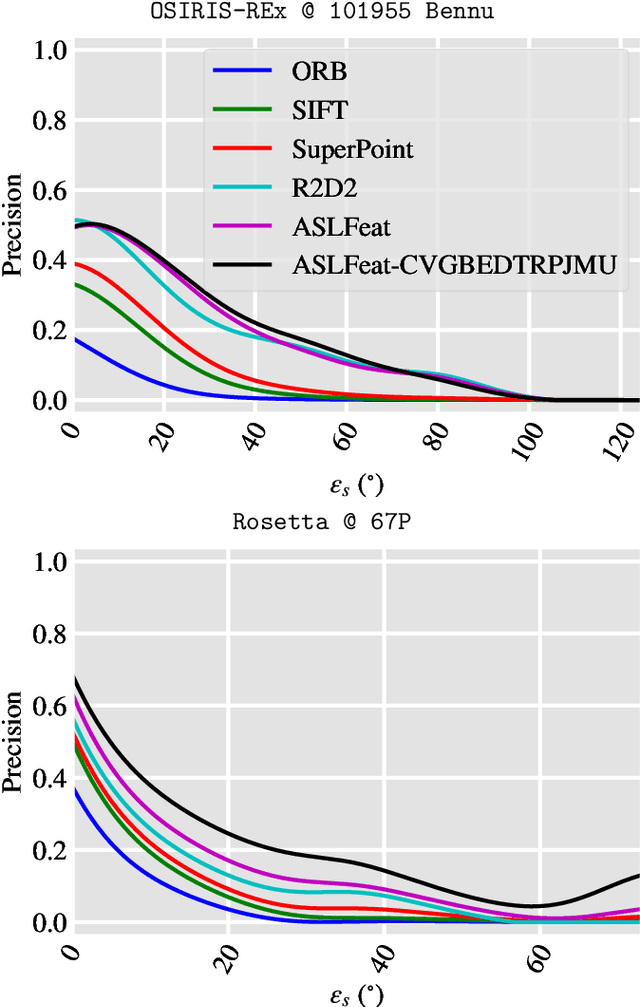

Abstract: Missions to small celestial bodies rely heavily on optical feature tracking for characterization of and relative navigation around the target body. While deep learning has led to great advancements in feature detection and description, training and validating data-driven models for space applications is challenging due to the limited availability of large-scale, annotated datasets. This paper introduces AstroVision, a large-scale dataset comprised of 115,970 densely annotated, real images of 16 different small bodies captured during past and ongoing missions. We leverage AstroVision to develop a set of standardized benchmarks and conduct an exhaustive evaluation of both handcrafted and data-driven feature detection and description methods. Next, we employ AstroVision for end-to-end training of a state-of-the-art, deep feature detection and description network and demonstrate improved performance on multiple benchmarks. The full benchmarking pipeline and the dataset will be made publicly available to facilitate the advancement of computer vision algorithms for space applications.
