Mehdi Rahimi

Printed Texts Tracking and Following for a Finger-Wearable Electro-Braille System Through Opto-electrotactile Feedback

Aug 06, 2021

Abstract: This paper presents our recent development of a portable and refreshable text reading and sensory substitution system for the blind or visually impaired (BVI), called Finger-eye. The system mainly consists of an opto-text processing unit and a compact electro-tactile display that delivers text-related electrical signals to the fingertip skin through a wearable, Braille-dot patterned electrode array, producing electro-stimulation based Braille touch sensations at the fingertip. To help BVI users read any text not written in Braille with this portable system, a Rapid Optical Character Recognition (R-OCR) method is first developed for real-time processing of text information, based on a fisheye imaging device mounted on the finger-wearable electro-tactile display. This allows real-time translation of printed text to electro-Braille following the natural movement of the user's fingertip, as if reading a Braille display or book. More importantly, an electro-tactile neuro-stimulation feedback mechanism is proposed and incorporated with the R-OCR method, enabling a new opto-electrotactile feedback based text line tracking control approach that lets the user's fingertip follow the text line during reading. Multiple experiments were designed and conducted to test the ability of blindfolded participants to read through and follow a text line using the opto-electrotactile feedback method. The experiments show that, as a result of the opto-electrotactile feedback, users were able to keep their fingertip within 2 mm of the text while scanning a text line. This research is a significant step toward giving BVI users a portable means to follow any printed text and translate it to Braille, whether in the digital realm or physically, on any surface.

A Comparison of Various Approaches to Reinforcement Learning Algorithms for Multi-robot Box Pushing

Sep 21, 2018

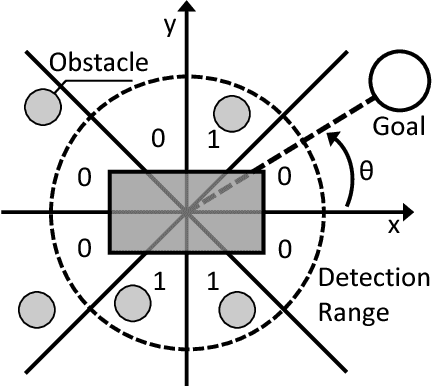

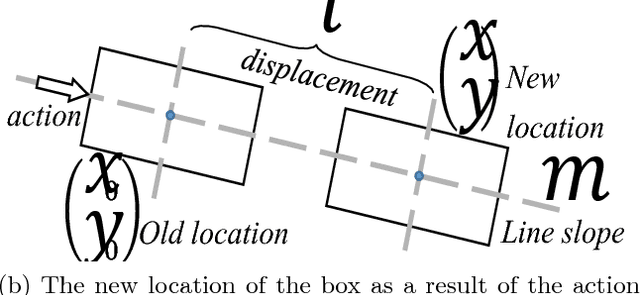

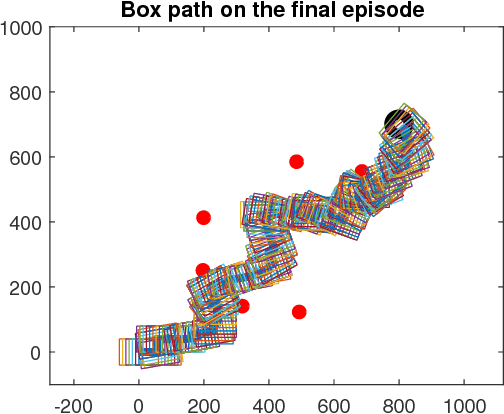

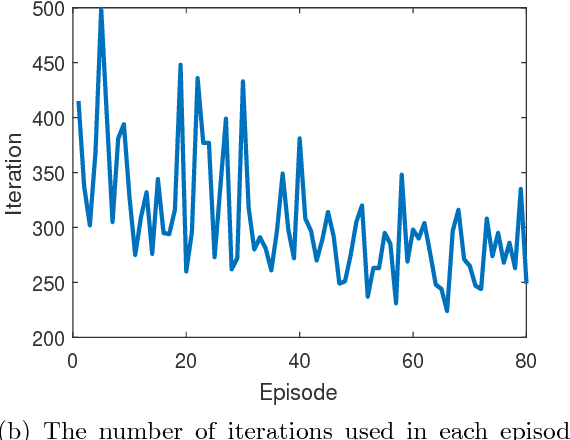

Abstract: This paper compares reinforcement learning algorithms and their performance on a robot box pushing task. The robot box pushing problem is structured as both a single-agent problem and a multi-agent problem. A Q-learning algorithm is applied to the single-agent box pushing problem, and three different Q-learning algorithms are applied to the multi-agent box pushing problem. Both sets of algorithms are applied to a dynamic environment composed of static objects, a static goal location, a dynamic box location, and dynamic agent positions. A simulation environment is developed to test the four algorithms, and their performance is compared through graphical explanations of the test results. The comparison shows that the newly applied reinforcement learning algorithm outperforms the previously applied algorithms on the robot box pushing problem in a dynamic environment.
