Mehadi Hassen

Task Selection for AutoML System Evaluation

Aug 26, 2022

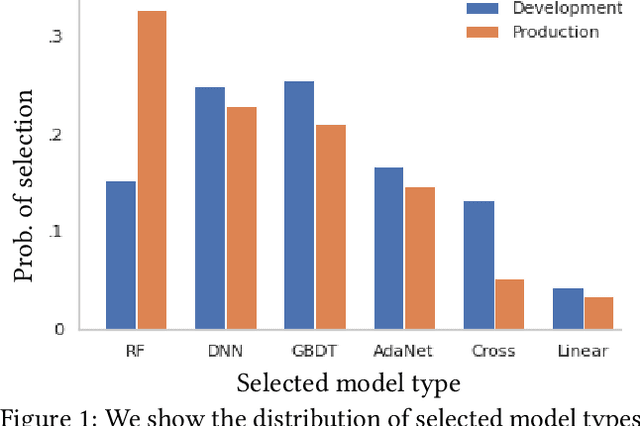

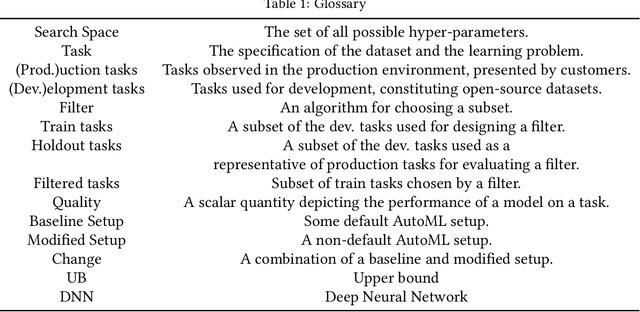

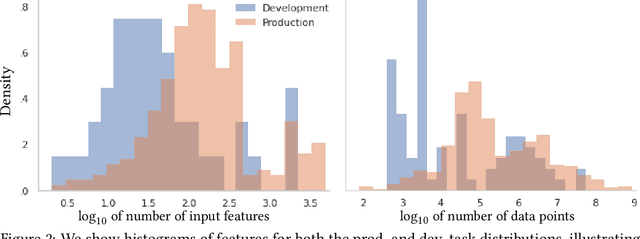

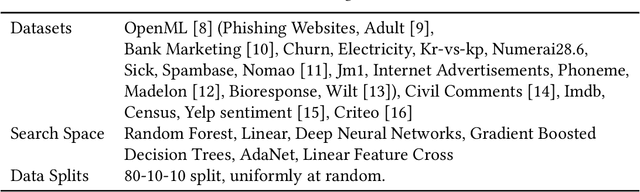

Abstract: Our goal is to assess whether changes to an AutoML system (e.g., to the search space or the hyperparameter optimization) will improve the final model's performance on production tasks. However, we cannot test the changes on production tasks directly. Instead, we only have access to limited descriptors of tasks that our AutoML system previously executed, such as the number of data points or features. We also have a set of development tasks on which to test changes, e.g., tasks sampled from OpenML with no usage constraints. However, the development and production task distributions differ, which can lead us to pursue changes that improve performance only on development tasks and not in production. This paper proposes a method that leverages descriptor information about AutoML production tasks to select a filtered subset of the most relevant development tasks. Empirical studies show that our filtering strategy improves the ability to assess AutoML system changes on holdout tasks whose distribution differs from that of the development tasks.
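To make the idea of descriptor-based filtering concrete, here is a minimal sketch. It is not the paper's method: the nearest-neighbor rule, the log scaling, the function name `select_relevant_tasks`, and all task names and sizes are assumptions chosen for illustration. It keeps the development tasks whose descriptors (number of rows, number of features) lie closest to any production descriptor.

```python
import math


def select_relevant_tasks(dev_tasks, prod_descriptors, k):
    """Return the names of the k development tasks nearest to production.

    dev_tasks: dict mapping task name -> (n_rows, n_features)
    prod_descriptors: list of (n_rows, n_features) tuples
    Hypothetical helper; the selection rule is an assumption, not the paper's.
    """
    def to_log(descriptor):
        # Compare sizes on a log10 scale so ratios matter, not absolute gaps.
        return tuple(math.log10(v) for v in descriptor)

    prod = [to_log(d) for d in prod_descriptors]

    def nearest_prod_distance(descriptor):
        # Euclidean distance to the closest production descriptor.
        point = to_log(descriptor)
        return min(math.dist(point, p) for p in prod)

    ranked = sorted(dev_tasks, key=lambda name: nearest_prod_distance(dev_tasks[name]))
    return ranked[:k]


# Made-up descriptors, for illustration only.
dev = {
    "small_tabular": (500, 10),
    "medium_tabular": (20_000, 50),
    "wide_genomics": (200, 20_000),
    "large_tabular": (1_000_000, 100),
}
prod = [(15_000, 40), (30_000, 80), (800_000, 120)]

selected = select_relevant_tasks(dev, prod, k=2)
print(selected)
```

In this toy setup, the two development tasks whose sizes resemble the production descriptors are kept, while the very small and very wide tasks are filtered out.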

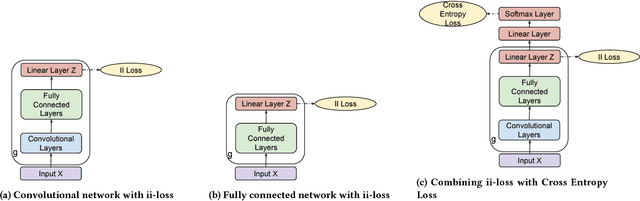

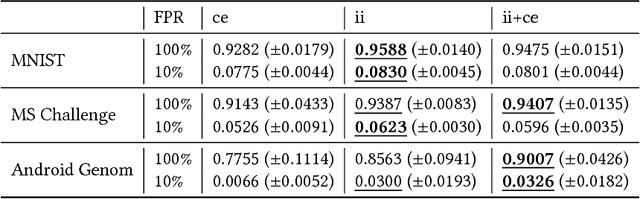

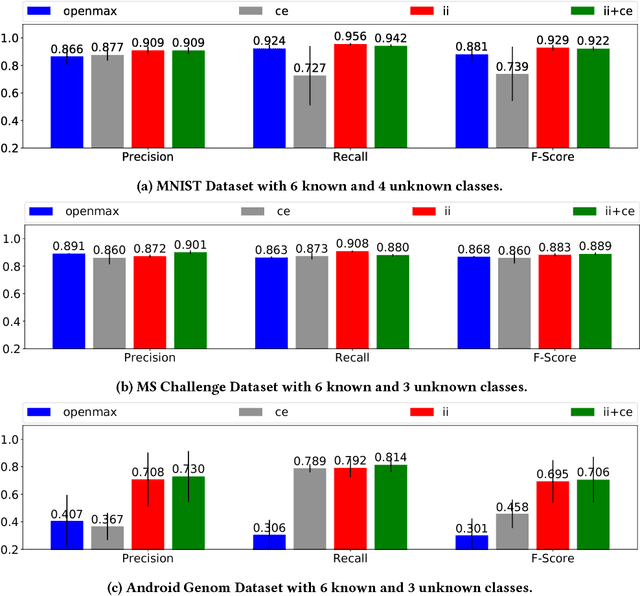

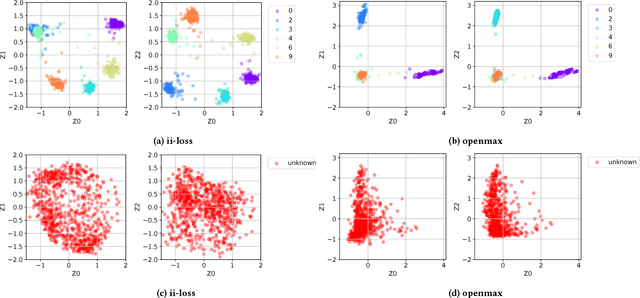

Learning a Neural-network-based Representation for Open Set Recognition

Feb 12, 2018

Abstract: Open set recognition problems exist in many domains. For example, in security, new malware classes emerge regularly; therefore, malware classification systems need to identify instances from unknown classes in addition to discriminating between known classes. In this paper, we present a neural-network-based representation for addressing the open set recognition problem. In this representation, instances from the same class are close to each other while instances from different classes are further apart, resulting in a statistically significant improvement over other approaches on three datasets from two different domains.
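The sketch below illustrates how a representation with tight, well-separated classes can be used for open set recognition. It assumes the embeddings have already been produced by some trained network; the centroid-plus-threshold rule, the function names, and the toy values are illustrative assumptions rather than the paper's actual procedure.

```python
import math


def class_centroids(embeddings, labels):
    """Compute the mean embedding of each known class."""
    sums, counts = {}, {}
    for vec, label in zip(embeddings, labels):
        acc = sums.setdefault(label, [0.0] * len(vec))
        for i, value in enumerate(vec):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {c: [s / counts[c] for s in sums[c]] for c in sums}


def predict_open_set(vec, centroids, threshold):
    """Return the nearest known class, or "unknown" if it is too far away."""
    label, dist = min(
        ((c, math.dist(vec, mean)) for c, mean in centroids.items()),
        key=lambda pair: pair[1],
    )
    return label if dist <= threshold else "unknown"


# Toy 2-D embeddings for two known classes (made-up values).
train_embeddings = [(0.0, 0.0), (0.0, 1.0), (5.0, 5.0), (5.0, 6.0)]
train_labels = ["a", "a", "b", "b"]
centroids = class_centroids(train_embeddings, train_labels)

near_known = predict_open_set((0.2, 0.4), centroids, threshold=2.0)
far_away = predict_open_set((10.0, -3.0), centroids, threshold=2.0)
print(near_known, far_away)
```

The threshold is a free parameter here; in practice it would have to be chosen from held-out data, since a value that is too small rejects known instances and a value that is too large accepts unknown ones.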
