Philip K. Chan

Feature Decoupling in Self-supervised Representation Learning for Open Set Recognition

Sep 28, 2022

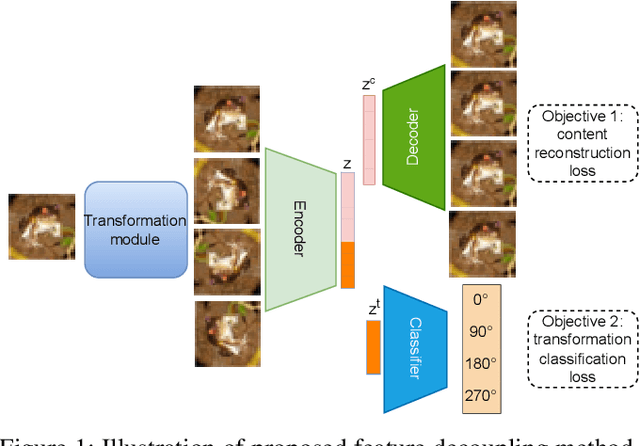

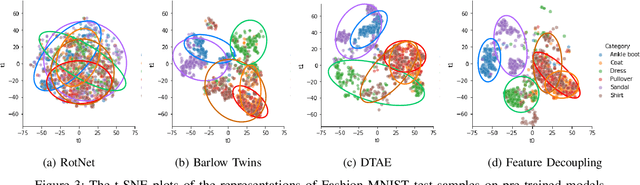

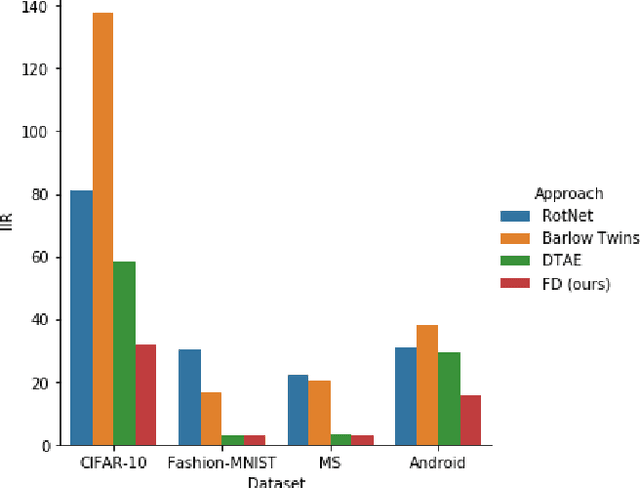

Abstract: Because unknown classes may be present at classification time, the open set recognition (OSR) task aims to classify an instance into a known class or reject it as unknown. In this paper, we use a two-stage training strategy for OSR problems. In the first stage, we introduce a self-supervised feature decoupling method that finds the content features of input samples from the known classes. Specifically, our feature decoupling approach learns a representation that can be split into content features and transformation features. In the second stage, we fine-tune the content features with the class labels. The fine-tuned content features are then used for OSR. We also consider an unsupervised OSR scenario, in which we cluster the content features learned in the first stage. To measure representation quality, we introduce the intra-inter ratio (IIR). Our experimental results indicate that the proposed self-supervised approach outperforms others on image and malware OSR problems. Our analyses also indicate that IIR is correlated with OSR performance.
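
The abstract names the intra-inter ratio (IIR) but does not define it. The sketch below shows one plausible reading and is not the paper's formula: it assumes IIR is the mean distance from each sample to its own class centroid, divided by the mean pairwise distance between class centroids. The function names `centroid` and `intra_inter_ratio` are hypothetical.

```python
from math import dist
from statistics import mean


def centroid(points):
    """Coordinate-wise mean of a list of equal-length vectors."""
    return [mean(coords) for coords in zip(*points)]


def intra_inter_ratio(features, labels):
    """Assumed IIR (not the paper's definition): mean sample-to-own-centroid
    distance divided by mean centroid-to-centroid distance."""
    by_class = {}
    for x, y in zip(features, labels):
        by_class.setdefault(y, []).append(x)
    if len(by_class) < 2:
        raise ValueError("need at least two classes to compute a ratio")

    centroids = {y: centroid(xs) for y, xs in by_class.items()}

    # Intra-class spread: how far samples sit from their own class centroid.
    intra = mean(dist(x, centroids[y]) for x, y in zip(features, labels))

    # Inter-class separation: average distance over all centroid pairs.
    keys = list(centroids)
    inter = mean(
        dist(centroids[a], centroids[b])
        for i, a in enumerate(keys)
        for b in keys[i + 1:]
    )
    return intra / inter


if __name__ == "__main__":
    feats = [[0, 0], [0, 2], [10, 0], [10, 2]]
    labs = [0, 0, 1, 1]
    print(intra_inter_ratio(feats, labs))
```

Under this assumed definition, a smaller value means tighter classes relative to the gaps between them.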

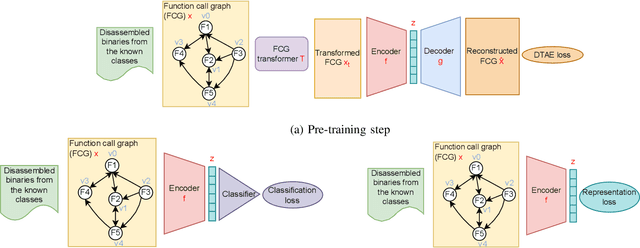

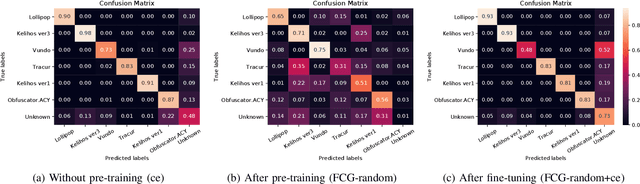

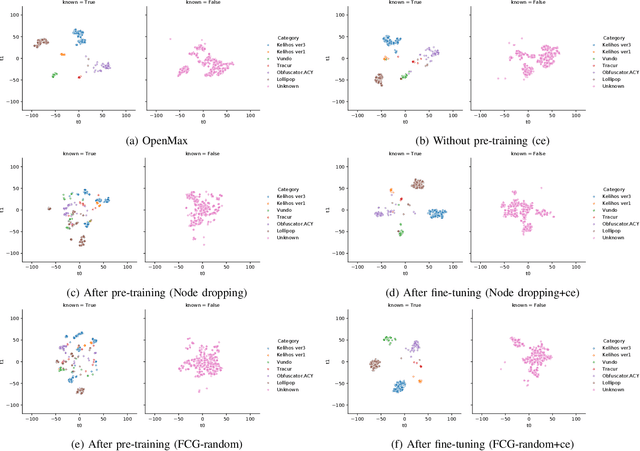

Representation learning with function call graph transformations for malware open set recognition

May 13, 2022

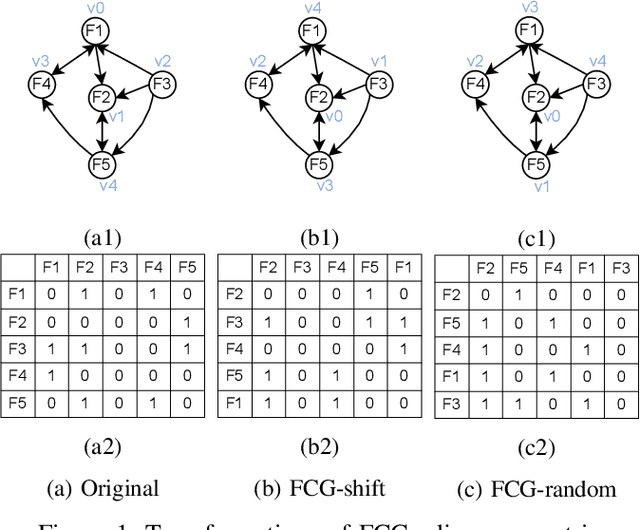

Abstract: The open set recognition (OSR) problem is a challenge in many machine learning (ML) applications, such as security. Because new, unknown malware families appear regularly, it is difficult to collect training samples that cover all classes. An advanced malware classification system should classify the known classes correctly while remaining sensitive to the unknown class. In this paper, we introduce a self-supervised pre-training approach for the OSR problem in malware classification. We propose two transformations for function call graph (FCG) based malware representations to facilitate the pretext task. We also present a statistical thresholding approach to find the optimal threshold for the unknown class. The experimental results indicate that our proposed pre-training process can improve the performance of different downstream loss functions on the OSR problem.

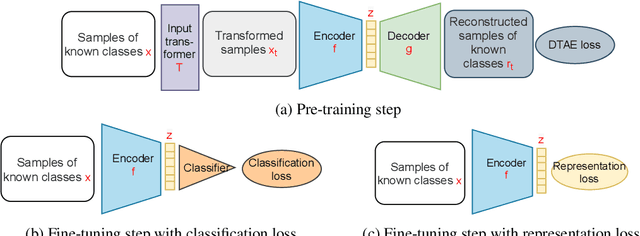

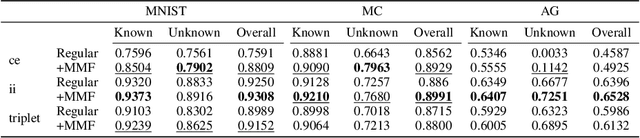

Self-supervised Detransformation Autoencoder for Representation Learning in Open Set Recognition

May 28, 2021

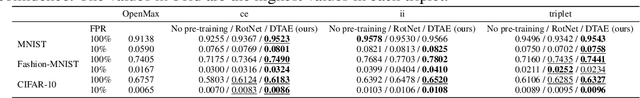

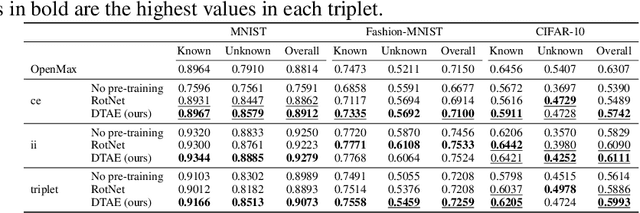

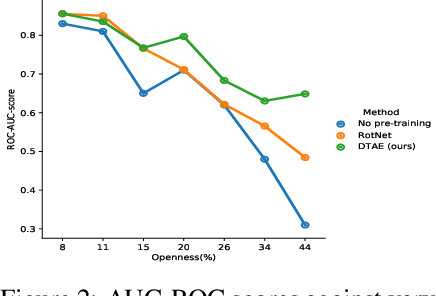

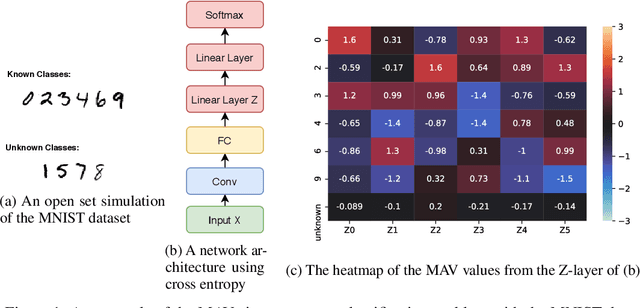

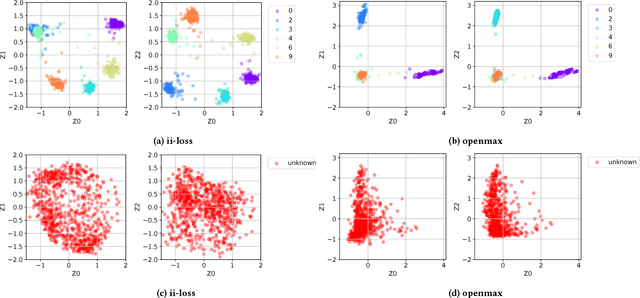

Abstract: The objective of open set recognition (OSR) is to learn a classifier that can reject unknown samples while classifying the known classes accurately. In this paper, we propose a self-supervision method, Detransformation Autoencoder (DTAE), for the OSR problem. The proposed method learns representations that are invariant to transformations of the input data. Experiments on several standard image datasets indicate that the pre-training process significantly improves model performance in OSR tasks. Our proposed self-supervision method also achieves significant gains in detecting the unknown class and classifying the known classes. Moreover, our analysis indicates that DTAE can yield representations that contain more target class information and less transformation information than RotNet.

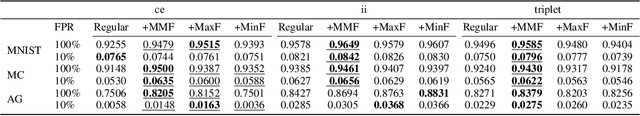

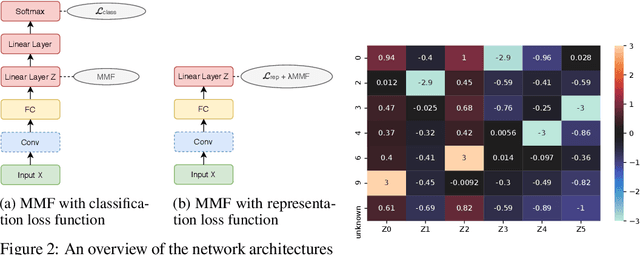

MMF: A loss extension for feature learning in open set recognition

Jun 26, 2020

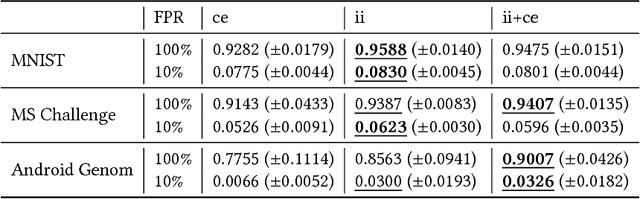

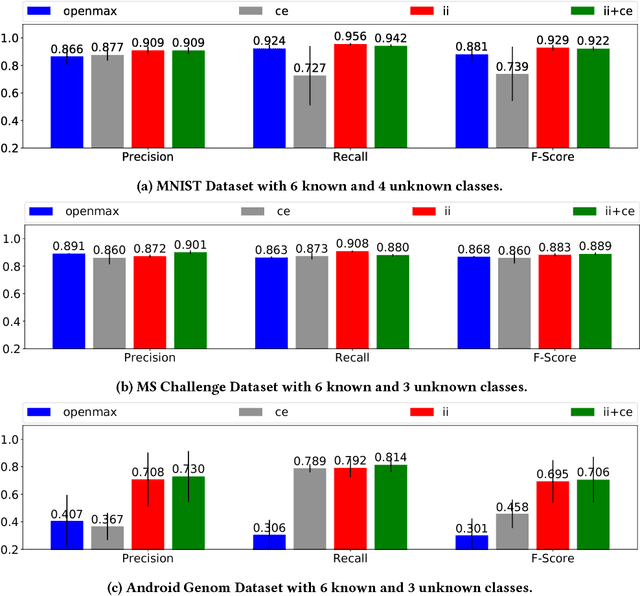

Abstract: Open set recognition (OSR) is the problem of classifying the known classes while identifying the unknown classes when the collected samples cannot exhaust all the classes. The OSR problem has many applications. For instance, frequently emerging new malware classes require a system that can classify the known classes and identify the unknown malware classes. In this paper, we propose an add-on extension for loss functions in neural networks to address the OSR problem. Our loss extension leverages the neural network to find polar representations for the known classes so that the representations of the known and the unknown classes become more effectively separable. Our contributions are as follows. First, we introduce an extension that can be incorporated into different loss functions to find more discriminative representations. Second, we show that the proposed extension can significantly improve the performance of two different types of loss functions on datasets from two different domains. Third, we show that with the proposed extension, one loss function outperforms the others in terms of training time and model accuracy.

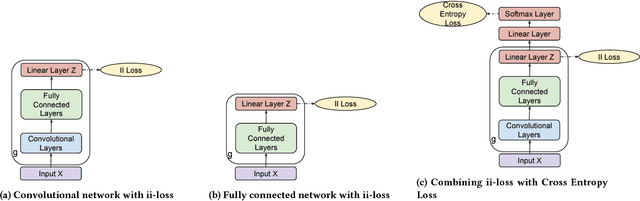

Learning a Neural-network-based Representation for Open Set Recognition

Feb 12, 2018

Abstract: Open set recognition problems exist in many domains. For example, in security, new malware classes emerge regularly; therefore, malware classification systems need to identify instances from unknown classes in addition to discriminating between known classes. In this paper, we present a neural-network-based representation for addressing the open set recognition problem. In this representation, instances from the same class are close to each other while instances from different classes are farther apart, resulting in statistically significant improvement when compared to other approaches on three datasets from two different domains.
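
A representation in which same-class instances cluster together lends itself to distance-based rejection. The sketch below illustrates that idea in general and is not the paper's method: it assumes features have already been extracted, uses class centroids with Euclidean distance, and takes a hand-picked `threshold`. The names `fit_centroids`, `predict_open_set`, and `UNKNOWN` are hypothetical.

```python
from math import dist
from statistics import mean

UNKNOWN = -1  # hypothetical label for instances rejected as unknown


def fit_centroids(features, labels):
    """Compute one centroid per known class from labeled feature vectors."""
    by_class = {}
    for x, y in zip(features, labels):
        by_class.setdefault(y, []).append(x)
    return {y: [mean(c) for c in zip(*xs)] for y, xs in by_class.items()}


def predict_open_set(x, centroids, threshold):
    """Return the nearest known class, or UNKNOWN if x is farther than
    `threshold` (an assumed, hand-picked value) from every centroid."""
    label, distance = min(
        ((y, dist(x, c)) for y, c in centroids.items()),
        key=lambda pair: pair[1],
    )
    return label if distance <= threshold else UNKNOWN


if __name__ == "__main__":
    feats = [[0, 0], [0, 2], [10, 0], [10, 2]]
    labs = [0, 0, 1, 1]
    cents = fit_centroids(feats, labs)
    for query in ([0.5, 1.0], [9.0, 1.0], [5.0, 50.0]):
        print(query, predict_open_set(query, cents, threshold=2.0))
```

The tighter and better separated the known classes are in feature space, the easier it is to choose a threshold that accepts known instances and rejects unknown ones.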
