Megan Micheletti

Understanding Postpartum Parents' Experiences via Two Digital Platforms

Dec 22, 2022

Abstract: Digital platforms, including online forums and helplines, have emerged as avenues of support for caregivers suffering from postpartum mental health distress. Understanding support seekers' experiences as shared on these platforms could provide crucial insight into caregivers' needs during this vulnerable time. In the current work, we provide a descriptive analysis of the concerns, psychological states, and motivations shared by healthy and distressed postpartum support seekers on two digital platforms: a one-on-one digital helpline and a publicly available online forum. Using a combination of human annotations, dictionary models, and unsupervised techniques, we find stark differences between the experiences of distressed and healthy mothers. Distressed mothers described interpersonal problems and a lack of support, with 8.60% to 14.56% reporting severe symptoms, including suicidal ideation. In contrast, the majority of healthy mothers described childcare issues, such as questions about breastfeeding or sleeping, and reported no severe mental health concerns. Across the two digital platforms, we found that distressed mothers shared similar content. However, the patterns of speech and affect shared by distressed mothers differed between the helpline and the online forum, suggesting the design of these platforms may shape meaningful measures of their support-seeking experiences. Our results provide new insight into the experiences of caregivers suffering from postpartum mental health distress. We conclude by discussing methodological considerations for understanding content shared by support seekers and design considerations for the next generation of support tools for postpartum parents.

Classification of Infant Crying in Real-World Home Environments Using Deep Learning

May 25, 2020

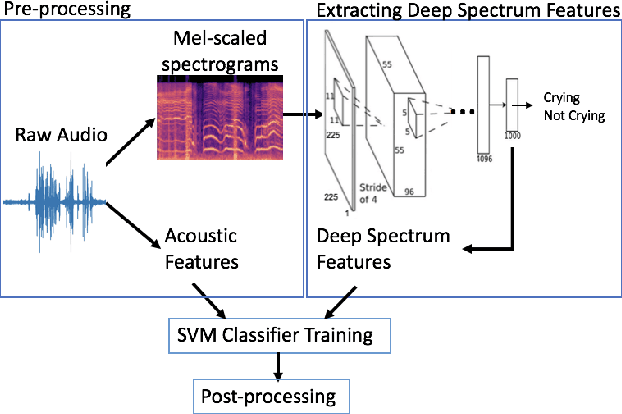

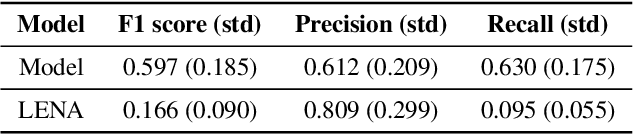

Abstract: In the domain of social signal processing, audio recognition is a promising avenue for accessing daily behaviors that contribute to health and well-being. However, despite advances in mobile computing and machine learning, audio behavior detection models are largely constrained to data collected in controlled settings, such as call centers. This is problematic because their performance is unlikely to generalize to real-world applications. In the current paper, we present a model combining deep spectrum and acoustic features to detect and classify infant distress vocalizations from 24-hour, continuous, raw real-world data collected via a wearable audio recorder. Our model dramatically outperforms infant distress detection models trained and tested on equivalent real-world datasets. In particular, our model has an F1 score of 0.597 relative to F1 scores of 0.166 and 0.26 achieved by state-of-practice and state-of-the-art real-world infant distress classifiers, respectively. We end by discussing what may have facilitated this large gain in accuracy, including the use of supervised deep spectrum features and the fact that we collected and annotated a large dataset of 780 hours of real-world audio data with over 25 hours of labelled distress.
