Md. Al Mehedi Hasan

Parkinson Disease Detection Based on In-air Dynamics Feature Extraction and Selection Using Machine Learning

Dec 19, 2024

Abstract: Parkinson's disease (PD) is a progressive neurological disorder that impairs movement control, leading to symptoms such as tremors, stiffness, and bradykinesia. Researchers analyzing handwriting data for PD detection typically rely on statistical features computed over the entire handwriting task. While this method can capture broad patterns, it has several limitations, including a lack of focus on dynamic change, oversimplified feature representation, lack of directional information, and missed micro-movements or subtle variations. Consequently, these systems struggle to achieve good accuracy, robustness, and sensitivity. To overcome this problem, we propose an optimized PD detection methodology that combines newly developed dynamic kinematic features with machine learning (ML)-based techniques to capture movement dynamics during handwriting tasks. We first extracted 65 newly developed kinematic features from the first and last 10% phases of the handwriting task rather than from the entire task. Alongside these, we reused 23 existing kinematic features, resulting in a comprehensive new feature set. Next, we enhanced the kinematic features by applying statistical formulas to compute hierarchical features from the handwriting data. This approach allows us to capture subtle movement variations that distinguish PD patients from healthy controls. To further optimize the feature set, we applied the Sequential Forward Floating Selection method to select the most relevant features, reducing dimensionality and computational complexity. Finally, we employed an ML-based approach with ensemble voting across top-performing tasks, achieving 96.99% accuracy on task-wise classification and 99.98% accuracy on task ensembles, surpassing the existing state-of-the-art model by 2% on the PaHaW dataset.
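As a rough illustration of two steps named in the abstract (features taken from the first and last 10% of a task, and voting across tasks), the sketch below uses only the Python standard library. The function names, the choice of speed as the kinematic quantity, and the `(x, y, t)` sample layout are assumptions made for this example; the paper's actual 65 features and its classifier are not reproduced here, and the feature-selection step is omitted.

```python
import math
from collections import Counter
from statistics import mean, pstdev


def speeds(points):
    """Point-to-point pen speed for a list of (x, y, t) samples."""
    out = []
    for (x0, y0, t0), (x1, y1, t1) in zip(points, points[1:]):
        dt = t1 - t0
        if dt > 0:  # skip repeated timestamps
            out.append(math.hypot(x1 - x0, y1 - y0) / dt)
    return out


def phase_features(points, fraction=0.10):
    """Mean and std of speed over the first and last `fraction` of a task.

    Hypothetical stand-in for the phase-wise kinematic features; at least
    two samples are kept per phase so one speed value can be computed.
    """
    n = max(2, int(round(len(points) * fraction)))
    feats = {}
    for name, segment in (("first", points[:n]), ("last", points[-n:])):
        v = speeds(segment)
        feats[f"{name}_mean_speed"] = mean(v) if v else 0.0
        feats[f"{name}_std_speed"] = pstdev(v) if v else 0.0
    return feats


def majority_vote(task_predictions):
    """Return the label predicted by the most tasks."""
    return Counter(task_predictions).most_common(1)[0][0]


# Usage: a synthetic stroke moving at constant unit speed along x.
stroke = [(float(i), 0.0, float(i)) for i in range(20)]
features = phase_features(stroke)
label = majority_vote(["PD", "HC", "PD"])
```

Restricting the window to the start and end of a task is what lets the features reflect how movement changes over time, rather than averaging that change away across the whole recording.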

Bengali Sign Language Recognition through Hand Pose Estimation using Multi-Branch Spatial-Temporal Attention Model

Aug 26, 2024

Abstract: Hand gesture-based sign language recognition (SLR) is one of the most advanced applications of machine learning and computer vision. Although many researchers have explored Bengali Sign Language (BSL) recognition over the past few years, specific issues remain unaddressed, such as skeleton- and transformer-based BSL recognition. In addition, existing BSL models have not been evaluated under varied, unseen environmental conditions, so their ability to generalize to everyday signs remains unproven. As a consequence, existing BSL recognition systems provide a limited perspective of their generalisation ability, as they are tested on datasets containing few BSL alphabets that have a wide disparity in gestures and are easy to differentiate. To overcome these limitations, we propose a spatial-temporal attention-based BSL recognition model that uses hand joint skeletons extracted from image sequences. The main aim of using hand skeleton-based BSL data is to preserve privacy and to work with low-resolution image sequences, which require minimal computational cost and modest hardware. Our model captures discriminative structural displacements and short-range dependencies based on unified joint features projected onto a high-dimensional feature space. Specifically, the use of Separable TCN combined with a powerful multi-head spatial-temporal attention architecture yielded high accuracy. Extensive experiments with a proposed dataset and two benchmark BSL datasets, covering intra- and inter-dataset evaluation settings, demonstrated that our proposed models achieve competitive performance with extremely low computational complexity and run faster than existing models.
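The low computational cost attributed to the Separable TCN can be made concrete with a parameter count. The sketch below assumes the usual depthwise-separable factorization of a 1D temporal convolution (a per-channel temporal filter followed by a 1x1 pointwise mix); the abstract does not state the exact layer design, and the channel and kernel sizes are illustrative values, not figures from the paper.

```python
def standard_conv1d_params(c_in, c_out, kernel):
    """Weights plus biases of an ordinary 1D convolution."""
    return c_in * c_out * kernel + c_out


def separable_conv1d_params(c_in, c_out, kernel):
    """Depthwise temporal filter per channel, then a 1x1 pointwise mix."""
    depthwise = c_in * kernel + c_in   # one kernel (and bias) per input channel
    pointwise = c_in * c_out + c_out   # 1x1 convolution across channels
    return depthwise + pointwise


# Illustrative sizes only: 64 channels in and out, temporal kernel of 9.
full = standard_conv1d_params(64, 64, 9)
separable = separable_conv1d_params(64, 64, 9)
ratio = full / separable
```

For these sizes the separable layer needs 4,800 parameters against 36,928 for the standard one, roughly a 7.7x reduction.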

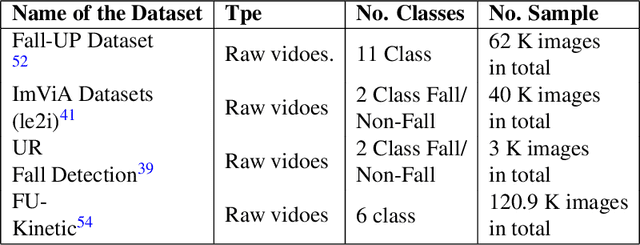

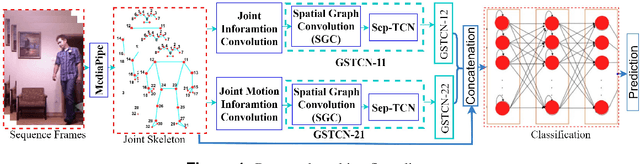

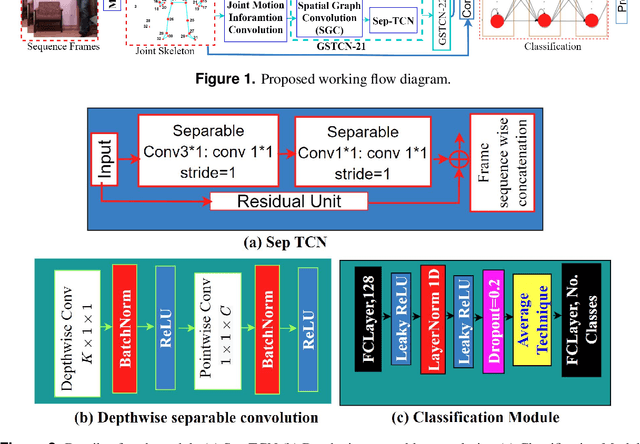

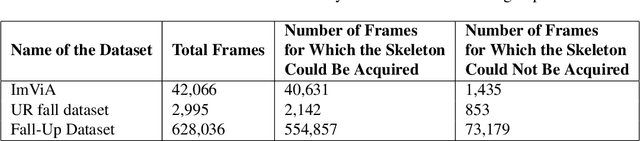

Computer-Aided Fall Recognition Using a Three-Stream Spatial-Temporal GCN Model with Adaptive Feature Aggregation

Aug 22, 2024

Abstract: The prevention of falls is paramount in modern healthcare, particularly for the elderly, as falls can lead to severe injuries or even fatalities. Additionally, the growing incidence of falls among the elderly, coupled with the urgent need to prevent suicide attempts resulting from medication overdose, underscores the critical importance of accurate and efficient fall detection methods. In this scenario, a computer-aided fall detection system is essential for saving elderly people's lives worldwide. Many researchers have been working to develop fall detection systems. However, existing systems often suffer from unsatisfactory accuracy, limited robustness, high computational complexity, and sensitivity to environmental factors due to a lack of effective features. In response to these challenges, this paper proposes a novel three-stream spatial-temporal feature-based fall detection system. Our system incorporates joint skeleton-based spatial and temporal Graph Convolutional Network (GCN) features, joint motion-based spatial and temporal GCN features, and residual connection-based features. Each stream employs adaptive graph-based feature aggregation and consecutive separable temporal convolutional networks (Sep-TCN), significantly reducing computational complexity and model parameters compared to prior systems. Experimental results across multiple datasets demonstrate the effectiveness and efficiency of our proposed system, with accuracies of 99.51%, 99.15%, 99.79%, and 99.85% achieved on the ImViA, UR-Fall, Fall-UP, and FU-Kinect datasets, respectively. This performance highlights the system's efficiency and generalizability in real-world fall detection scenarios, offering significant advancements in healthcare and societal well-being.
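One plausible reading of the "joint motion-based" stream is that its input is the frame-to-frame displacement of each skeleton joint, a common choice in skeleton-based recognition. The sketch below computes that displacement under this assumption; the abstract does not define the motion features, and the function name and data layout are hypothetical.

```python
def joint_motion(sequence):
    """Frame-to-frame displacement of every joint.

    `sequence` is a list of frames; each frame is a list of (x, y, z)
    joint coordinates. The result has one fewer frame than the input.
    """
    motion = []
    for prev_frame, curr_frame in zip(sequence, sequence[1:]):
        motion.append([
            tuple(c - p for p, c in zip(prev_joint, curr_joint))
            for prev_joint, curr_joint in zip(prev_frame, curr_frame)
        ])
    return motion


# Usage: three frames of a two-joint skeleton.
frames = [
    [(0, 0, 0), (1, 1, 1)],
    [(1, 0, 0), (1, 2, 1)],
    [(3, 0, 0), (1, 2, 4)],
]
deltas = joint_motion(frames)
```

A sudden large displacement in the vertical coordinate across a few frames is the kind of signal such a stream could expose to the downstream network.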

Cervical Cancer Detection Using Multi-Branch Deep Learning Model

Aug 20, 2024

Abstract: Cervical cancer is a major global health concern for women, triggered mainly by persistent infection with high-risk HPV. It remains a global health challenge, with diagnosis rates among young women rising from 10% to 40% over three decades. While Pap smear screening is a prevalent diagnostic method, visual image analysis can be lengthy and often leads to mistakes. Early detection of the disease can contribute significantly to improving patient outcomes. In recent decades, many researchers have employed machine learning techniques that showed promise in cervical cancer detection based on medical images. More recently, many researchers have employed various deep learning techniques to achieve high accuracy in detecting cervical cancer, but they still face various challenges. This research proposes a novel approach to automating cervical cancer image classification using Multi-Head Self-Attention (MHSA) and convolutional neural networks (CNNs). The proposed method leverages the strengths of both MHSA and CNNs to capture local and global features within cervical images in two streams. MHSA helps the model focus on relevant regions of interest, while the CNN extracts hierarchical features that contribute to accurate classification. Finally, we combined the features of the two streams and fed them into the classification module to refine the features and perform classification. To evaluate the proposed approach, we used the SIPaKMeD dataset, which classifies cervical cells into five categories. Our model achieved an accuracy of 98.522%. This result demonstrates high recognition accuracy for medical image classification and holds promise for other medical image recognition tasks.
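To illustrate the attention mechanism and the two-stream fusion described above, the following is a minimal single-head sketch of scaled dot-product self-attention in plain Python, followed by concatenation with a second feature vector. It is an assumption-laden simplification: the learned query, key, and value projections and the multiple heads of a real MHSA layer are omitted, and `fuse` and the sample values are hypothetical.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of scores."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens):
    """Scaled dot-product self-attention with identity projections.

    `tokens` is a list of equal-length feature vectors (e.g. image patches).
    Each output vector is a weighted average of all input vectors.
    """
    dim = len(tokens[0])
    outputs = []
    for query in tokens:
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in tokens
        ]
        weights = softmax(scores)
        outputs.append([
            sum(w * value[j] for w, value in zip(weights, tokens))
            for j in range(dim)
        ])
    return outputs


def fuse(attention_features, cnn_features):
    """Concatenate the two streams before a classifier head."""
    return list(attention_features) + list(cnn_features)


# Usage: two identical patch vectors, then fusion with a dummy CNN vector.
attended = self_attention([[1.0, 0.0], [1.0, 0.0]])
fused = fuse(attended[0], [0.5, 0.25, 0.125])
```

Because every output mixes information from all positions, the attention stream supplies global context, while a convolutional stream contributes local texture; concatenation is one simple way to hand both to the classifier.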
