Maxwell T. West

Adversarial Robustness Guarantees for Quantum Classifiers

May 16, 2024

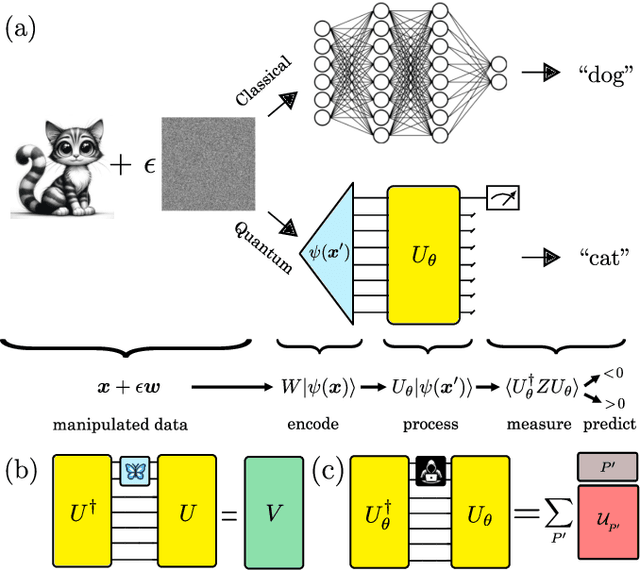

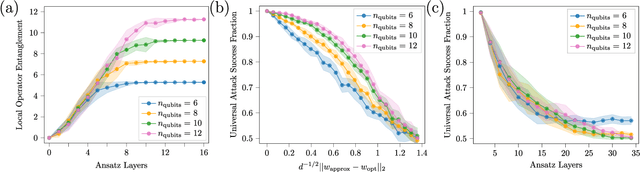

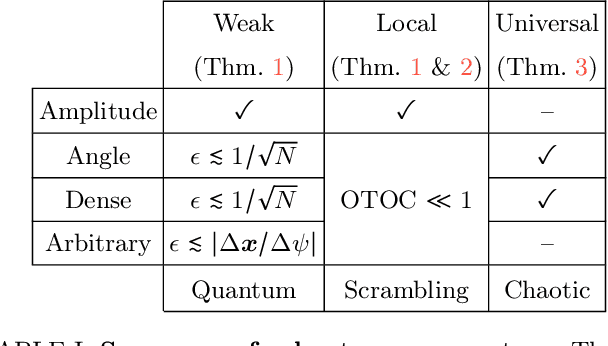

Abstract: Despite their ever more widespread deployment throughout society, machine learning algorithms remain critically vulnerable to being spoofed by subtle adversarial tampering with their input data. The prospect of near-term quantum computers being capable of running quantum machine learning (QML) algorithms has therefore generated intense interest in their adversarial vulnerability. Here we show that quantum properties of QML algorithms can confer fundamental protections against such attacks, in certain scenarios guaranteeing robustness against classically armed adversaries. We leverage tools from many-body physics to identify the quantum sources of this protection. Our results offer a theoretical underpinning of recent evidence suggesting quantum advantages in the search for adversarial robustness. In particular, we prove that quantum classifiers are: (i) protected against weak perturbations of data drawn from the trained distribution, (ii) protected against local attacks if they are insufficiently scrambling, and (iii) protected against universal adversarial attacks if they are sufficiently quantum chaotic. Our analytic results are supported by numerical evidence demonstrating the applicability of our theorems and the resulting robustness of a quantum classifier in practice. This line of inquiry constitutes a concrete pathway to advantage in QML, orthogonal to the usually sought improvements in model speed or accuracy.

Towards quantum enhanced adversarial robustness in machine learning

Jun 22, 2023

Abstract: Machine learning algorithms are powerful tools for data-driven tasks such as image classification and feature detection; however, their vulnerability to adversarial examples (input samples manipulated to fool the algorithm) remains a serious challenge. The integration of machine learning with quantum computing has the potential to yield tools offering not only better accuracy and computational efficiency, but also superior robustness against adversarial attacks. Indeed, recent work has employed quantum mechanical phenomena to defend against adversarial attacks, spurring the rapid development of the field of quantum adversarial machine learning (QAML) and potentially yielding a new source of quantum advantage. Despite promising early results, challenges remain in building robust real-world QAML tools. In this review we discuss recent progress in QAML and identify key challenges. We also suggest future research directions which could determine the route to practicality for QAML approaches as quantum computing hardware scales up and noise levels are reduced.

* 10 Pages, 4 Figures

Hybrid Quantum-Classical Generative Adversarial Network for High Resolution Image Generation

Dec 22, 2022

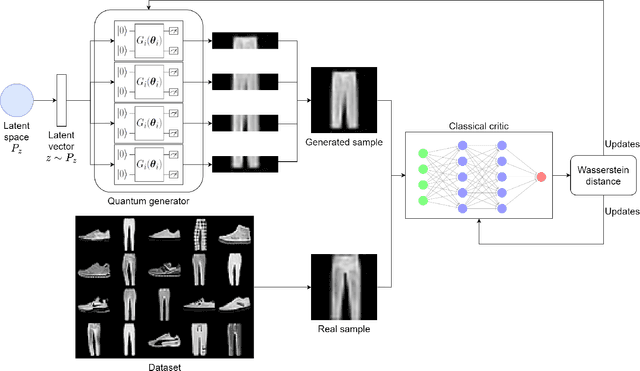

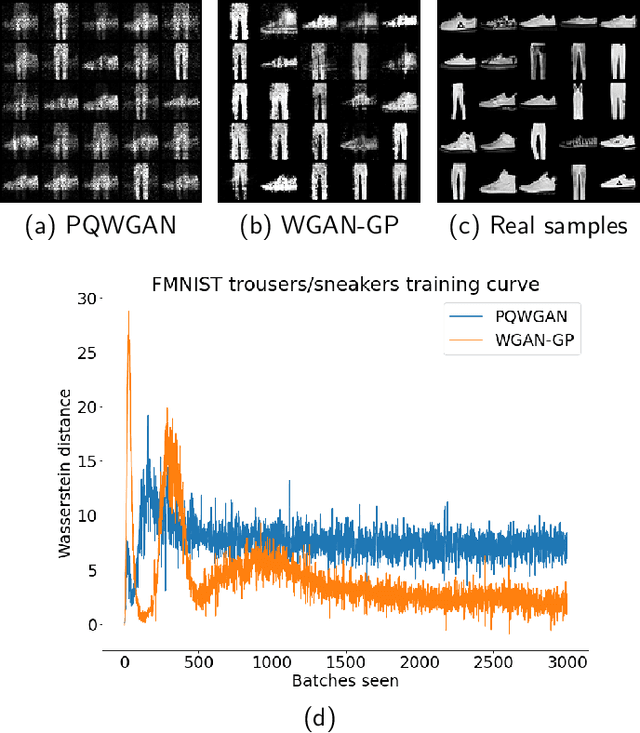

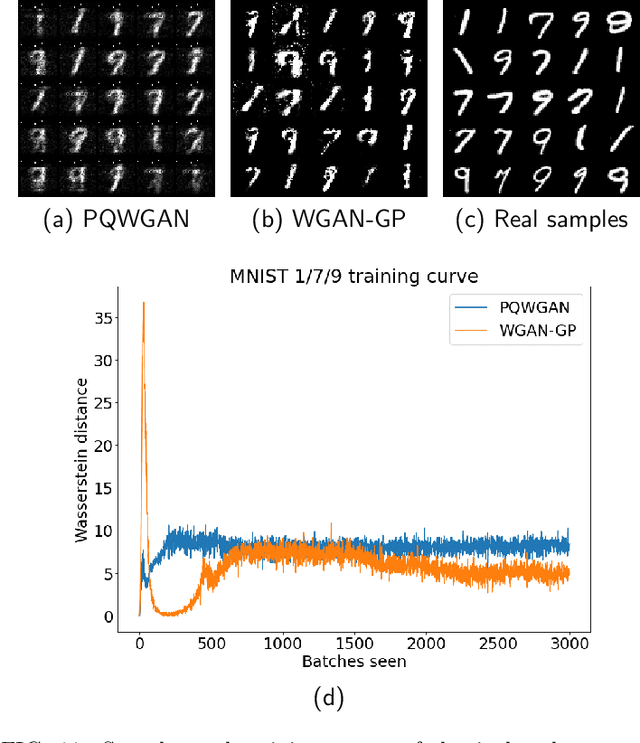

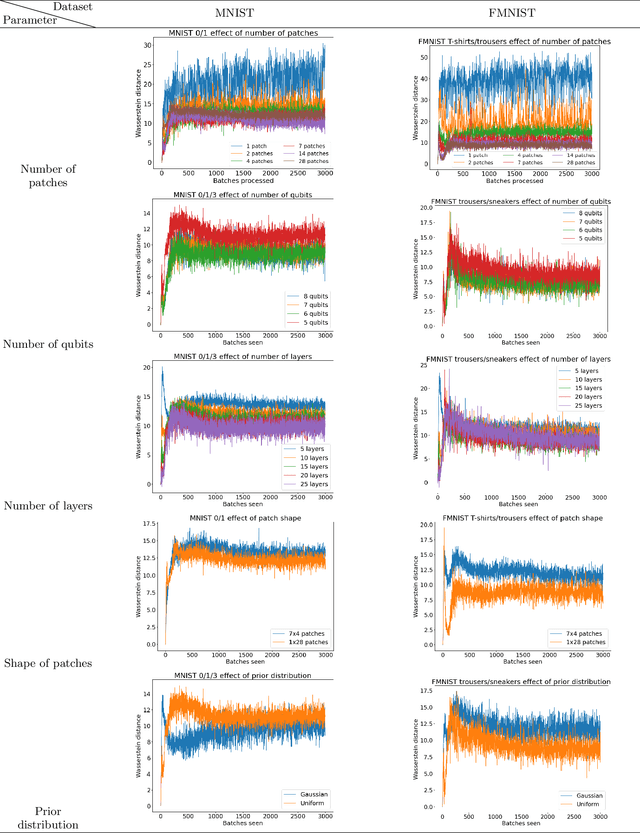

Abstract: Quantum machine learning (QML) has received increasing attention due to its potential to outperform classical machine learning methods in various problems. A subclass of QML methods is quantum generative adversarial networks (QGANs), which have been studied as a quantum counterpart of the classical GANs widely used in image manipulation and generation tasks. The existing work on QGANs is still limited to small-scale proof-of-concept examples based on images with significant down-scaling. Here we integrate classical and quantum techniques to propose a new hybrid quantum-classical GAN framework. We demonstrate its superior learning capabilities by generating $28 \times 28$ pixel grey-scale images without dimensionality reduction or classical pre/post-processing on multiple classes of the standard MNIST and Fashion MNIST datasets, achieving results comparable to classical frameworks with three orders of magnitude fewer trainable generator parameters. To gain further insight into the working of our hybrid approach, we systematically explore the impact of its parameter space by varying the number of qubits, the size of image patches, the number of layers in the generator, the shape of the patches and the choice of prior distribution. Our results show that increasing the quantum generator size generally improves the learning capability of the network. The developed framework provides a foundation for the future design of QGANs with optimal parameter sets tailored for complex image generation tasks.

Benchmarking Adversarially Robust Quantum Machine Learning at Scale

Nov 23, 2022

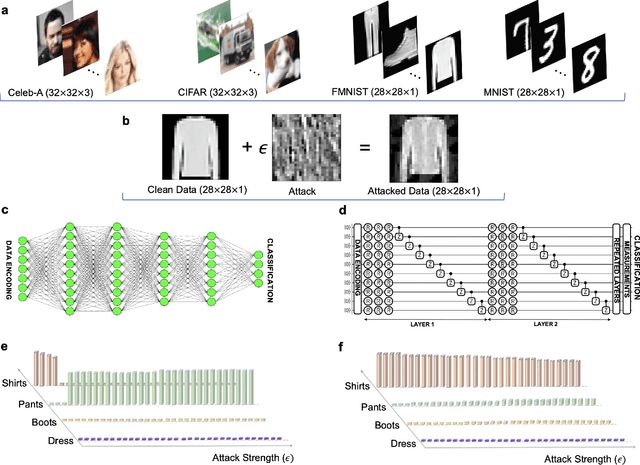

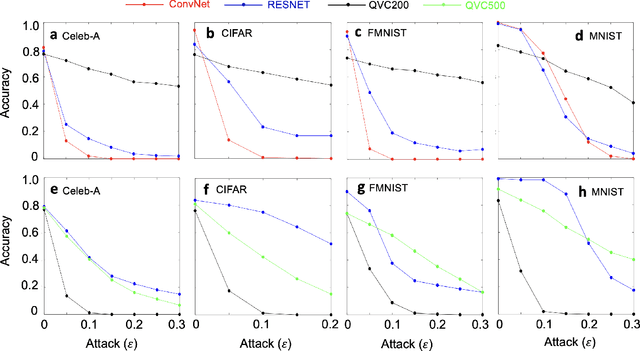

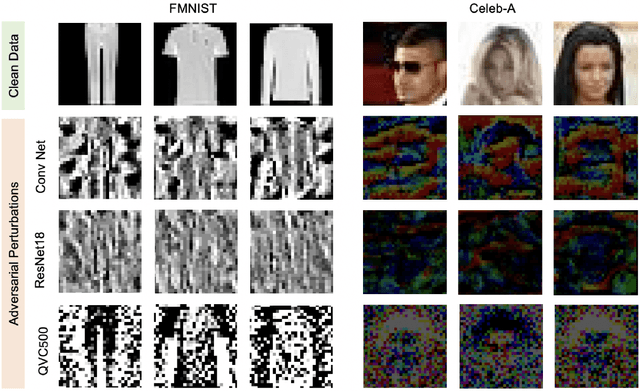

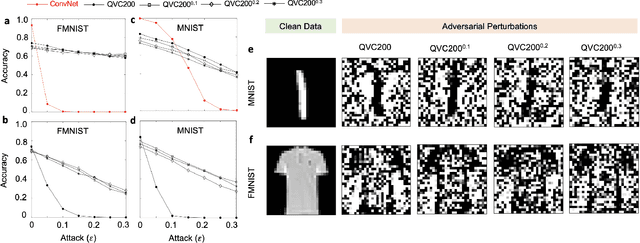

Abstract: Machine learning (ML) methods such as artificial neural networks are rapidly becoming ubiquitous in modern science, technology and industry. Despite their accuracy and sophistication, neural networks can be easily fooled by carefully designed malicious inputs known as adversarial attacks. While such vulnerabilities remain a serious challenge for classical neural networks, the extent of their existence is not fully understood in the quantum ML setting. In this work, we benchmark the robustness of quantum ML networks, such as quantum variational classifiers (QVCs), at scale by performing rigorous training for both simple and complex image datasets and through a variety of high-end adversarial attacks. Our results show that QVCs offer notably enhanced robustness against classical adversarial attacks by learning features which are not detected by the classical neural networks, indicating a possible quantum advantage for ML tasks. Remarkably, the converse is not true: attacks on quantum networks are also capable of deceiving classical neural networks. By combining quantum and classical network outcomes, we propose a novel adversarial attack detection technology. Traditionally, quantum advantage in ML systems has been sought through increased accuracy or algorithmic speed-up, but our work reveals the potential for a new kind of quantum advantage through superior robustness of ML models, whose practical realisation will address serious security concerns and reliability issues of ML algorithms employed in a myriad of applications including autonomous vehicles, cybersecurity, and surveillance robotic systems.
