Maximilian Kraus

Multiple Pedestrians and Vehicles Tracking in Aerial Imagery: A Comprehensive Study

Oct 19, 2020

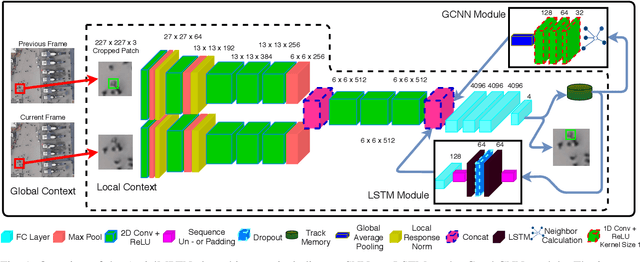

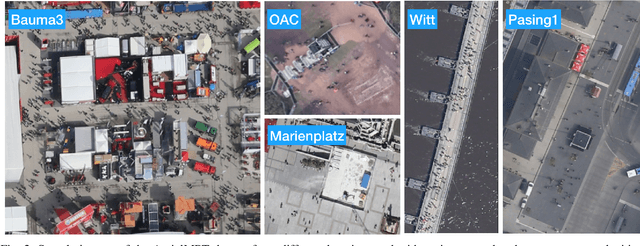

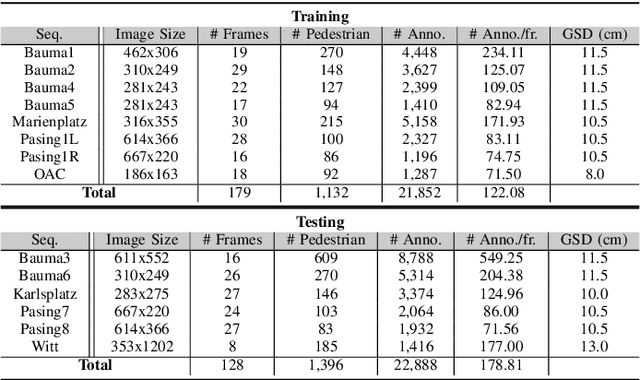

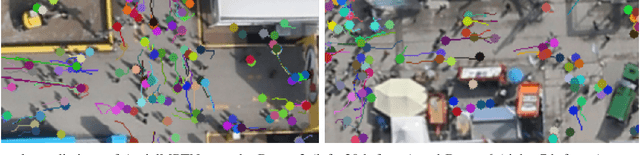

Abstract: In this paper, we address various challenges in multi-pedestrian and vehicle tracking in high-resolution aerial imagery through an intensive evaluation of a number of traditional and Deep Learning based Single- and Multi-Object Tracking methods. We also describe our proposed Deep Learning based Multi-Object Tracking method, AerialMPTNet, which fuses appearance, temporal, and graphical information using a Siamese Neural Network, a Long Short-Term Memory, and a Graph Convolutional Neural Network module for more accurate and stable tracking. Moreover, we investigate the influence of Squeeze-and-Excitation layers and Online Hard Example Mining on the performance of AerialMPTNet. To the best of our knowledge, we are the first to use these two for regression-based Multi-Object Tracking. Additionally, we study and compare the L1 and Huber loss functions. In our experiments, we extensively evaluate AerialMPTNet on three aerial Multi-Object Tracking datasets, namely the AerialMPT dataset and the KIT AIS pedestrian and vehicle datasets. Qualitative and quantitative results show that AerialMPTNet outperforms all previous methods on the pedestrian datasets and achieves competitive results on the vehicle dataset. In addition, the Long Short-Term Memory and Graph Convolutional Neural Network modules enhance the tracking performance. Using Squeeze-and-Excitation and Online Hard Example Mining helps significantly in some cases while degrading the results in others. According to the results, the L1 loss yields better results than the Huber loss in most scenarios. The presented results provide deep insight into the challenges and opportunities of the aerial Multi-Object Tracking domain, paving the way for future research.
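
The two loss functions compared in the abstract, and the selection step behind Online Hard Example Mining, can be illustrated with a minimal sketch. This is not the authors' implementation: the function names, the `delta` threshold of 1.0, and the rule of keeping a fixed number of highest-loss samples are all assumptions chosen for illustration, using the standard textbook definitions of the L1 and Huber losses.

```python
def l1_loss(pred, target):
    """Mean absolute error between predicted and target values."""
    return sum(abs(p - t) for p, t in zip(pred, target)) / len(pred)


def huber_loss(pred, target, delta=1.0):
    """Huber loss: quadratic for residuals up to delta, linear beyond it.

    delta=1.0 is an assumed default, not a value taken from the paper.
    """
    total = 0.0
    for p, t in zip(pred, target):
        r = abs(p - t)
        if r <= delta:
            total += 0.5 * r * r
        else:
            total += delta * (r - 0.5 * delta)
    return total / len(pred)


def hardest_examples(per_sample_losses, keep):
    """Return the indices of the `keep` samples with the largest loss.

    Hypothetical OHEM-style selection: only these samples would
    contribute to the gradient update in a training step.
    """
    order = sorted(
        range(len(per_sample_losses)),
        key=lambda i: per_sample_losses[i],
        reverse=True,
    )
    return sorted(order[:keep])


if __name__ == "__main__":
    pred = [0.0, 0.0]
    target = [0.5, 3.0]
    print(l1_loss(pred, target))
    print(huber_loss(pred, target))
    print(hardest_examples([0.1, 2.0, 0.5, 1.5], keep=2))
```

For small residuals the Huber loss is smaller and smoother than L1, while for large residuals both grow linearly; which behaves better for a given tracker is an empirical question, as the abstract reports.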

AerialMPTNet: Multi-Pedestrian Tracking in Aerial Imagery Using Temporal and Graphical Features

Jun 27, 2020

Abstract: Multi-pedestrian tracking in aerial imagery has several applications, such as large-scale event monitoring, disaster management, search-and-rescue missions, and input to predictive crowd dynamics models. Due to challenges such as the large number and tiny size of the pedestrians (e.g., 4 x 4 pixels), their similar appearances, the varying scales and atmospheric conditions of the images, and the extremely low frame rates (e.g., 2 fps), current state-of-the-art algorithms, including the deep learning-based ones, are unable to perform well. In this paper, we propose AerialMPTNet, a novel approach for multi-pedestrian tracking in geo-referenced aerial imagery that fuses appearance features from a Siamese Neural Network, movement predictions from a Long Short-Term Memory, and pedestrian interconnections from a GraphCNN. In addition, to address the lack of diverse aerial pedestrian tracking datasets, we introduce the Aerial Multi-Pedestrian Tracking (AerialMPT) dataset, consisting of 307 frames and 44,740 annotated pedestrians. We believe that AerialMPT is the largest and most diverse dataset to date, and it will be released publicly. We evaluate AerialMPTNet on AerialMPT and KIT AIS and benchmark it against several state-of-the-art tracking methods. Results indicate that AerialMPTNet significantly outperforms other methods in accuracy and time efficiency.

Identity Recognition in Intelligent Cars with Behavioral Data and LSTM-ResNet Classifier

Mar 02, 2020

Abstract: Identity recognition in a car cabin is a critical task nowadays and offers a wide field of applications, ranging from personalizing intelligent cars to suit drivers' physical and behavioral needs to increasing safety and security. However, the performance and applicability of published approaches are still not suitable for use in series cars and need to be improved. In this paper, we investigate Human Identity Recognition in a car cabin with Time Series Classification (TSC) and deep neural networks. We use gas and brake pedal pressure as input to our models. This data is easily collectable during driving in everyday situations. Since our classifiers have very low memory requirements and do not require any input data preprocessing, we were able to train on a single Intel i5-3210M processor. Our classification approach is based on a combination of LSTM and ResNet. The network trained on a subset of NUDrive outperforms the ResNet and LSTM models trained on their own by 35.9 % and 53.85 % accuracy, respectively. We reach a final accuracy of 79.49 % on a 10-driver subset of NUDrive and 96.90 % on a 5-driver subset of UTDrive.
