Maxime Kawawa-Beaudan

Behavioral Sequence Modeling with Ensemble Learning

Nov 04, 2024

Abstract: We investigate the use of sequence analysis for behavior modeling, emphasizing that sequential context often outweighs the value of aggregate features in understanding human behavior. We discuss framing common problems in fields like healthcare, finance, and e-commerce as sequence modeling tasks, and address challenges related to constructing coherent sequences from fragmented data and disentangling complex behavior patterns. We present a framework for sequence modeling using Ensembles of Hidden Markov Models, which are lightweight, interpretable, and efficient. Our ensemble-based scoring method enables robust comparison across sequences of different lengths and enhances performance in scenarios with imbalanced or scarce data. The framework scales in real-world scenarios, is compatible with downstream feature-based modeling, and is applicable in both supervised and unsupervised learning settings. We demonstrate the effectiveness of our method with results on a longitudinal human behavior dataset.

Ensemble Methods for Sequence Classification with Hidden Markov Models

Sep 11, 2024

Abstract: We present a lightweight approach to sequence classification using Ensemble Methods for Hidden Markov Models (HMMs). HMMs offer significant advantages in scenarios with imbalanced or smaller datasets due to their simplicity, interpretability, and efficiency. These models are particularly effective in domains such as finance and biology, where traditional methods struggle with high feature dimensionality and varied sequence lengths. Our ensemble-based scoring method enables the comparison of sequences of any length and improves performance on imbalanced datasets. This study focuses on the binary classification problem, particularly in scenarios with data imbalance, where the negative class is the majority (e.g., normal data) and the positive class is the minority (e.g., anomalous data), often with extreme distribution skews. We propose a novel training approach for HMM Ensembles that generalizes to multi-class problems and supports classification and anomaly detection. Our method fits class-specific groups of diverse models using random data subsets, and compares likelihoods across classes to produce composite scores, achieving high average precisions and AUCs. In addition, we compare our approach with neural network-based methods such as Convolutional Neural Networks (CNNs) and Long Short-Term Memory networks (LSTMs), highlighting the efficiency and robustness of HMMs in data-scarce environments. Motivated by real-world use cases, our method demonstrates robust performance across various benchmarks, offering a flexible framework for diverse applications.
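The scoring idea in this abstract (class-specific groups of HMMs whose likelihoods are compared across classes) can be illustrated with a minimal sketch. This is not the authors' implementation: the model parameters below are hand-picked rather than fitted on random data subsets, the per-symbol length normalization is an assumption about how sequences of different lengths might be made comparable, and the names `log_likelihood` and `ensemble_score` are hypothetical.

```python
import math

def log_likelihood(seq, start, trans, emit):
    """Scaled forward algorithm: returns log P(seq | discrete HMM)."""
    n = len(start)
    alpha = [start[i] * emit[i][seq[0]] for i in range(n)]
    total = sum(alpha)
    loglik = math.log(total)
    alpha = [a / total for a in alpha]  # rescale to avoid underflow
    for obs in seq[1:]:
        alpha = [
            sum(alpha[i] * trans[i][j] for i in range(n)) * emit[j][obs]
            for j in range(n)
        ]
        total = sum(alpha)
        loglik += math.log(total)
        alpha = [a / total for a in alpha]
    return loglik

def ensemble_score(seq, pos_models, neg_models):
    """Composite score (assumed form): mean per-symbol log-likelihood under
    the positive-class models minus the same under the negative-class models."""
    def mean_ll(models):
        return sum(log_likelihood(seq, *m) for m in models) / (len(models) * len(seq))
    return mean_ll(pos_models) - mean_ll(neg_models)

# Illustrative two-state models as (start, transition, emission) tuples.
# In practice each model would be fitted on a random subset of one class.
neg_models = [  # "normal" class: symbol 0 is likely
    ([0.5, 0.5], [[0.9, 0.1], [0.1, 0.9]], [[0.9, 0.1], [0.8, 0.2]]),
    ([0.6, 0.4], [[0.8, 0.2], [0.2, 0.8]], [[0.85, 0.15], [0.7, 0.3]]),
]
pos_models = [  # "anomalous" class: symbol 1 is likely
    ([0.5, 0.5], [[0.9, 0.1], [0.1, 0.9]], [[0.2, 0.8], [0.1, 0.9]]),
    ([0.4, 0.6], [[0.7, 0.3], [0.3, 0.7]], [[0.3, 0.7], [0.15, 0.85]]),
]

short_anomalous = [1, 1, 1, 0, 1]
long_normal = [0, 0, 0, 0, 0, 0, 0, 1, 0, 0]
print(ensemble_score(short_anomalous, pos_models, neg_models))  # positive
print(ensemble_score(long_normal, pos_models, neg_models))      # negative
```

A positive score suggests the sequence is better explained by the positive-class models and a negative score suggests the opposite; dividing by sequence length keeps scores for the 5-symbol and 10-symbol sequences on a comparable scale.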

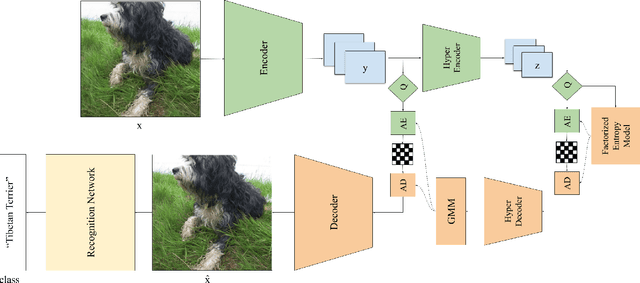

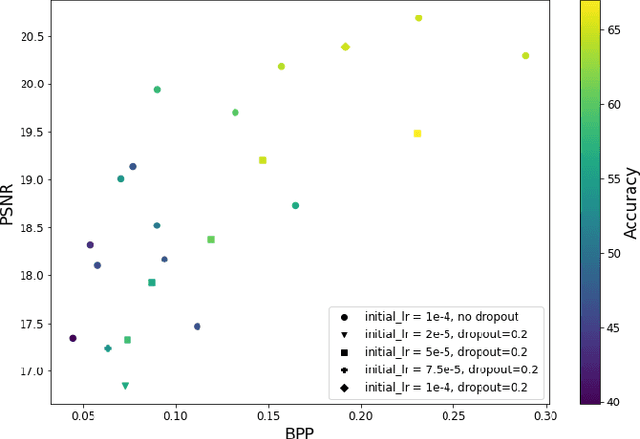

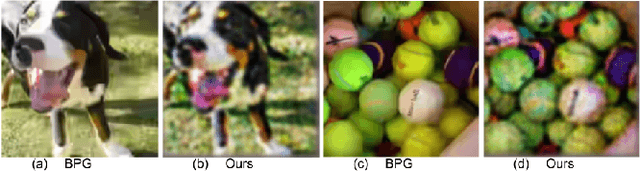

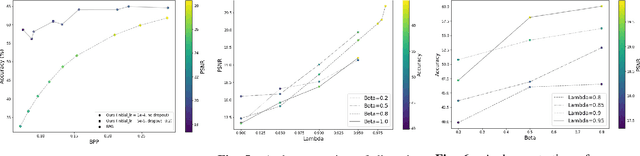

Recognition-Aware Learned Image Compression

Feb 01, 2022

Abstract: Learned image compression methods generally optimize a rate-distortion loss, trading off improvements in visual distortion for added bitrate. Increasingly, however, compressed imagery is used as an input to deep learning networks for various tasks such as classification, object detection, and super-resolution. We propose a recognition-aware learned compression method, which optimizes a rate-distortion loss alongside a task-specific loss, jointly learning compression and recognition networks. We augment a hierarchical autoencoder-based compression network with an EfficientNet recognition model and use two hyperparameters to trade off between distortion, bitrate, and recognition performance. We characterize the classification accuracy of our proposed method as a function of bitrate and find that for low bitrates our method achieves as much as 26% higher recognition accuracy at equivalent bitrates compared to traditional methods such as Better Portable Graphics (BPG).
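One plausible way to write the joint objective described in this abstract is shown below. The abstract states only that a rate-distortion loss is optimized alongside a task-specific loss with two trade-off hyperparameters; the exact form, the placement of the weights, and the symbols $R$, $D$, $\mathcal{L}_{\text{task}}$, $\lambda$, and $\gamma$ are assumptions made for illustration.

```latex
\mathcal{L}_{\text{total}}
  = \underbrace{R}_{\text{bitrate}}
  + \lambda \, \underbrace{D(x, \hat{x})}_{\text{distortion}}
  + \gamma \, \underbrace{\mathcal{L}_{\text{task}}\bigl(f(\hat{x}), y\bigr)}_{\text{recognition}}
```

Here $x$ is the input image, $\hat{x}$ its reconstruction, $f$ the recognition network, and $y$ the label. Under this assumed form, raising $\lambda$ favors visual fidelity and raising $\gamma$ favors recognition accuracy, each at the cost of additional bitrate.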
