Maxime Girard

Memory-Optimized Once-For-All Network

Sep 05, 2024

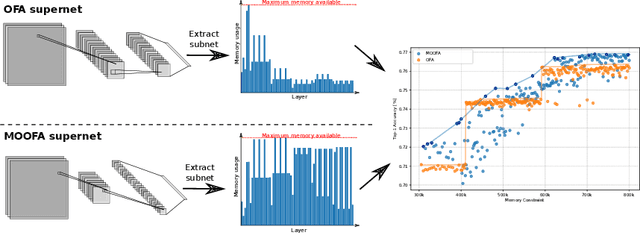

Abstract: Deploying Deep Neural Networks (DNNs) on different hardware platforms is challenging due to varying resource constraints. Besides handcrafted approaches that aim to make deep models hardware-friendly, Neural Architecture Search is emerging as a toolbox for crafting more efficient DNNs without sacrificing performance. Among these methods, the Once-For-All (OFA) approach allows well-performing sub-networks to be sampled from a single supernet, which brings clear advantages in terms of computation. However, OFA does not fully utilize the memory capacity of the target device, focusing instead on limiting the maximum memory usage per layer. This leaves potential gains in model generalizability unexploited. In this paper, we introduce a Memory-Optimized OFA (MOOFA) supernet, designed to enhance DNN deployment on resource-limited devices by maximizing memory usage (and, for instance, feature diversity) across different configurations. Tested on ImageNet, our MOOFA supernet demonstrates improvements in memory exploitation and model accuracy compared to the original OFA supernet. Our code is available at https://github.com/MaximeGirard/memory-optimized-once-for-all.

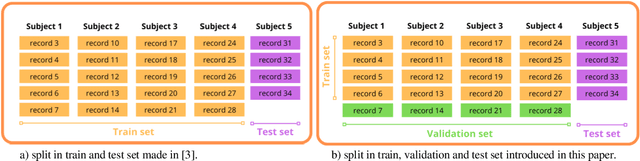

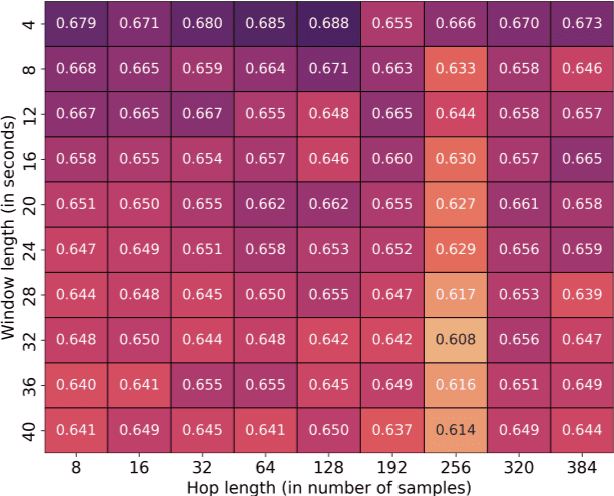

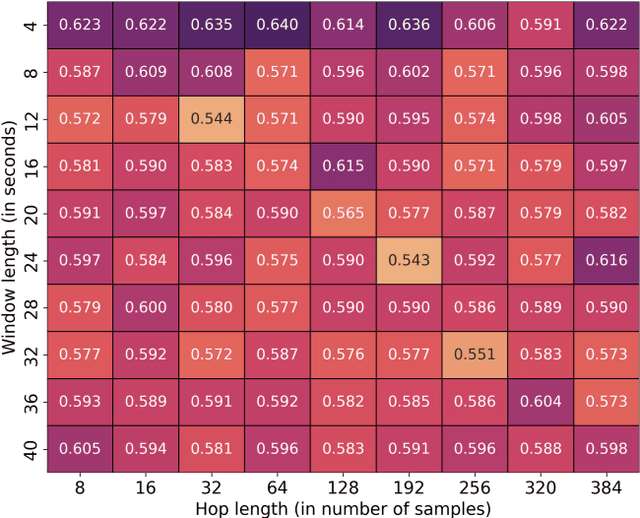

Enhanced EEG-Based Mental State Classification: A novel approach to eliminate data leakage and improve training optimization for Machine Learning

Dec 14, 2023

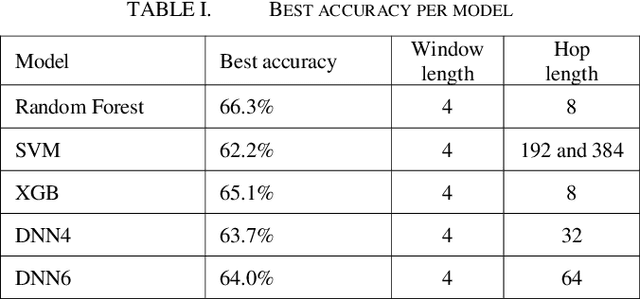

Abstract: In this paper, we explore prior research and introduce a new methodology for classifying mental state levels from EEG signals using machine learning (ML). Our approach optimizes training by introducing a validation set and a refined standardization process, which rectify the data leakage observed in preceding studies. Furthermore, we establish novel benchmark figures for various models, including random forests and deep neural networks.
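
As an illustration of the kind of leakage fix this abstract describes, here is a minimal sketch, not the paper's actual pipeline. It assumes leakage arises when standardization statistics are computed over the whole dataset, and it avoids that by computing the mean and standard deviation on the training split only. The function names, split fractions, and synthetic data are all hypothetical.

```python
import numpy as np

def split_indices(n_samples, val_frac=0.15, test_frac=0.15, seed=0):
    """Shuffle sample indices and cut them into train/validation/test parts.

    Hypothetical helper: for real EEG data one would likely split by subject
    or recording session instead, so that windows from one recording do not
    end up in several splits.
    """
    rng = np.random.default_rng(seed)
    order = rng.permutation(n_samples)
    n_test = int(round(n_samples * test_frac))
    n_val = int(round(n_samples * val_frac))
    test_idx = order[:n_test]
    val_idx = order[n_test:n_test + n_val]
    train_idx = order[n_test + n_val:]
    return train_idx, val_idx, test_idx

def standardize_from_train(X_train, *others):
    """Standardize every split with statistics computed on X_train only."""
    mean = X_train.mean(axis=0)
    std = X_train.std(axis=0)
    std[std == 0] = 1.0  # guard against constant features
    return tuple((X - mean) / std for X in (X_train, *others))

# Synthetic stand-in for extracted EEG features (assumption: 200 samples, 8 features).
rng = np.random.default_rng(42)
X = rng.normal(loc=5.0, scale=3.0, size=(200, 8))

train_idx, val_idx, test_idx = split_indices(len(X))
X_train, X_val, X_test = standardize_from_train(X[train_idx], X[val_idx], X[test_idx])
```

The validation split can then be used for model selection and early stopping, leaving the test split untouched until the final evaluation.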

Estimating the energy requirements for long term memory formation

Jan 16, 2023

Abstract: Brains consume metabolic energy to process information, but also to store memories. The energy required for memory formation can be substantial: for instance, in fruit flies, memory formation leads to a shorter lifespan upon subsequent starvation (Mery and Kawecki, 2005). Here we estimate that the energy required corresponds to about 10 mJ/bit and compare this to biophysical estimates as well as energy requirements in computer hardware. We conclude that biological memory storage is expensive, but the reason for this cost is not known.
