Maulikkumar Dhameliya

Deep Learning based Multi-Modal Sensing for Tracking and State Extraction of Small Quadcopters

Dec 08, 2020

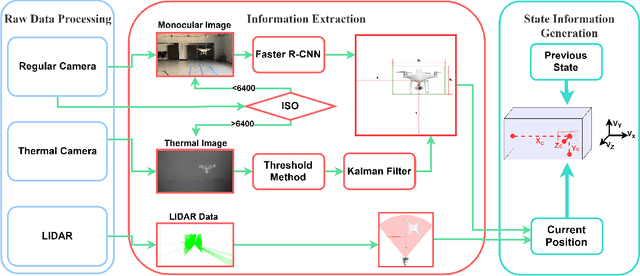

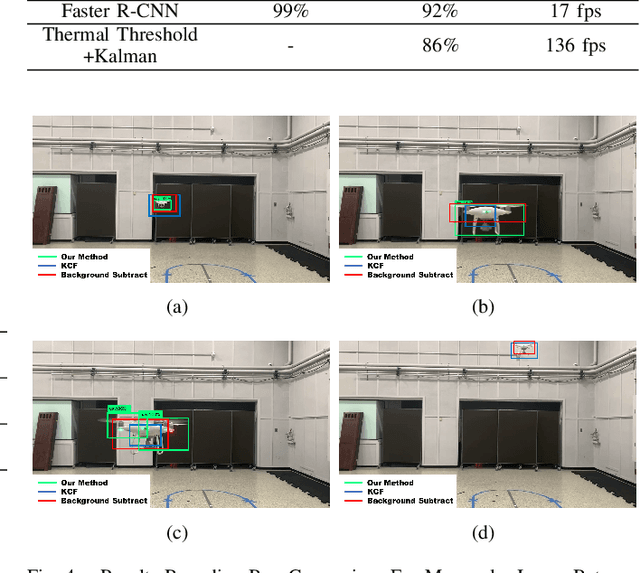

Abstract: This paper proposes a multi-sensor approach to detect, track, and localize a quadcopter unmanned aerial vehicle (UAV). Specifically, a pipeline is developed to process monocular RGB and thermal video, captured from a fixed platform, to detect and track the UAV within the cameras' field of view (FoV). A 2D planar lidar is then used to convert pixel data to actual distance measurements, which enables localization of the UAV in global coordinates. The monocular RGB data is processed by a deep learning-based object detection method that computes an initial bounding box for the UAV. The thermal data is processed by a thresholding and Kalman filter approach that detects and tracks the bounding box. Training and testing data are prepared by combining a set of original experiments conducted in a motion capture environment with publicly available UAV image data. The new pipeline compares favorably to existing methods and demonstrates promising tracking and localization performance in sample experiments.
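As a rough illustration of the thermal branch described in the abstract, the sketch below thresholds a frame to find a hot region and smooths its center with a Kalman filter. It is a minimal sketch and not the paper's implementation: the constant-velocity motion model, the noise values `q` and `r`, the fixed threshold, and the names `hot_blob_bbox`, `ConstantVelocityKF`, and `track` are all assumptions made for illustration.

```python
import numpy as np


def hot_blob_bbox(frame, thresh):
    """Bounding box (x_min, y_min, x_max, y_max) of pixels above thresh, or None."""
    ys, xs = np.nonzero(frame > thresh)
    if xs.size == 0:
        return None
    return int(xs.min()), int(ys.min()), int(xs.max()), int(ys.max())


class ConstantVelocityKF:
    """Kalman filter over state [cx, cy, vx, vy]; model and noise values are assumed."""

    def __init__(self, cx, cy, dt=1.0, q=1e-2, r=1.0):
        self.x = np.array([cx, cy, 0.0, 0.0])
        self.P = np.eye(4) * 10.0           # initial state uncertainty
        self.F = np.eye(4)                  # constant-velocity transition
        self.F[0, 2] = dt
        self.F[1, 3] = dt
        self.H = np.zeros((2, 4))           # only the center position is measured
        self.H[0, 0] = 1.0
        self.H[1, 1] = 1.0
        self.Q = np.eye(4) * q              # process noise
        self.R = np.eye(2) * r              # measurement noise

    def predict(self):
        self.x = self.F @ self.x
        self.P = self.F @ self.P @ self.F.T + self.Q
        return self.x[:2]

    def update(self, z):
        innovation = np.asarray(z, dtype=float) - self.H @ self.x
        S = self.H @ self.P @ self.H.T + self.R
        K = self.P @ self.H.T @ np.linalg.inv(S)
        self.x = self.x + K @ innovation
        self.P = (np.eye(4) - K @ self.H) @ self.P
        return self.x[:2]


def track(frames, thresh):
    """Return one estimated (cx, cy) per frame, or None before the first detection."""
    kf = None
    centers = []
    for frame in frames:
        bbox = hot_blob_bbox(frame, thresh)
        center = None
        if bbox is not None:
            center = ((bbox[0] + bbox[2]) / 2.0, (bbox[1] + bbox[3]) / 2.0)
        if kf is None:
            if center is not None:
                kf = ConstantVelocityKF(*center)  # start the track at first detection
            centers.append(center)
            continue
        est = kf.predict()
        if center is not None:
            est = kf.update(center)               # correct the prediction
        centers.append((float(est[0]), float(est[1])))
    return centers
```

When a frame yields no detection, the filter's prediction stands in for the measurement, so the track can coast through short dropouts. A practical system would likely need a more robust detection step than a single global threshold.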
