Matthias Pesch

EAP4EMSIG -- Enhancing Event-Driven Microscopy for Microfluidic Single-Cell Analysis

Mar 30, 2025

Abstract: Microfluidic Live-Cell Imaging yields data on microbial cell factories. However, continuous acquisition is challenging as high-throughput experiments often lack real-time insights, delaying responses to stochastic events. We introduce three components in the Experiment Automation Pipeline for Event-Driven Microscopy to Smart Microfluidic Single-Cell Analysis: a fast, accurate Deep Learning autofocusing method predicting the focus offset, an evaluation of real-time segmentation methods, and a real-time data analysis dashboard. Our autofocusing achieves a Mean Absolute Error of 0.0226 µm with inference times below 50 ms. Among eleven Deep Learning segmentation methods, Cellpose 3 reached a Panoptic Quality of 93.58%, while a distance-based method is fastest (121 ms, Panoptic Quality 93.02%). All six Deep Learning Foundation Models were unsuitable for real-time segmentation.

EAP4EMSIG -- Experiment Automation Pipeline for Event-Driven Microscopy to Smart Microfluidic Single-Cells Analysis

Nov 06, 2024

Abstract: Microfluidic Live-Cell Imaging (MLCI) generates high-quality data that allows biotechnologists to study cellular growth dynamics in detail. However, obtaining these continuous data over extended periods is challenging, particularly in achieving accurate and consistent real-time event classification at the intersection of imaging and stochastic biology. To address this issue, we introduce the Experiment Automation Pipeline for Event-Driven Microscopy to Smart Microfluidic Single-Cells Analysis (EAP4EMSIG). In particular, we present initial zero-shot results from the real-time segmentation module of our approach. Our findings indicate that among four State-Of-The-Art (SOTA) segmentation methods evaluated, Omnipose delivers the highest Panoptic Quality (PQ) score of 0.9336, while Contour Proposal Network (CPN) achieves the fastest inference time of 185 ms with the second-highest PQ score of 0.8575. Furthermore, we observed that the vision foundation model Segment Anything is unsuitable for this particular use case.
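For readers unfamiliar with the Panoptic Quality metric quoted above, the following is a minimal sketch of how it is commonly computed for instance segmentation: predicted and ground-truth instances are matched when their IoU exceeds 0.5, and PQ is the sum of matched IoUs divided by TP + 0.5·FP + 0.5·FN. The function name `panoptic_quality`, the label-image convention (0 as background), and the toy arrays are assumptions for illustration; this is not the evaluation code used in the paper.

```python
import numpy as np

def panoptic_quality(gt, pred, iou_threshold=0.5):
    """Compute PQ for two integer label images (0 = background).

    Hypothetical helper for illustration; instances match when IoU > threshold.
    """
    gt_ids = [i for i in np.unique(gt) if i != 0]
    pred_ids = [i for i in np.unique(pred) if i != 0]
    matched_pred = set()
    iou_sum = 0.0
    tp = 0
    for g in gt_ids:
        g_mask = gt == g
        for p in pred_ids:
            if p in matched_pred:
                continue
            p_mask = pred == p
            inter = np.logical_and(g_mask, p_mask).sum()
            if inter == 0:
                continue
            union = np.logical_or(g_mask, p_mask).sum()
            iou = inter / union
            # With a threshold of 0.5 or more, each instance has at most one match.
            if iou > iou_threshold:
                iou_sum += iou
                tp += 1
                matched_pred.add(p)
                break
    fp = len(pred_ids) - tp  # predicted instances without a match
    fn = len(gt_ids) - tp    # ground-truth instances without a match
    denom = tp + 0.5 * fp + 0.5 * fn
    return iou_sum / denom if denom > 0 else 0.0

# Toy example: two ground-truth cells, one of which the prediction misses.
gt = np.zeros((4, 4), dtype=int)
gt[0:2, 0:2] = 1
gt[2:4, 2:4] = 2
pred = np.zeros((4, 4), dtype=int)
pred[0:2, 0:2] = 1
pq_perfect = panoptic_quality(gt, gt.copy())
pq_missed = panoptic_quality(gt, pred)
```

A PQ reported as a fraction (such as 0.9336) and one reported as a percentage (such as 93.58%) are on the same scale, differing only by a factor of 100.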

Baseline and Triangulation Geometry in a Standard Plenoptic Camera

Oct 09, 2020

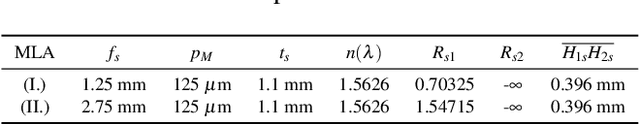

Abstract: In this paper, we demonstrate light field triangulation to determine depth distances and baselines in a plenoptic camera. Advances in micro lenses and image sensors have enabled plenoptic cameras to capture a scene from different viewpoints with sufficient spatial resolution. While object distances can be inferred from disparities in a stereo viewpoint pair using triangulation, this concept remains ambiguous when applied in the case of plenoptic cameras. We present a geometrical light field model allowing the triangulation to be applied to a plenoptic camera in order to predict object distances or specify baselines as desired. It is shown that distance estimates from our novel method match those of real objects placed in front of the camera. Additional benchmark tests with an optical design software further validate the model's accuracy with deviations of less than ±0.33 % for several main lens types and focus settings. A variety of applications in the automotive and robotics field can benefit from this estimation model.
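As background for the stereo triangulation the abstract refers to, here is a minimal sketch of the textbook relation for a rectified pinhole stereo pair, Z = f · B / d, where f is the focal length in pixels, B the baseline, and d the disparity in pixels. This is the conventional two-camera case only, not the plenoptic light field model proposed in the paper; the function name `stereo_depth` and the numeric values are assumptions chosen for illustration.

```python
def stereo_depth(focal_length_px, baseline_m, disparity_px):
    """Depth of a point from a rectified stereo pair (pinhole model).

    Hypothetical helper: Z = f * B / d, with f and d in pixels and B in metres.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point at finite depth")
    return focal_length_px * baseline_m / disparity_px

# Illustrative values: 1000 px focal length, 10 cm baseline, 20 px disparity.
depth_m = stereo_depth(1000.0, 0.1, 20.0)
```

Because depth is inversely proportional to disparity, a larger baseline gives larger disparities at a given distance, which is why knowing the effective baseline matters for distance estimation.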
