Vladan Velisavljevic

Baseline and Triangulation Geometry in a Standard Plenoptic Camera

Oct 09, 2020

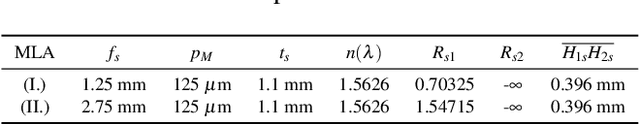

Abstract: In this paper, we demonstrate light field triangulation to determine depth distances and baselines in a plenoptic camera. Advances in micro lenses and image sensors have enabled plenoptic cameras to capture a scene from different viewpoints with sufficient spatial resolution. While object distances can be inferred from disparities in a stereo viewpoint pair using triangulation, this concept remains ambiguous when applied to plenoptic cameras. We present a geometrical light field model that allows triangulation to be applied to a plenoptic camera in order to predict object distances or specify baselines as desired. We show that distance estimates from our novel method match those of real objects placed in front of the camera. Additional benchmark tests with optical design software further validate the model's accuracy, with deviations of less than ±0.33 % for several main lens types and focus settings. A variety of applications in the automotive and robotics fields can benefit from this estimation model.
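For orientation, the stereo triangulation that the abstract refers to can be sketched with the textbook pinhole relation Z = B · f / d. This is only the conventional two-camera case, not the plenoptic light field model proposed in the paper. The function name, parameter names, and numeric values below are illustrative assumptions.

```python
def stereo_depth(baseline_m, focal_length_m, disparity_px, pixel_pitch_m):
    """Textbook pinhole stereo triangulation: Z = B * f / d.

    Assumes two parallel, rectified pinhole cameras (an assumption of
    this sketch, not the plenoptic geometry described in the paper).
    """
    # Convert the disparity from pixels to metres on the sensor.
    disparity_m = disparity_px * pixel_pitch_m
    if disparity_m == 0:
        # Zero disparity corresponds to a point at infinity.
        return float("inf")
    return baseline_m * focal_length_m / disparity_m


# Hypothetical values: 10 cm baseline, 50 mm focal length,
# 10 px disparity, 10 micrometre pixel pitch.
depth_m = stereo_depth(0.1, 0.05, 10, 1e-5)
print(f"estimated object distance: {depth_m:.2f} m")
```

The relation also shows why the baseline matters: for a fixed disparity resolution, a larger baseline extends the range over which depth can be resolved.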
