Matthias Kirsch

Visualisation of Medical Image Fusion and Translation for Accurate Diagnosis of High Grade Gliomas

Jan 30, 2020

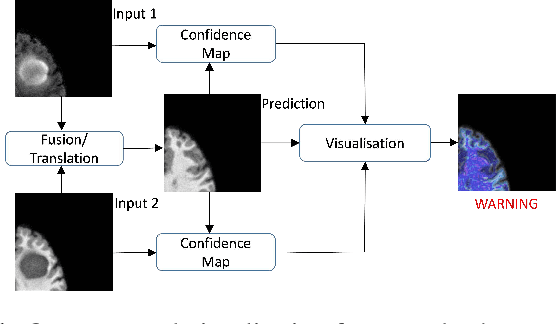

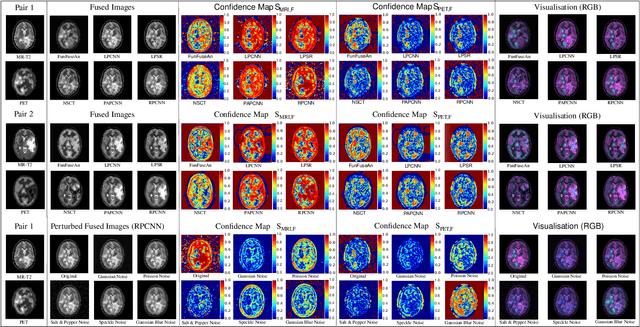

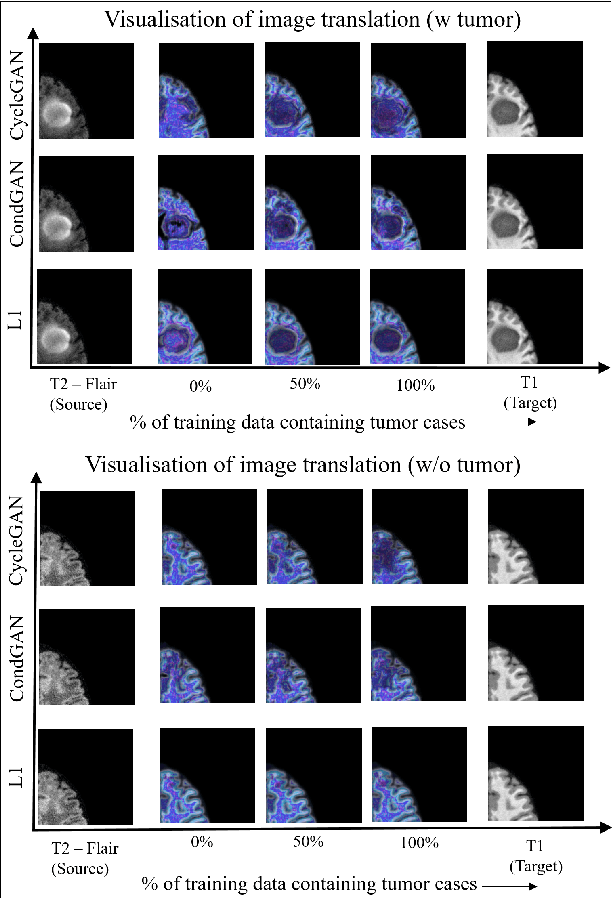

Abstract: Medical image fusion combines two or more modalities into a single view, while medical image translation synthesizes new images and assists in data augmentation. Together, these methods help speed up the diagnosis of high-grade malignant gliomas. However, their results can be untrustworthy, so neurosurgeons need a robust visualisation tool to verify the reliability of fusion and translation results before making pre-operative surgical decisions. In this paper, we propose a novel approach that computes a confidence heat map for a source-target image pair by estimating the information transferred from the source image to the target image, using the joint probability distribution of the two images. We evaluate several fusion and translation methods with our visualisation procedure and showcase its robustness in enabling neurosurgeons to make finer clinical decisions.
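The abstract does not spell out how the confidence heat map is computed, so the following is only an illustrative sketch of one way to turn a joint probability distribution into a per-pixel map. It assumes two equally sized grayscale images, estimates the joint distribution with a joint histogram, and scores each pixel by its pointwise mutual information. The function name `pmi_heat_map` and the `bins` parameter are hypothetical and not taken from the paper.

```python
import numpy as np


def pmi_heat_map(source, target, bins=32):
    """Per-pixel pointwise mutual information (PMI) between two images.

    Illustrative assumption: PMI stands in for "information transfer";
    the paper's actual estimator may differ.
    """
    source = np.asarray(source, dtype=float)
    target = np.asarray(target, dtype=float)
    if source.shape != target.shape:
        raise ValueError("source and target must have the same shape")

    s = source.ravel()
    t = target.ravel()

    # Joint histogram of intensity pairs, normalised to a joint probability.
    joint, s_edges, t_edges = np.histogram2d(s, t, bins=bins)
    joint = joint / joint.sum()

    # Marginal distributions of each image.
    p_s = joint.sum(axis=1)
    p_t = joint.sum(axis=0)

    # Bin index of every pixel, using the same edges as the histogram.
    s_idx = np.clip(np.digitize(s, s_edges[1:-1]), 0, bins - 1)
    t_idx = np.clip(np.digitize(t, t_edges[1:-1]), 0, bins - 1)

    # PMI = log( p(s, t) / (p(s) * p(t)) ), looked up per pixel.
    p_joint = joint[s_idx, t_idx]
    pmi = np.log(p_joint / (p_s[s_idx] * p_t[t_idx]))
    return pmi.reshape(source.shape)
```

Averaging the returned map over all pixels gives the histogram-based mutual information of the image pair, so high values mark regions where the target intensity is well predicted by the source intensity.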

Structural Similarity based Anatomical and Functional Brain Imaging Fusion

Aug 14, 2019

Abstract: Multimodal medical image fusion combines contrasting features from two or more input imaging modalities and represents the fused information in a single image. One of the pivotal clinical applications of medical image fusion is the merging of anatomical and functional modalities for fast diagnosis of malignant tissues. In this paper, we present a novel end-to-end unsupervised learning-based Convolutional Neural Network (CNN) for fusing the high- and low-frequency components of MRI-PET grayscale image pairs, publicly available from ADNI, using the Structural Similarity Index (SSIM) as the loss function during training. We then colour-code the fused image for visualization by quantifying the contribution of each input image in terms of the partial derivatives of the fused image. We find that our fusion and visualization approach yields better visual perception of the fused image, while also comparing favorably to previous methods across various quantitative assessment metrics.
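As a rough illustration of what an SSIM-based fusion loss can look like, the sketch below computes a single-window (global) SSIM with the standard constants and averages the dissimilarity of the fused image to each input. The abstract does not give the window size, the weighting of the two inputs, or the treatment of frequency components, so the equal weighting and the function names `global_ssim` and `ssim_fusion_loss` are assumptions made here for the example.

```python
import numpy as np


def global_ssim(x, y, data_range=1.0, k1=0.01, k2=0.03):
    """SSIM computed over the whole image as a single window."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)

    # Stabilising constants from the standard SSIM definition.
    c1 = (k1 * data_range) ** 2
    c2 = (k2 * data_range) ** 2

    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = ((x - mu_x) * (y - mu_y)).mean()

    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator


def ssim_fusion_loss(fused, mri, pet):
    """Assumed loss: 1 minus the mean SSIM of the fused image to each input."""
    return 1.0 - 0.5 * (global_ssim(fused, mri) + global_ssim(fused, pet))
```

The loss is zero when the fused image is identical to both inputs and grows as structural similarity to either input drops. A training setup would need a differentiable, windowed version of the same formula inside a deep learning framework.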
