Matthew Ellis

In Situ Framework for Coupling Simulation and Machine Learning with Application to CFD

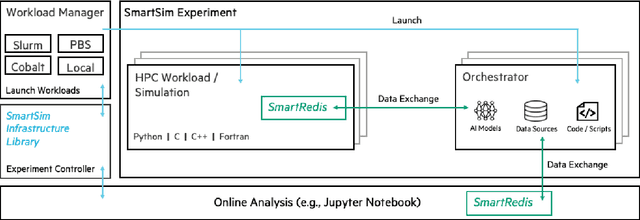

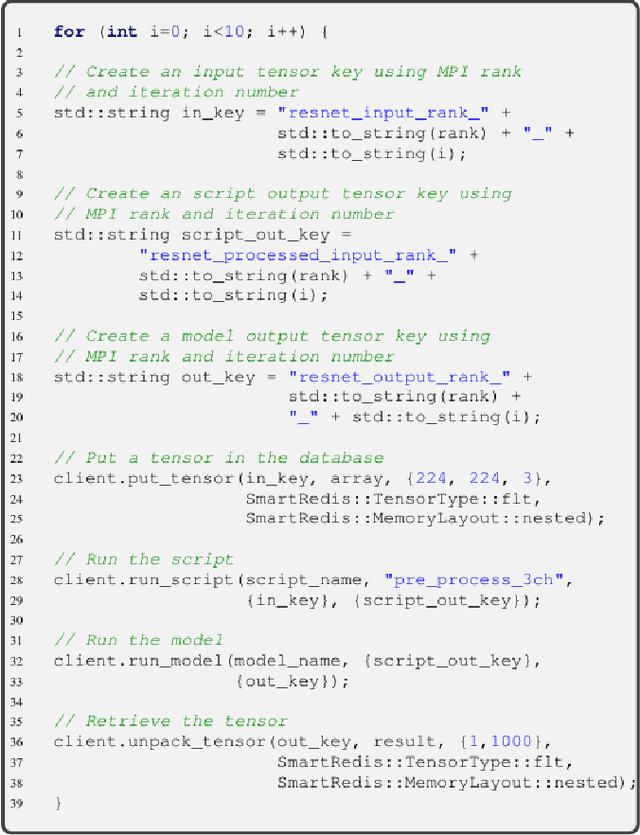

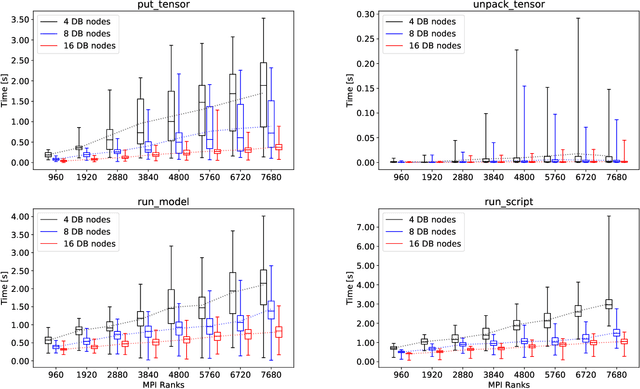

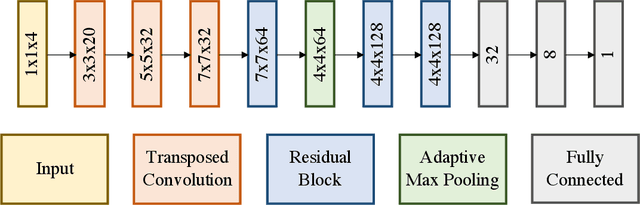

Jun 22, 2023Abstract:Recent years have seen many successful applications of machine learning (ML) to facilitate fluid dynamic computations. As simulations grow, generating new training datasets for traditional offline learning creates I/O and storage bottlenecks. Additionally, performing inference at runtime requires non-trivial coupling of ML framework libraries with simulation codes. This work offers a solution to both limitations by simplifying this coupling and enabling in situ training and inference workflows on heterogeneous clusters. Leveraging SmartSim, the presented framework deploys a database to store data and ML models in memory, thus circumventing the file system. On the Polaris supercomputer, we demonstrate perfect scaling efficiency to the full machine size of the data transfer and inference costs thanks to a novel co-located deployment of the database. Moreover, we train an autoencoder in situ from a turbulent flow simulation, showing that the framework overhead is negligible relative to a solver time step and training epoch.

Using Machine Learning at Scale in HPC Simulations with SmartSim: An Application to Ocean Climate Modeling

Apr 13, 2021

Abstract:We demonstrate the first climate-scale, numerical ocean simulations improved through distributed, online inference of Deep Neural Networks (DNN) using SmartSim. SmartSim is a library dedicated to enabling online analysis and Machine Learning (ML) for traditional HPC simulations. In this paper, we detail the SmartSim architecture and provide benchmarks including online inference with a shared ML model on heterogeneous HPC systems. We demonstrate the capability of SmartSim by using it to run a 12-member ensemble of global-scale, high-resolution ocean simulations, each spanning 19 compute nodes, all communicating with the same ML architecture at each simulation timestep. In total, 970 billion inferences are collectively served by running the ensemble for a total of 120 simulated years. Finally, we show our solution is stable over the full duration of the model integrations, and that the inclusion of machine learning has minimal impact on the simulation runtimes.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge