Matteo Tortora

Context-Gated Cross-Modal Perception with Visual Mamba for PET-CT Lung Tumor Segmentation

Oct 31, 2025

Abstract: Accurate lung tumor segmentation is vital for improving diagnosis and treatment planning, and effectively combining anatomical and functional information from PET and CT remains a major challenge. In this study, we propose vMambaX, a lightweight multimodal framework integrating PET and CT scan images through a Context-Gated Cross-Modal Perception Module (CGM). Built on the Visual Mamba architecture, vMambaX adaptively enhances inter-modality feature interaction, emphasizing informative regions while suppressing noise. Evaluated on the PCLT20K dataset, the model outperforms baseline models while maintaining lower computational complexity. These results highlight the effectiveness of adaptive cross-modal gating for multimodal tumor segmentation and demonstrate the potential of vMambaX as an efficient and scalable framework for advanced lung cancer analysis. The code is available at https://github.com/arco-group/vMambaX.

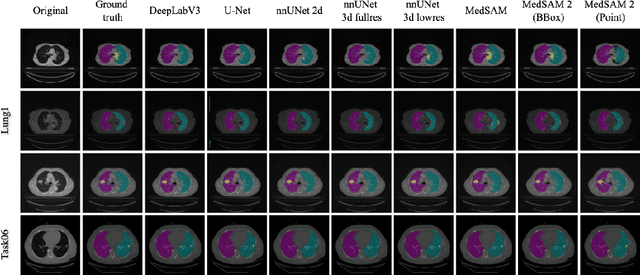

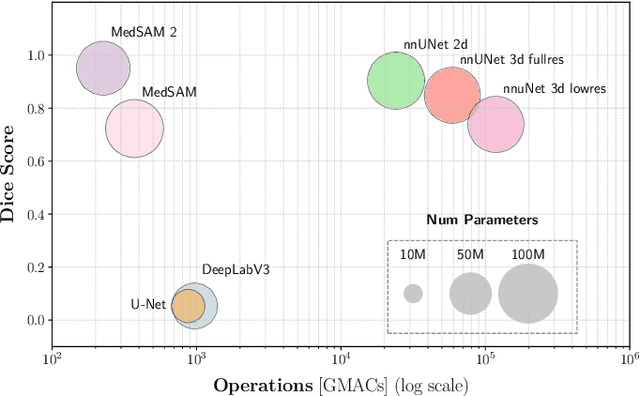

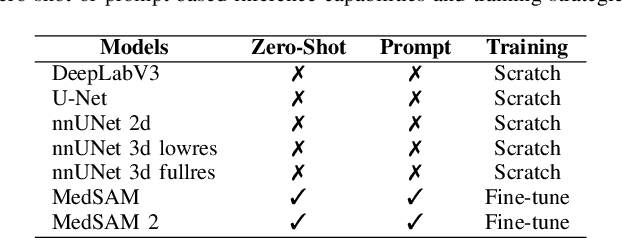

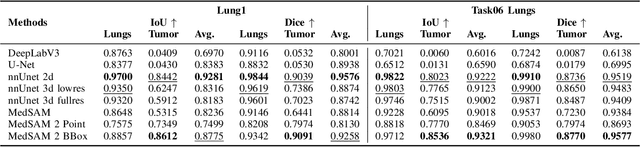

Can Foundation Models Really Segment Tumors? A Benchmarking Odyssey in Lung CT Imaging

May 02, 2025

Abstract: Accurate lung tumor segmentation is crucial for improving diagnosis, treatment planning, and patient outcomes in oncology. However, the complexity of tumor morphology, size, and location poses significant challenges for automated segmentation. This study presents a comprehensive benchmarking analysis of deep learning-based segmentation models, comparing traditional architectures such as U-Net and DeepLabV3, self-configuring models like nnUNet, and foundation models like MedSAM and MedSAM 2. Evaluating performance across two lung tumor segmentation datasets, we assess segmentation accuracy and computational efficiency under various learning paradigms, including few-shot learning and fine-tuning. The results reveal that while traditional models struggle with tumor delineation, foundation models, particularly MedSAM 2, outperform them in both accuracy and computational efficiency. These findings underscore the potential of foundation models for lung tumor segmentation, highlighting their applicability in improving clinical workflows and patient outcomes.

MATNet: Multi-Level Fusion and Self-Attention Transformer-Based Model for Multivariate Multi-Step Day-Ahead PV Generation Forecasting

Jun 17, 2023

Abstract: The integration of renewable energy sources (RES) into modern power systems has become increasingly important due to climate change and macroeconomic and geopolitical instability. Among the RES, photovoltaic (PV) energy is rapidly emerging as one of the world's most promising. However, its widespread adoption poses challenges related to its inherently uncertain nature that can lead to imbalances in the electrical system. Therefore, accurate forecasting of PV production can help resolve these uncertainties and facilitate the integration of PV into modern power systems. Currently, PV forecasting methods can be divided into two main categories: physics-based and data-based strategies, with AI-based models providing state-of-the-art performance in PV power forecasting. However, while these AI-based models can capture complex patterns and relationships in the data, they ignore the underlying physical prior knowledge of the phenomenon. Therefore, we propose MATNet, a novel self-attention transformer-based architecture for multivariate multi-step day-ahead PV power generation forecasting. It consists of a hybrid approach that combines the AI paradigm with the prior physical knowledge of PV power generation of physics-based methods. The model is fed with historical PV data and historical and forecast weather data through a multi-level joint fusion approach. The effectiveness of the proposed model is evaluated using the Ausgrid benchmark dataset with different regression performance metrics. The results show that our proposed architecture significantly outperforms the current state-of-the-art methods with an RMSE equal to 0.0460. These findings demonstrate the potential of MATNet in improving forecasting accuracy and suggest that it could be a promising solution to facilitate the integration of PV energy into the power grid.

RadioPathomics: Multimodal Learning in Non-Small Cell Lung Cancer for Adaptive Radiotherapy

Apr 26, 2022

Abstract: The current cancer treatment practice collects multimodal data, such as radiology images, histopathology slides, genomics and clinical data. The importance of these data sources taken individually has fostered the recent rise of radiomics and pathomics, i.e. the extraction of quantitative features from radiology and histopathology images routinely collected to predict clinical outcomes or to guide clinical decisions using artificial intelligence algorithms. Nevertheless, how to combine them into a single multimodal framework is still an open issue. In this work we therefore develop a multimodal late fusion approach that combines hand-crafted features computed from radiomics, pathomics and clinical data to predict radiation therapy treatment outcomes for non-small-cell lung cancer patients. Within this context, we investigate eight different late fusion rules (i.e. product, maximum, minimum, mean, decision template, Dempster-Shafer, majority voting, and confidence rule) and two patient-wise aggregation rules leveraging the richness of information given by computed tomography images and whole-slide scans. The experiments in leave-one-patient-out cross-validation on an in-house cohort of 33 patients show that the proposed multimodal paradigm with an AUC equal to 90.9% outperforms each unimodal approach, suggesting that data integration can advance precision medicine. As a further contribution, we also compare the hand-crafted representations with features automatically computed by deep networks, and the late fusion paradigm with early fusion, another popular multimodal approach. In both cases, the experiments show that the proposed multimodal approach provides the best results.
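
To make the simpler late fusion rules named in this abstract concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not the authors' implementation: the function name `fuse`, the rule labels, and the probability values are all hypothetical, and the decision template, Dempster-Shafer, and confidence rules are not covered. Each modality is assumed to output one probability per class, and the fused scores are renormalized to sum to 1.

```python
from math import prod
from statistics import mean


def fuse(probabilities, rule="mean"):
    """Fuse per-modality class probabilities into one normalized vector.

    probabilities: list with one entry per modality; each entry is a list
    holding one probability per class. (Hypothetical helper for illustration.)
    """
    per_class = list(zip(*probabilities))  # regroup the scores by class
    if rule == "product":
        scores = [prod(p) for p in per_class]
    elif rule == "maximum":
        scores = [max(p) for p in per_class]
    elif rule == "minimum":
        scores = [min(p) for p in per_class]
    elif rule == "mean":
        scores = [mean(p) for p in per_class]
    elif rule == "majority":
        # Each modality votes for its most probable class.
        votes = [max(range(len(m)), key=m.__getitem__) for m in probabilities]
        scores = [votes.count(c) for c in range(len(per_class))]
    else:
        raise ValueError(f"unknown fusion rule: {rule}")
    total = sum(scores)
    if total == 0:  # e.g. product rule where every class received a zero
        return [1 / len(scores)] * len(scores)
    return [s / total for s in scores]


# Illustrative (made-up) outputs of three unimodal classifiers for two classes.
radiomics = [0.7, 0.3]
pathomics = [0.4, 0.6]
clinical = [0.8, 0.2]
modalities = [radiomics, pathomics, clinical]

for rule in ("product", "maximum", "minimum", "mean", "majority"):
    print(rule, [round(s, 3) for s in fuse(modalities, rule)])
```

In this toy example the pathomics classifier disagrees with the other two, yet every rule shown still favors the first class; the rules differ mainly in how strongly a single dissenting or overconfident modality can shift the fused score.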
