Matteo Mancini

Lossy compression of multidimensional medical images using sinusoidal activation networks: an evaluation study

Aug 03, 2022

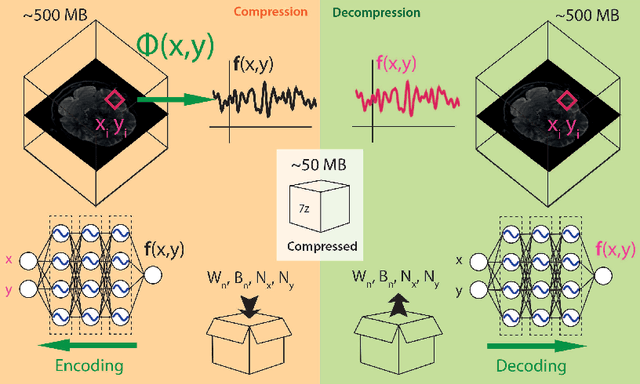

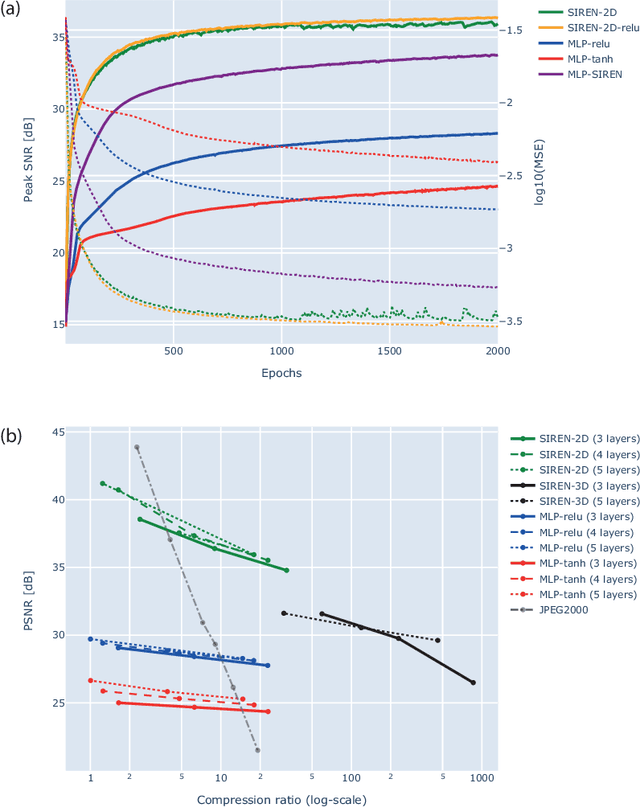

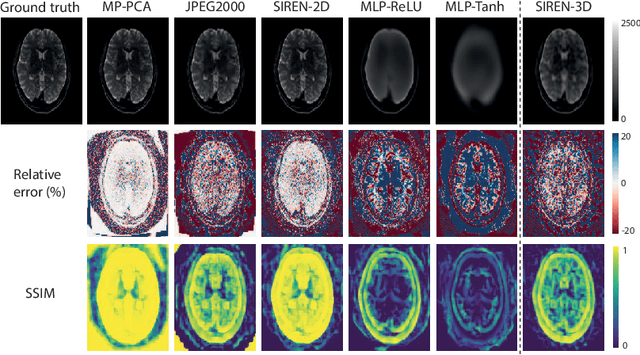

Abstract: In this work, we evaluate how neural networks with periodic activation functions can be leveraged to reliably compress large multidimensional medical image datasets, with proof-of-concept application to 4D diffusion-weighted MRI (dMRI). In the medical imaging landscape, multidimensional MRI is a key area of research for developing biomarkers that are both sensitive and specific to the underlying tissue microstructure. However, the high-dimensional nature of these data makes them difficult and costly to store and share, requiring appropriate algorithms able to represent the information in a low-dimensional space. Recent theoretical developments in deep learning have shown how periodic activation functions are a powerful tool for implicit neural representation of images and can be used for compression of 2D images. Here we extend this approach to 4D images and show how any given 4D dMRI dataset can be accurately represented through the parameters of a sinusoidal activation network, achieving a data compression rate about 10 times higher than the standard DEFLATE algorithm. Our results show that the proposed approach outperforms benchmark ReLU and Tanh activation perceptron architectures in terms of mean squared error, peak signal-to-noise ratio and structural similarity index. Subsequent analyses using the tensor and spherical harmonics representations demonstrate that the proposed lossy compression accurately reproduces the characteristics of the original data, leading to relative errors about 5 to 10 times lower than the benchmark JPEG2000 lossy compression and similar to standard pre-processing steps such as MP-PCA denoising, suggesting a loss of information within the currently accepted levels for clinical application.
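To make the idea of representing a 4D volume through network parameters concrete, the following is a minimal NumPy sketch, not the authors' implementation. It builds a small sine-activated perceptron that maps normalised 4D coordinates to an intensity, counts its parameters against the number of voxels, and defines a PSNR helper. The layer sizes, the frequency scale `omega0 = 30`, the initialisation bounds (those commonly used with sinusoidal networks) and the toy volume shape are all assumptions made for illustration; training is omitted.

```python
import numpy as np

def init_siren(layer_sizes, omega0=30.0, seed=0):
    """Random weights for a sine-activated MLP (assumed init scheme)."""
    rng = np.random.default_rng(seed)
    params = []
    for i, (n_in, n_out) in enumerate(zip(layer_sizes[:-1], layer_sizes[1:])):
        # First layer: U(-1/n_in, 1/n_in); later layers: scaled by 1/omega0.
        bound = 1.0 / n_in if i == 0 else np.sqrt(6.0 / n_in) / omega0
        W = rng.uniform(-bound, bound, size=(n_in, n_out))
        b = np.zeros(n_out)
        params.append((W, b))
    return params

def siren_forward(params, coords, omega0=30.0):
    """Map (N, 4) coordinates to (N, 1) intensities."""
    h = coords
    for W, b in params[:-1]:
        h = np.sin(omega0 * (h @ W + b))  # periodic activation
    W, b = params[-1]
    return h @ W + b  # linear output layer

def n_parameters(params):
    """Total number of stored weights and biases."""
    return sum(W.size + b.size for W, b in params)

def psnr(reference, estimate):
    """Peak signal-to-noise ratio in dB, using the reference data range."""
    mse = np.mean((reference - estimate) ** 2)
    data_range = reference.max() - reference.min()
    return 10.0 * np.log10(data_range ** 2 / mse)

# Toy 4D grid (x, y, z, diffusion volume); the shape is illustrative only.
shape = (8, 8, 8, 4)
axes = [np.linspace(-1.0, 1.0, n) for n in shape]
coords = np.stack(np.meshgrid(*axes, indexing="ij"), axis=-1).reshape(-1, 4)

params = init_siren([4, 32, 32, 1])
prediction = siren_forward(params, coords).reshape(shape)

# Voxels stored per network parameter: a crude proxy for compression ratio.
ratio = np.prod(shape) / n_parameters(params)
```

In this toy setting the ratio is small because the grid is tiny; the ratio grows with the size of the volume for a fixed network, which is where a parameter-based representation can pay off.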

Synth-by-Reg (SbR): Contrastive learning for synthesis-based registration of paired images

Jul 30, 2021

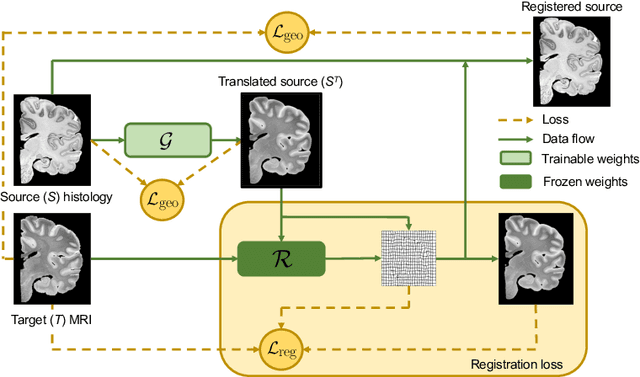

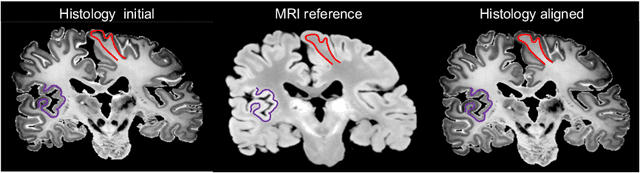

Abstract: Nonlinear inter-modality registration is often challenging due to the lack of objective functions that are good proxies for alignment. Here we propose a synthesis-by-registration method to convert this problem into an easier intra-modality task. We introduce a registration loss for weakly supervised image translation between domains that does not require perfectly aligned training data. This loss capitalises on a registration U-Net with frozen weights to drive a synthesis CNN towards the desired translation. We complement this loss with a structure-preserving constraint based on contrastive learning, which prevents blurring and content shifts due to overfitting. We apply this method to the registration of histological sections to MRI slices, a key step in 3D histology reconstruction. Results on two different public datasets show improvements over registration based on mutual information (13% reduction in landmark error) and synthesis-based algorithms such as CycleGAN (11% reduction), and are comparable to a registration CNN with label supervision.
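The abstract does not specify the form of the contrastive constraint, so the following is only a generic sketch of an InfoNCE-style loss, a common choice for contrastive learning, and should not be read as the paper's exact formulation. It assumes that row `i` of `query` holds features of a patch in the synthesised image and row `i` of `keys` holds features of the patch at the same location in the input image; other rows act as negatives. The function name and the temperature value are illustrative assumptions.

```python
import numpy as np

def info_nce(query, keys, temperature=0.07):
    """Generic InfoNCE loss: row i of `query` should match row i of `keys`."""
    # L2-normalise so the dot product is a cosine similarity.
    q = query / np.linalg.norm(query, axis=1, keepdims=True)
    k = keys / np.linalg.norm(keys, axis=1, keepdims=True)
    logits = q @ k.T / temperature
    # Subtract the row maximum for a numerically stable log-softmax.
    logits = logits - logits.max(axis=1, keepdims=True)
    log_prob = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    # Positives sit on the diagonal (same patch location in both images).
    return -np.mean(np.diag(log_prob))

rng = np.random.default_rng(0)
features = rng.normal(size=(16, 8))  # 16 hypothetical patches, 8-dim features

loss_aligned = info_nce(features, features)
loss_shifted = info_nce(features, np.roll(features, 1, axis=0))
```

When corresponding patches share features, the loss is low; when the correspondence is broken (here by shifting the rows), the loss increases, which is the behaviour that discourages content shifts between the input and the synthesised image.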
