Martina Lanzi

Learning the Roots of Visual Domain Shift

Jul 20, 2016

Figures and Tables:

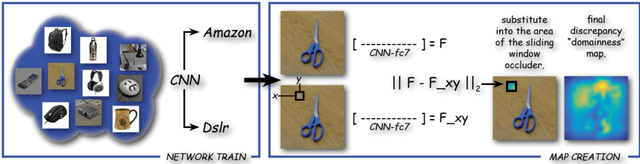

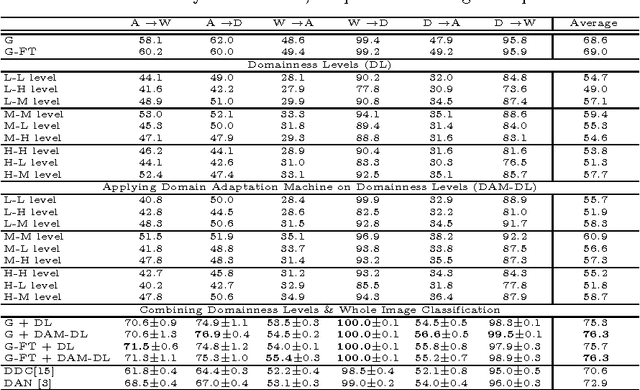

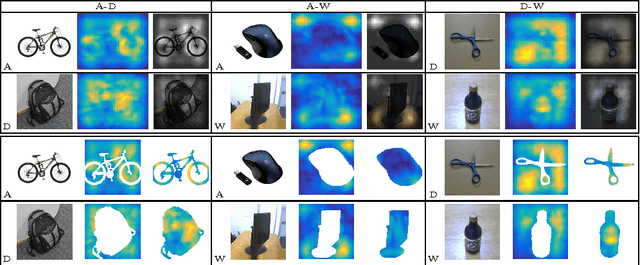

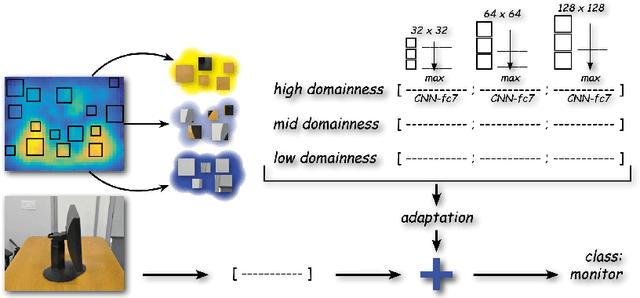

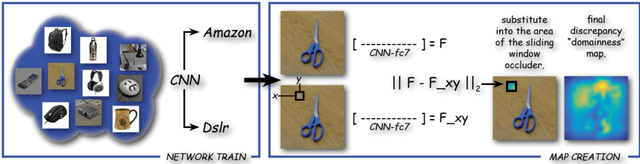

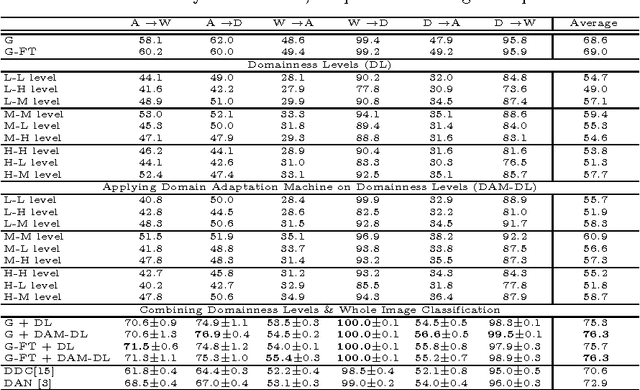

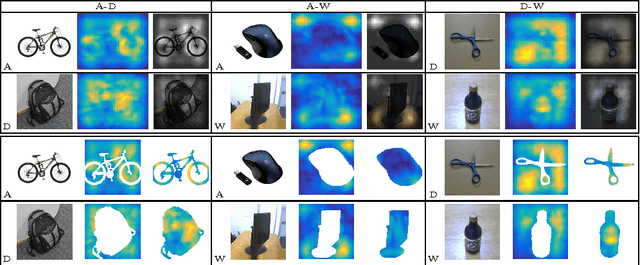

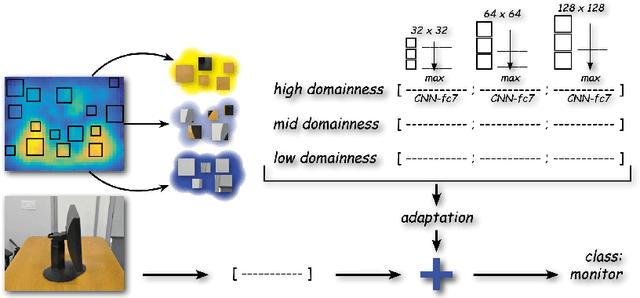

Abstract: In this paper we focus on the spatial nature of visual domain shift, attempting to learn where domain adaptation originates in each given image of the source and target sets. We borrow concepts and techniques from the CNN visualization literature, and learn domainness maps able to localize the degree of domain specificity in images. We derive from these maps features related to different domainness levels, and we show that by considering them as a preprocessing step for a domain adaptation algorithm, the final classification performance is strongly improved. Combined with the whole image representation, these features provide state-of-the-art results on the Office dataset.

* Extended Abstract
