Martin Rohrmeier

A Computational Cognitive Model for Processing Repetitions of Hierarchical Relations

Apr 14, 2025

Abstract: Patterns are fundamental to human cognition, enabling the recognition of structure and regularity across diverse domains. In this work, we focus on structural repeats, patterns that arise from the repetition of hierarchical relations within sequential data, and develop a candidate computational model of how humans detect and understand such structural repeats. Based on a weighted deduction system, our model infers the minimal generative process of a given sequence in the form of a Template program, a formalism that enriches the context-free grammar with repetition combinators. Such a representation efficiently encodes the repetition of sub-computations in a recursive manner. As a proof of concept, we demonstrate the expressiveness of our model on short sequences from music and action planning. The proposed model offers broader insights into the mental representations and cognitive mechanisms underlying human pattern recognition.

Recursive Bayesian Networks: Generalising and Unifying Probabilistic Context-Free Grammars and Dynamic Bayesian Networks

Dec 01, 2021

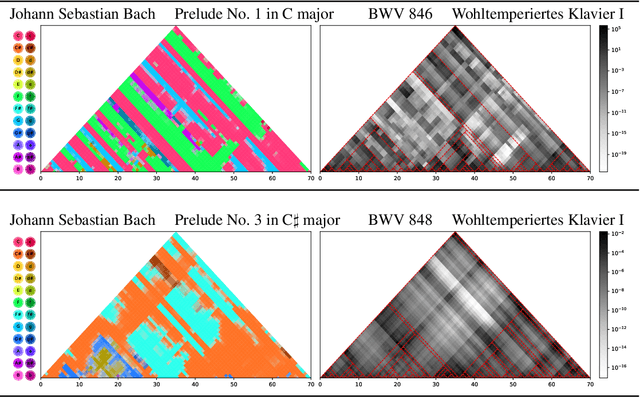

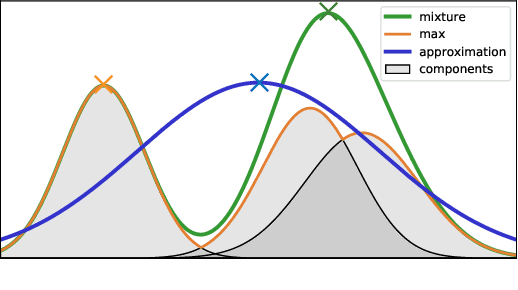

Abstract: Probabilistic context-free grammars (PCFGs) and dynamic Bayesian networks (DBNs) are widely used sequence models with complementary strengths and limitations. While PCFGs allow for nested hierarchical dependencies (tree structures), their latent variables (non-terminal symbols) have to be discrete. In contrast, DBNs allow for continuous latent variables, but the dependencies are strictly sequential (chain structure). Therefore, neither can be applied if the latent variables are assumed to be continuous and also to have a nested hierarchical dependency structure. In this paper, we present Recursive Bayesian Networks (RBNs), which generalise and unify PCFGs and DBNs, combining their strengths and containing both as special cases. RBNs define a joint distribution over tree-structured Bayesian networks with discrete or continuous latent variables. The main challenge lies in performing joint inference over the exponential number of possible structures and the continuous variables. We provide two solutions: 1) For arbitrary RBNs, we generalise inside and outside probabilities from PCFGs to the mixed discrete-continuous case, which allows for maximum posterior estimates of the continuous latent variables via gradient descent, while marginalising over network structures. 2) For Gaussian RBNs, we additionally derive an analytic approximation, allowing for robust parameter optimisation and Bayesian inference. The capacity and diverse applications of RBNs are illustrated on two examples: In a quantitative evaluation on synthetic data, we demonstrate and discuss the advantage of RBNs for segmentation and tree induction from noisy sequences, compared to change point detection and hierarchical clustering. In an application to musical data, we approach the unsolved problem of hierarchical music analysis from the raw note level and compare our results to expert annotations.
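
For readers unfamiliar with the inside probabilities mentioned in the abstract, the following is a minimal sketch of the classic inside algorithm for an ordinary discrete PCFG in Chomsky normal form. It is not the RBN generalisation described in the paper, which also handles continuous latent variables. The function name `inside_probability`, the dictionary-based rule encoding, and the toy grammar are illustrative assumptions.

```python
from collections import defaultdict

# Hypothetical toy grammar (an assumption for illustration only):
#   S -> S S  with probability 0.4
#   S -> 'a'  with probability 0.6
BINARY = {("S", "S", "S"): 0.4}   # (parent, left child, right child) -> probability
LEXICAL = {("S", "a"): 0.6}       # (parent, terminal) -> probability


def inside_probability(words, lexical, binary, start="S"):
    """Return the total probability of `words`, summed over all parse trees."""
    n = len(words)
    # beta[(i, j)][A] is the probability that non-terminal A generates words[i:j].
    beta = defaultdict(lambda: defaultdict(float))

    # Base case: spans of length one are produced by lexical rules.
    for i, word in enumerate(words):
        for (parent, terminal), prob in lexical.items():
            if terminal == word:
                beta[(i, i + 1)][parent] += prob

    # Recursive case: combine two adjacent sub-spans with a binary rule,
    # summing over all split points k (this marginalises over tree structures).
    for span in range(2, n + 1):
        for i in range(n - span + 1):
            j = i + span
            for k in range(i + 1, j):
                for (parent, left, right), prob in binary.items():
                    p_left = beta[(i, k)].get(left, 0.0)
                    p_right = beta[(k, j)].get(right, 0.0)
                    if p_left and p_right:
                        beta[(i, j)][parent] += prob * p_left * p_right

    return beta[(0, n)].get(start, 0.0)


if __name__ == "__main__":
    print(inside_probability(["a", "a", "a"], LEXICAL, BINARY))
```

With this toy grammar, the sequence `a a a` has two binary trees, each with probability 0.4² · 0.6³, so the function sums both. The outside probabilities are computed by an analogous top-down pass that is omitted here.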

Formal models of Structure Building in Music, Language and Animal Songs

Jan 16, 2019

Abstract: Human language, music and a variety of animal vocalisations constitute ways of sonic communication that exhibit remarkable structural complexity. While the complexities of language and possible parallels in animal communication have been discussed intensively, reflections on the complexity of music and animal song, and comparisons between them, are underrepresented. In some ways, music and animal songs are more comparable to each other than to language, as propositional semantics cannot be used as an indicator of communicative success or well-formedness, and notions of grammaticality are less easily defined. This review brings together accounts of the principles of structure building in language, music and animal song, relating them to the corresponding models in formal language theory, with a special focus on evaluating the benefits of using the Chomsky hierarchy (CH). We further discuss common misunderstandings and shortcomings concerning the CH, as well as extensions or augmentations of it that address some of these issues, and suggest ways to move beyond it.

A generalized parsing framework for Abstract Grammars

Jan 19, 2018

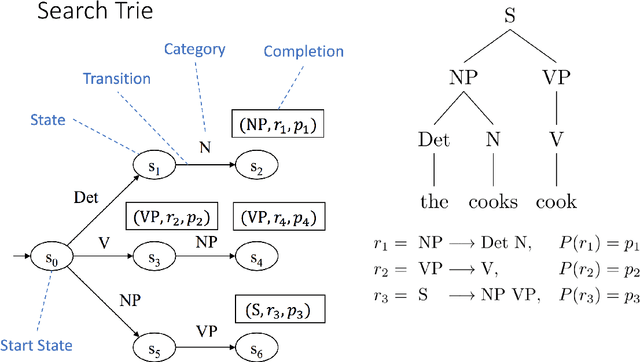

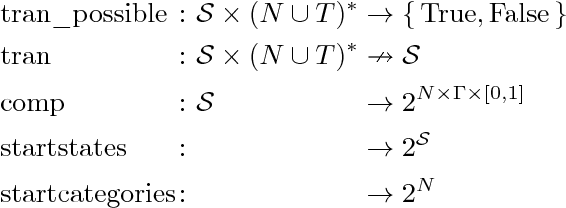

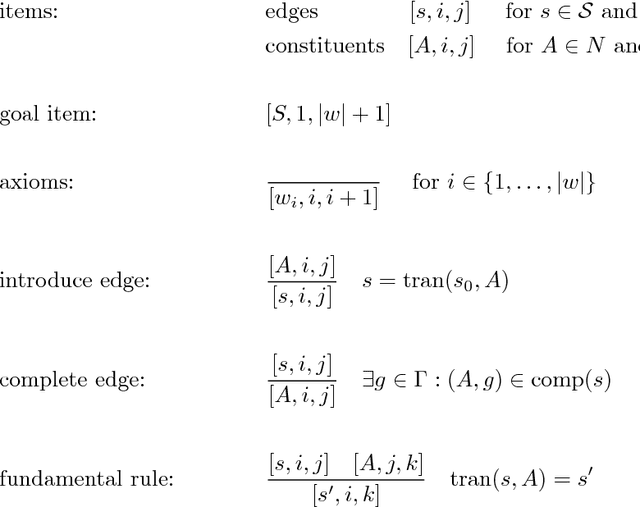

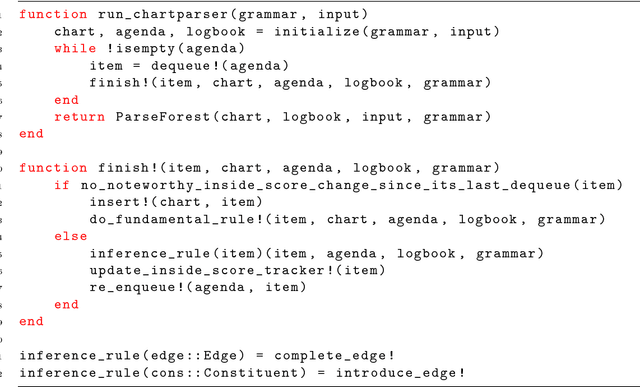

Abstract: This technical report presents a general framework for parsing a variety of grammar formalisms. We develop a grammar formalism, called an Abstract Grammar, which is general enough to represent grammars at many levels of the hierarchy, including Context-Free Grammars, Minimalist Grammars, and Generalized Context-Free Grammars (GCFGs). We then develop a single parsing framework that can parse grammars at least up to GCFGs on the hierarchy. Our parsing framework exposes a grammar interface, so that it can parse any particular grammar formalism that can be reduced to an Abstract Grammar.
