Martin Mienkina

Coherence Based Sound Speed Aberration Correction -- with clinical validation in obstetric ultrasound

Nov 25, 2024

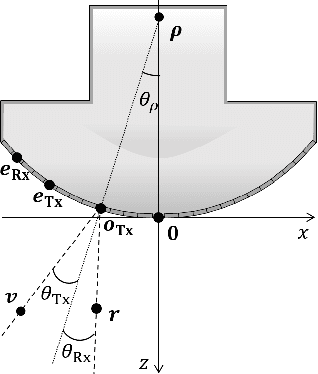

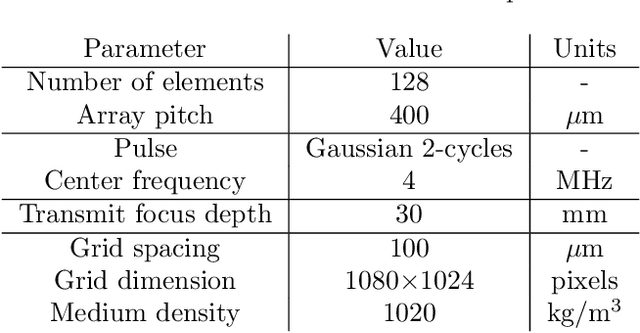

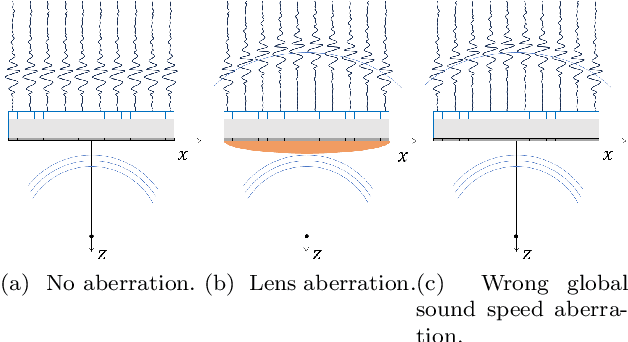

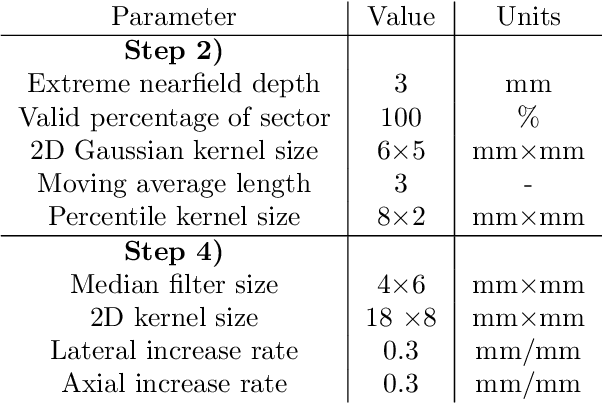

Abstract: The purpose of this work is to demonstrate a robust and clinically validated method for correcting sound speed aberrations in medical ultrasound. We propose a correction method that calculates focusing delays directly from the observed two-way distributed average sound speed. The method beamforms multiple coherence images and selects the sound speed that maximizes the coherence for each image pixel. The main contributions of this work are the direct estimation of aberration, without the ill-posed inversion of a local sound speed map, and the proposed processing of coherence images, which adapts to in vivo situations where low-coherence regions and off-axis scattering represent a challenge. The method is validated in vitro and in silico, showing high correlation with ground truth speed of sound maps. Further, the method is clinically validated by applying it to channel data recorded from 172 obstetric B-mode images, and 12 case examples are presented and discussed in detail. The data were recorded with a GE HealthCare Voluson Expert 22 system with an eM6c matrix array probe. The images were evaluated by three expert clinicians, and the results show that the corrected images were preferred over, or judged equivalent in quality to, the uncorrected images (1540 m/s) for 72.5% of the 172 images. In addition, a sharpness metric from digital photography is used to quantify image quality improvement. The increase in sharpness and the change in average sound speed are shown to be linearly correlated, with a Pearson correlation coefficient of 0.67.
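The per-pixel selection step described in the abstract can be illustrated with a minimal sketch. It assumes one coherence image has already been beamformed for each candidate sound speed; the function name `select_sound_speed`, the candidate speeds, and the coherence values are hypothetical and chosen only for illustration. The sketch covers only the final argmax and omits the paper's processing of the coherence images for low-coherence regions and off-axis scattering.

```python
def select_sound_speed(coherence_stack, speeds):
    """Return a map holding, for each pixel, the candidate speed with the highest coherence.

    coherence_stack[k][r][c] is the coherence at pixel (r, c) when beamforming
    with speeds[k]. All inputs here are illustrative, not values from the paper.
    """
    n_rows = len(coherence_stack[0])
    n_cols = len(coherence_stack[0][0])
    speed_map = [[0.0] * n_cols for _ in range(n_rows)]
    for r in range(n_rows):
        for c in range(n_cols):
            # Index of the candidate speed that maximizes coherence at this pixel.
            best = max(range(len(speeds)), key=lambda k: coherence_stack[k][r][c])
            speed_map[r][c] = speeds[best]
    return speed_map


# Hypothetical candidate speeds (m/s) and made-up 2x2 coherence images.
speeds = [1480.0, 1540.0, 1600.0]
coherence_stack = [
    [[0.2, 0.9], [0.4, 0.1]],  # beamformed at 1480 m/s
    [[0.8, 0.3], [0.5, 0.2]],  # beamformed at 1540 m/s
    [[0.1, 0.4], [0.7, 0.6]],  # beamformed at 1600 m/s
]
speed_map = select_sound_speed(coherence_stack, speeds)
print(speed_map)
```

In a real pipeline the resulting map would feed the calculation of focusing delays; that step depends on the array geometry and is not sketched here.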

Fast Medical Shape Reconstruction via Meta-learned Implicit Neural Representations

Sep 11, 2024

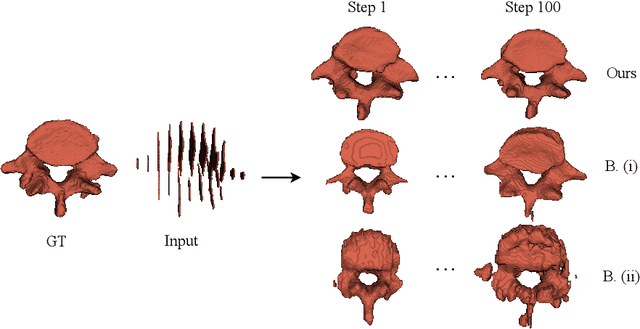

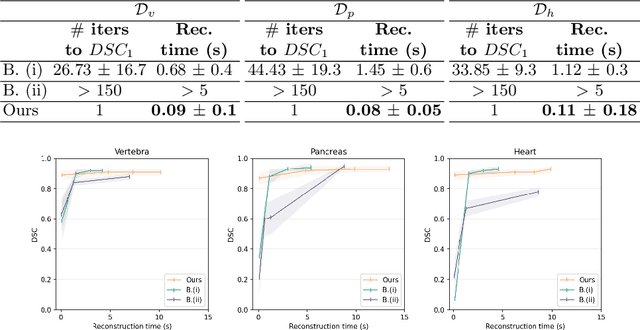

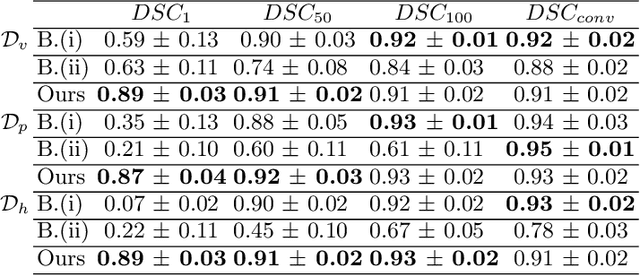

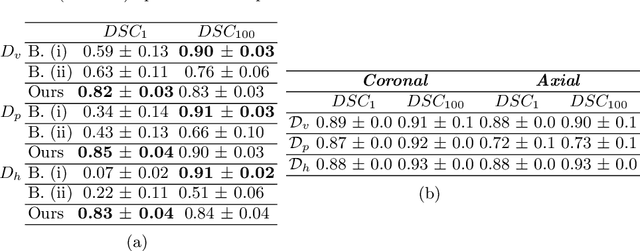

Abstract: Efficient and fast reconstruction of anatomical structures plays a crucial role in clinical practice. Minimizing retrieval and processing times not only has the potential to speed up response and decision-making in critical scenarios but also supports interactive surgical planning and navigation. Recent methods attempt to solve the medical shape reconstruction problem by utilizing implicit neural functions. However, their performance suffers in terms of generalization and computation time, a critical metric for real-time applications. To address these challenges, we propose to leverage meta-learning to improve the initialization of the network parameters, reducing inference time by an order of magnitude while maintaining high accuracy. We evaluate our approach on three public datasets covering different anatomical shapes and modalities, namely CT and MRI. Our experimental results show that our model can handle various input configurations, such as sparse slices with different orientations and spacings. Additionally, we demonstrate that our method transfers well, generalizing to shape domains unobserved at training time.
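The idea of meta-learning an initialization so that few adaptation steps are needed at inference time can be illustrated with a toy sketch. The abstract does not state which meta-learning algorithm is used, so this example substitutes Reptile, a simple first-order method, and a two-parameter linear model stands in for the implicit neural network. All names, task distributions, and hyperparameters are assumptions made for illustration.

```python
import random


def loss_and_grad(params, xs, ys):
    """Mean squared error of y = w*x + b and its gradient with respect to (w, b)."""
    w, b = params
    n = len(xs)
    errs = [w * x + b - y for x, y in zip(xs, ys)]
    loss = sum(e * e for e in errs) / n
    gw = 2 * sum(e * x for e, x in zip(errs, xs)) / n
    gb = 2 * sum(errs) / n
    return loss, (gw, gb)


def adapt(params, xs, ys, steps, lr=0.1):
    """Run a few gradient steps on one task, starting from the given parameters."""
    w, b = params
    for _ in range(steps):
        _, (gw, gb) = loss_and_grad((w, b), xs, ys)
        w, b = w - lr * gw, b - lr * gb
    return (w, b)


def sample_task(rng):
    """Toy task family: lines with slope near 3 and intercept near -1."""
    a = rng.uniform(2.5, 3.5)
    c = rng.uniform(-1.5, -0.5)
    xs = [rng.uniform(-1, 1) for _ in range(20)]
    ys = [a * x + c for x in xs]
    return xs, ys


def reptile(rng, meta_steps=500, inner_steps=5, meta_lr=0.1):
    """Reptile: nudge the shared initialization toward each task's adapted parameters."""
    theta = (0.0, 0.0)
    for _ in range(meta_steps):
        xs, ys = sample_task(rng)
        adapted = adapt(theta, xs, ys, inner_steps)
        theta = tuple(t + meta_lr * (a - t) for t, a in zip(theta, adapted))
    return theta


rng = random.Random(0)
meta_init = reptile(rng)

# On a new task, compare two adaptation steps from the meta-learned
# initialization against two steps from a zero initialization.
xs, ys = sample_task(rng)
loss_meta, _ = loss_and_grad(adapt(meta_init, xs, ys, steps=2), xs, ys)
loss_zero, _ = loss_and_grad(adapt((0.0, 0.0), xs, ys, steps=2), xs, ys)
print(meta_init, loss_meta, loss_zero)
```

Because the meta-learned initialization already sits near the center of the task family, the same small adaptation budget reaches a lower loss than starting from zero, which is the mechanism behind the reduced inference time the abstract reports.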
