Philip Matthias Winter

Fast Medical Shape Reconstruction via Meta-learned Implicit Neural Representations

Sep 11, 2024

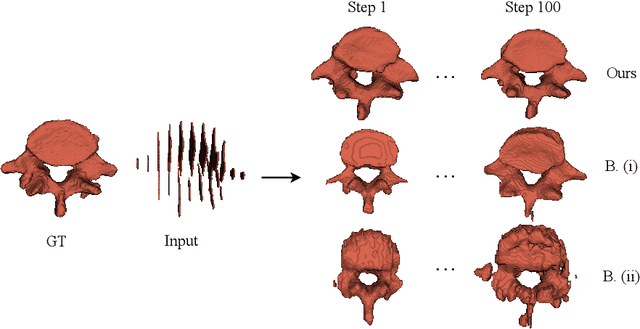

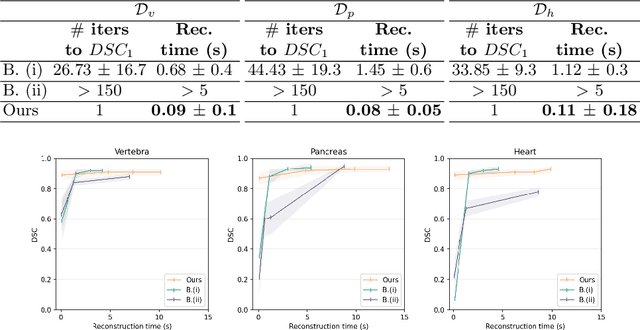

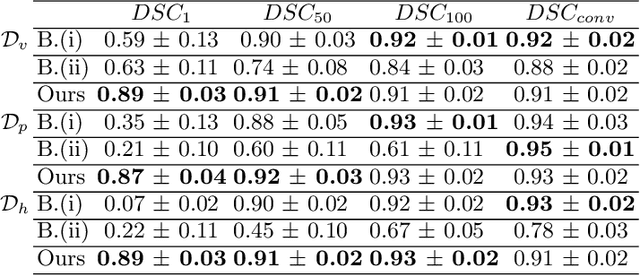

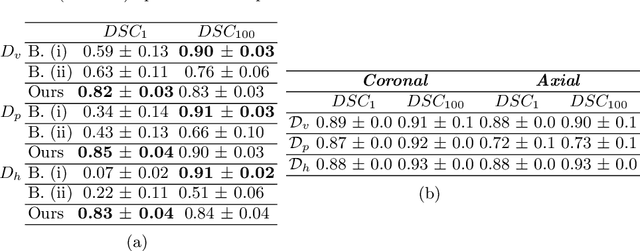

Abstract: Efficient and fast reconstruction of anatomical structures plays a crucial role in clinical practice. Minimizing retrieval and processing times can speed up response and decision-making in critical scenarios and also supports interactive surgical planning and navigation. Recent methods attempt to solve the medical shape reconstruction problem with implicit neural functions. However, their performance suffers in terms of generalization and computation time, the latter being a critical metric for real-time applications. To address these challenges, we propose to leverage meta-learning to improve the initialization of the network parameters, reducing inference time by an order of magnitude while maintaining high accuracy. We evaluate our approach on three public datasets covering different anatomical shapes and modalities, namely CT and MRI. Our experimental results show that our model can handle various input configurations, such as sparse slices with different orientations and spacings. Additionally, we demonstrate that our method transfers well to shape domains unobserved at training time.
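The idea of meta-learning an initialization so that only a few gradient steps are needed per new shape can be illustrated with a toy sketch. The abstract does not name the meta-learning algorithm, so the example below swaps in Reptile as an assumption, and a two-parameter linear model stands in for the implicit neural network. All function names (`sample_task`, `adapt`, `reptile`) and hyperparameters are hypothetical.

```python
import random

def loss_and_grad(params, data):
    """Mean squared error of y = w*x + b and its gradient."""
    w, b = params
    n = len(data)
    loss = gw = gb = 0.0
    for x, y in data:
        err = w * x + b - y
        loss += err * err / n
        gw += 2 * err * x / n
        gb += 2 * err / n
    return loss, (gw, gb)

def adapt(params, data, steps, lr=0.1):
    """Task-specific adaptation: a few plain gradient steps."""
    w, b = params
    for _ in range(steps):
        _, (gw, gb) = loss_and_grad((w, b), data)
        w, b = w - lr * gw, b - lr * gb
    return (w, b)

def sample_task(rng):
    """Toy task family (assumption): lines near y = 2x - 1."""
    w_t = 2.0 + rng.uniform(-0.3, 0.3)
    b_t = -1.0 + rng.uniform(-0.3, 0.3)
    xs = [rng.uniform(-1, 1) for _ in range(10)]
    return [(x, w_t * x + b_t) for x in xs]

def reptile(rng, meta_steps=200, inner_steps=5, meta_lr=0.2):
    """Reptile: move the shared init toward each task's adapted weights."""
    theta = (0.0, 0.0)
    for _ in range(meta_steps):
        data = sample_task(rng)
        phi = adapt(theta, data, inner_steps)
        theta = tuple(t + meta_lr * (p - t) for t, p in zip(theta, phi))
    return theta

rng = random.Random(0)
meta_init = reptile(rng)

# Compare two adaptation steps on an unseen task from both starting points.
new_task = sample_task(rng)
loss_meta, _ = loss_and_grad(adapt(meta_init, new_task, steps=2), new_task)
loss_zero, _ = loss_and_grad(adapt((0.0, 0.0), new_task, steps=2), new_task)
print(f"meta init: {loss_meta:.4f}, zero init: {loss_zero:.4f}")
```

With the same small step budget, the meta-learned starting point reaches a lower loss than the zero initialization on this toy family, which is the effect the abstract attributes to a better parameter initialization.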

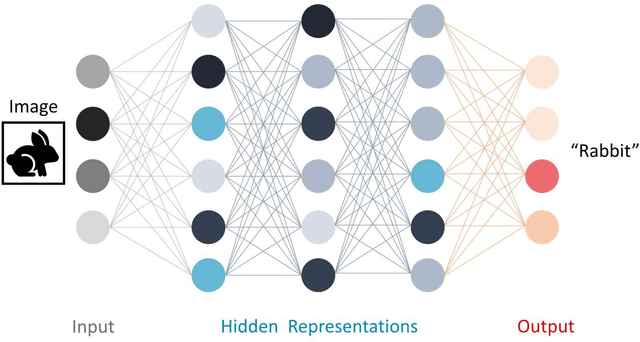

PARMESAN: Parameter-Free Memory Search and Transduction for Dense Prediction Tasks

Mar 18, 2024

Abstract: In this work we address flexibility in deep learning by means of transductive reasoning. For adaptation to new tasks or new data, existing methods typically involve tuning of learnable parameters or even complete re-training from scratch, rendering such approaches inflexible in practice. We argue that separating computation from memory by means of transduction can act as a stepping stone for solving these issues. We therefore propose PARMESAN (parameter-free memory search and transduction), a scalable transduction method which leverages a memory module for solving dense prediction tasks. At inference, hidden representations in memory are searched to find corresponding examples. In contrast to other methods, PARMESAN learns without any continuous training or fine-tuning of learnable parameters, simply by modifying the memory content. Our method is compatible with commonly used neural architectures and canonically transfers to 1D, 2D, and 3D grid-based data. We demonstrate the capabilities of our approach on complex tasks such as continual and few-shot learning. PARMESAN learns up to 370 times faster than common baselines while being on par in terms of predictive performance, knowledge retention, and data-efficiency.
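The principle of learning by modifying memory content, rather than by updating parameters, can be sketched with a per-pixel nearest-neighbour lookup. This is a simplified illustration and not the PARMESAN implementation: the hand-written 2D feature vectors stand in for hidden representations from a network, and the `Memory` class, cosine similarity, and majority vote are assumptions made for the example.

```python
import math
from collections import Counter

def cosine(a, b):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))

class Memory:
    """Stores (feature, label) pairs; no trainable parameters."""

    def __init__(self):
        self.items = []

    def add(self, features, labels):
        # "Learning" only appends new examples to memory.
        self.items.extend(zip(features, labels))

    def predict(self, feature, k=3):
        # Search memory for the k most similar features and vote.
        ranked = sorted(self.items, key=lambda it: -cosine(feature, it[0]))
        votes = Counter(label for _, label in ranked[:k])
        return votes.most_common(1)[0][0]

    def predict_dense(self, grid, k=3):
        # Dense prediction: one label per grid location.
        return [[self.predict(f, k) for f in row] for row in grid]

memory = Memory()
memory.add([(1.0, 0.0), (0.9, 0.1), (1.0, 0.2)], ["background"] * 3)
memory.add([(0.0, 1.0), (0.1, 0.9), (0.2, 1.0)], ["organ"] * 3)

grid = [[(0.95, 0.05), (0.1, 1.0)],
        [(0.05, 0.8), (1.0, 0.1)]]
labels = memory.predict_dense(grid)

# A new class becomes available immediately after it is written to memory.
memory.add([(-1.0, 0.0), (-0.9, 0.1), (-1.0, -0.1)], ["lesion"] * 3)
new_label = memory.predict((-0.95, 0.0))
```

Adding the third class requires no gradient step, which mirrors the abstract's claim that adaptation happens purely through memory updates.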

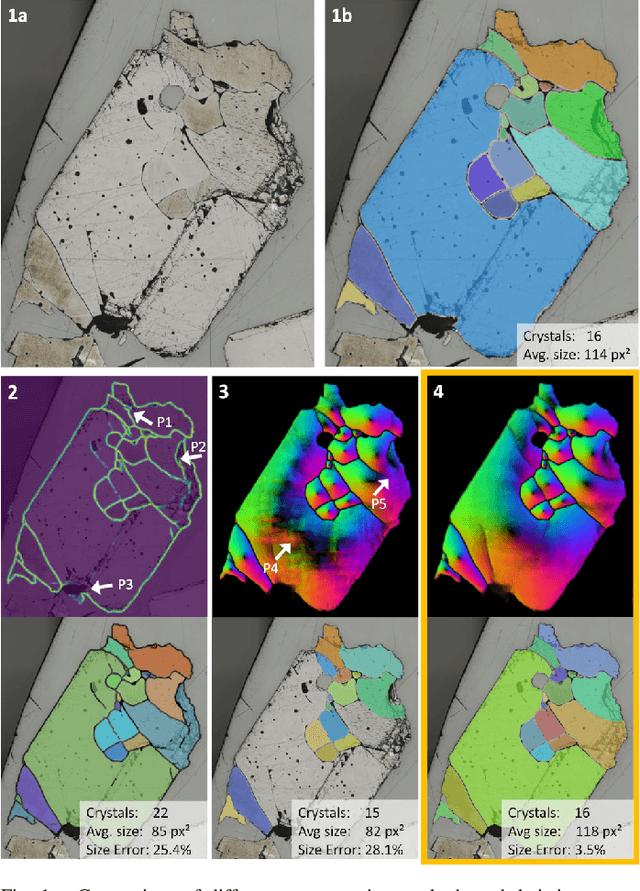

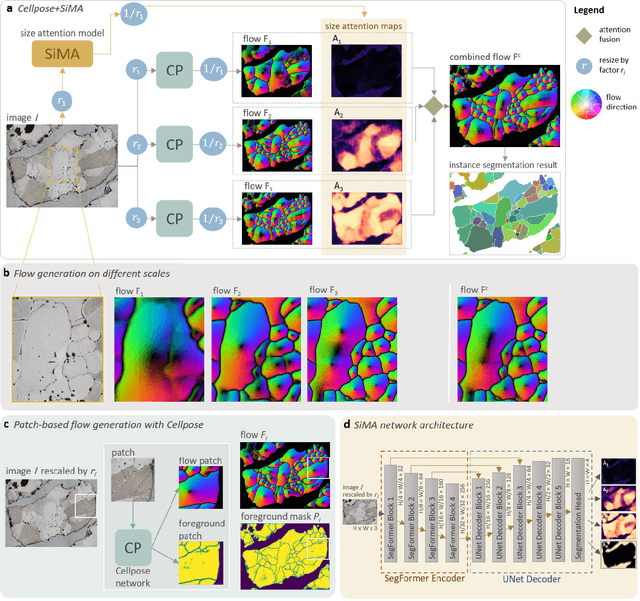

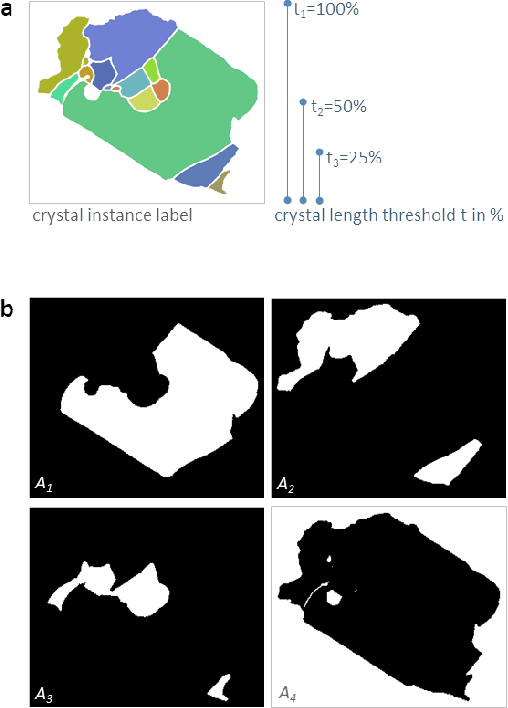

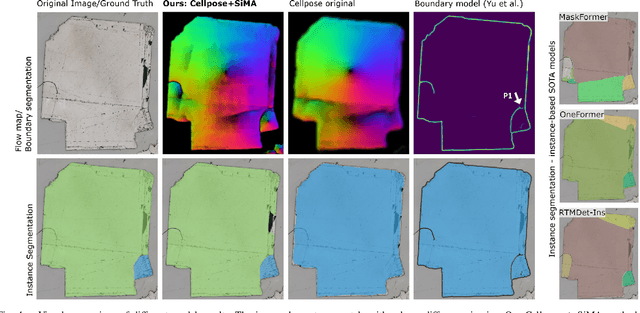

Multi-scale attention-based instance segmentation for measuring crystals with large size variation

Jan 08, 2024

Abstract: Quantitative measurement of crystals in high-resolution images allows for important insights into underlying material characteristics. Deep learning has shown great progress in vision-based automatic crystal size measurement, but current instance segmentation methods reach their limits with images that have large variation in crystal size or hard-to-detect crystal boundaries. Even small image segmentation errors, such as incorrectly fused or separated segments, can significantly lower the accuracy of the measured results. Instead of improving the existing pixel-wise boundary segmentation methods, we propose to use an instance-based segmentation method, which gives more robust segmentation results and thereby improves measurement accuracy. Our novel method enhances flow maps with a size-aware multi-scale attention module. The attention module adaptively fuses information from multiple scales and focuses on the most relevant scale for each segmented image area. We demonstrate that our proposed attention fusion strategy outperforms state-of-the-art instance and boundary segmentation methods, as well as simple average fusion of multi-scale predictions. We evaluate our method on a refractory raw material dataset of high-resolution images with large variation in crystal size and show that our model can be used to calculate the crystal size more accurately than existing methods.
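The difference between attention-based fusion and simple average fusion of multi-scale predictions can be shown with a small sketch. It assumes one scalar prediction per pixel and per scale as a stand-in for the flow maps, and it takes the attention logits as given inputs, whereas in the paper they come from a learned size-aware module. The function names are hypothetical.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def attention_fuse(preds, logits):
    """Fuse preds[s][i] with per-pixel softmax weights over scales s."""
    n_scales, n_pixels = len(preds), len(preds[0])
    fused = []
    for i in range(n_pixels):
        weights = softmax([logits[s][i] for s in range(n_scales)])
        fused.append(sum(w * preds[s][i] for s, w in enumerate(weights)))
    return fused

def average_fuse(preds):
    """Baseline: every scale contributes equally at every pixel."""
    n_scales = len(preds)
    return [sum(column) / n_scales for column in zip(*preds)]

# Two scales, two pixels.
preds = [[0.2, 0.9],
         [0.8, 0.1]]

# Equal logits reduce attention fusion to the average baseline.
uniform = attention_fuse(preds, [[0.0, 0.0], [0.0, 0.0]])

# Peaked logits pick scale 0 for pixel 0 and scale 1 for pixel 1.
peaked = attention_fuse(preds, [[10.0, -10.0], [-10.0, 10.0]])
baseline = average_fuse(preds)
```

Because the weights are computed per pixel, each image area can rely on the scale that suits it best, while average fusion treats all scales alike everywhere.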

Trusted Artificial Intelligence: Towards Certification of Machine Learning Applications

Mar 31, 2021

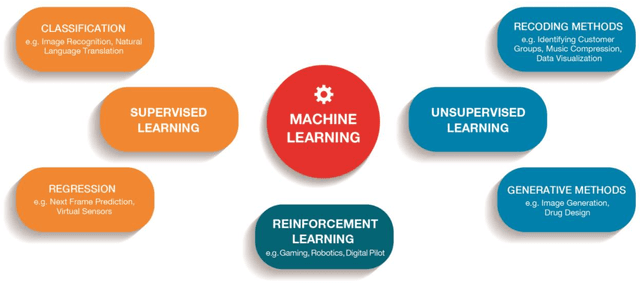

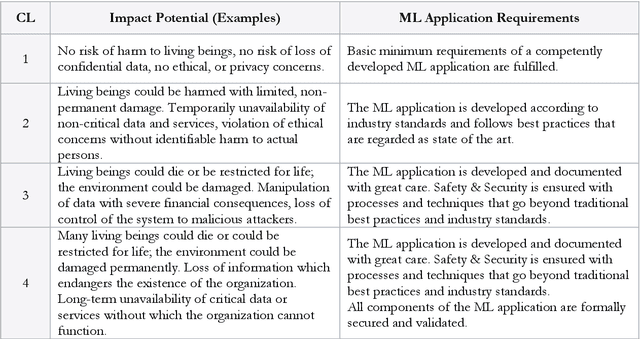

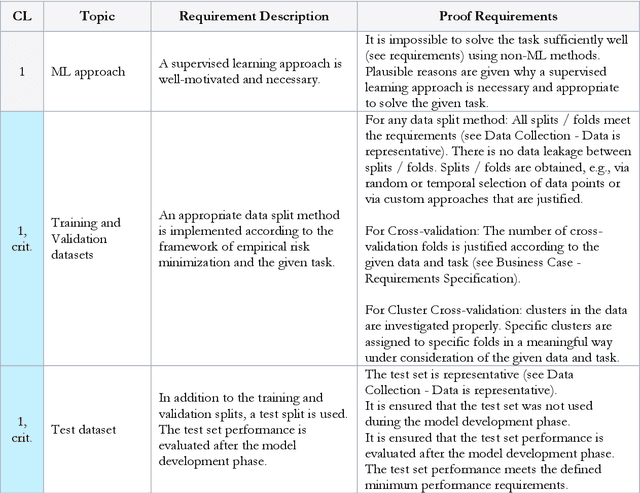

Abstract: Artificial Intelligence is one of the fastest growing technologies of the 21st century and accompanies us in our daily lives when interacting with technical applications. However, reliance on such technical systems is crucial for their widespread applicability and acceptance. The societal tools to express reliance are usually formalized by lawful regulations, i.e., standards, norms, accreditations, and certificates. Therefore, the TÜV AUSTRIA Group, in cooperation with the Institute for Machine Learning at the Johannes Kepler University Linz, proposes a certification process and an audit catalog for Machine Learning applications. We are convinced that our approach can serve as the foundation for the certification of applications that use Machine Learning and Deep Learning, the techniques that drive the current revolution in Artificial Intelligence. While certain high-risk areas, such as fully autonomous robots in workspaces shared with humans, are still some time away from certification, we aim to cover low-risk applications with our certification procedure. Our holistic approach attempts to analyze Machine Learning applications from multiple perspectives to evaluate and verify the aspects of secure software development, functional requirements, data quality, data protection, and ethics. Inspired by existing work, we introduce four criticality levels to map the criticality of a Machine Learning application regarding the impact of its decisions on people, environment, and organizations. Currently, the audit catalog can be applied to low-risk applications within the scope of supervised learning as commonly encountered in industry. Guided by field experience, scientific developments, and market demands, the audit catalog will be extended and modified accordingly.