Markus Hofinger

The Five Elements of Flow

Dec 23, 2019

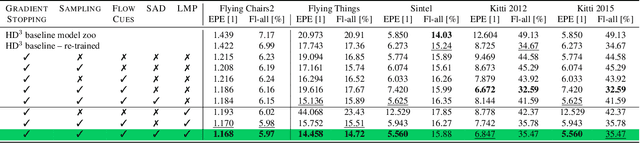

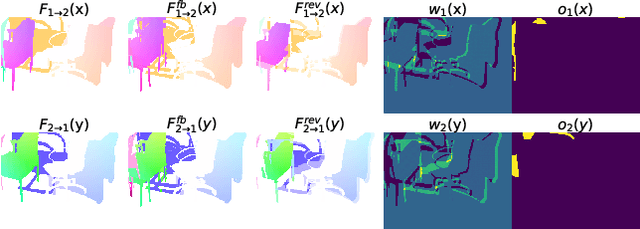

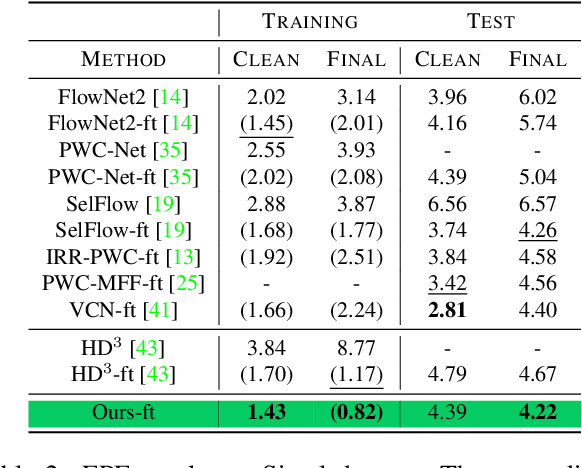

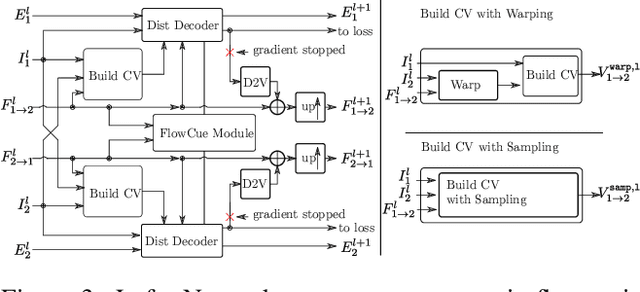

Abstract: In this work we propose five concrete steps to improve the performance of optical flow algorithms. We carefully reviewed recently introduced innovations and well-established techniques in deep learning-based flow methods, including i) pyramidal feature representations, ii) flow-based consistency checks, iii) cost volume construction practices, and iv) distillation, and present extensions or alternatives to the inhibiting factors we identified therein. We also show how changing the way gradients propagate in modern flow networks can lead to surprising boosts in performance. Finally, we contribute a novel feature that adaptively guides the learning process towards improving on under-performing flow predictions. Our findings are conceptually simple and easy to implement, yet result in compelling improvements on relevant error measures that we demonstrate via exhaustive ablations on datasets like Flying Chairs2, Flying Things, Sintel and KITTI. We establish new state-of-the-art results on the challenging Sintel and KITTI 2015 test datasets, and even show the portability of our findings to different optical flow and depth from stereo approaches.

Learning Multi-Object Tracking and Segmentation from Automatic Annotations

Dec 04, 2019

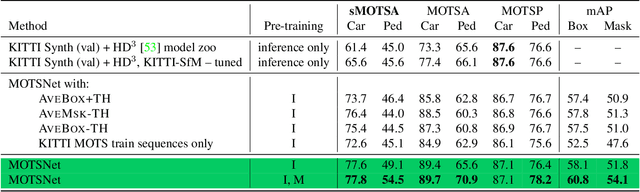

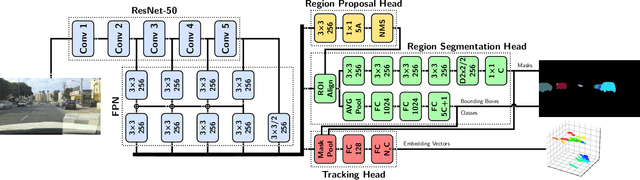

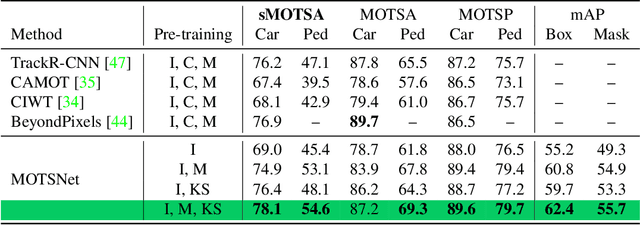

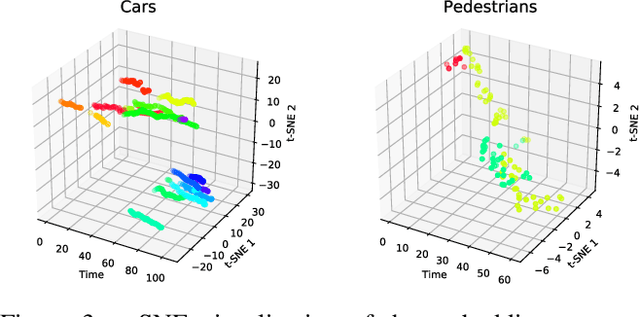

Abstract: In this work we contribute a novel pipeline to automatically generate training data, and to improve over state-of-the-art multi-object tracking and segmentation (MOTS) methods. Our proposed tracklet mining algorithm turns raw street-level videos into high-fidelity MOTS training data, is scalable, and removes the need for expensive and time-consuming manual annotation. We leverage state-of-the-art instance segmentation results in combination with optical flow obtained from models also trained on automatically harvested training data. Our second major contribution is MOTSNet - a deep learning, tracking-by-detection architecture for MOTS - deploying a novel mask-pooling layer for improved object association over time. Training MOTSNet with our automatically extracted data leads to significantly improved sMOTSA scores on the novel KITTI MOTS dataset (+1.9%/+7.5% on cars/pedestrians). Even without learning from a single manually annotated MOTS training example, we still improve over the prior state of the art, confirming the compelling properties of our pipeline. On the MOTSChallenge dataset we improve by +4.1%, further confirming the efficacy of our proposed MOTSNet.

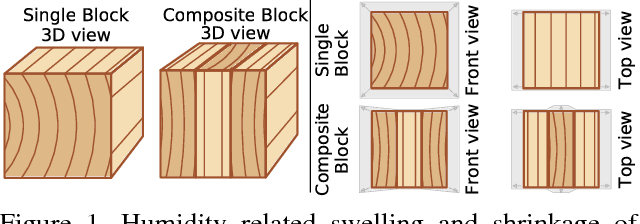

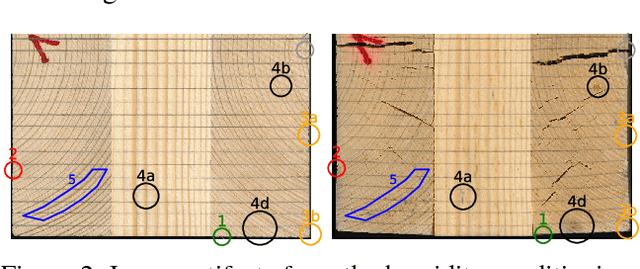

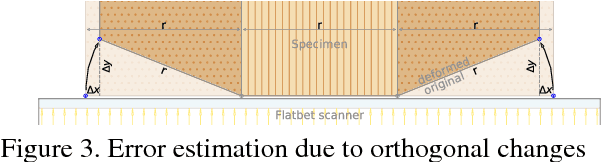

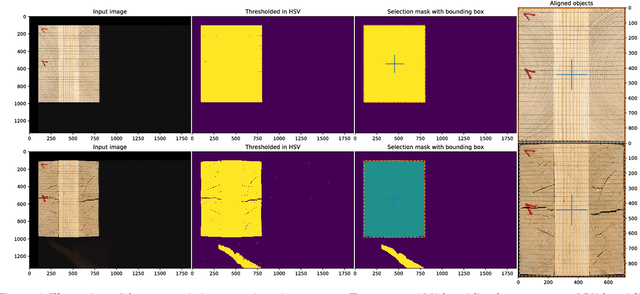

Robust Deformation Estimation in Wood-Composite Materials using Variational Optical Flow

Feb 13, 2018

Abstract: Wood-composite materials are widely used today as they homogenize humidity-related directional deformations. Quantifying these deformations as coefficients is important for construction and engineering and is a topic of current research, but it is still a manual process. This work introduces a novel computer vision approach that automatically extracts these properties directly from scans of the wooden specimens, taken at different humidity levels during the long-lasting humidity conditioning process. These scans are used to compute a humidity-dependent deformation field for each pixel, from which the desired coefficients can easily be calculated. The overall method includes automated registration of the wooden blocks and numerical optimization to compute a variational optical flow field, which is then used to calculate dense strain fields and finally the engineering coefficients and their variance throughout the wooden blocks. The method's regularization is fully parameterizable, which makes it possible to model and suppress artifacts due to surface appearance changes of the specimens from mold, cracks, etc. that typically arise in the conditioning process.

* 8 pages, 8 figures, originally published in the 23rd Computer Vision Winter Workshop proceedings, 2018: http://cmp.felk.cvut.cz/cvww2018/papers/28.pdf
