The Five Elements of Flow


Dec 23, 2019

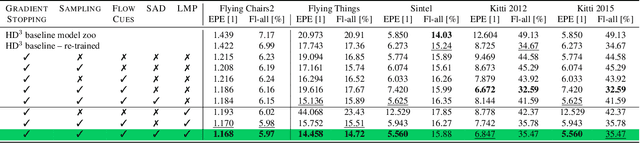

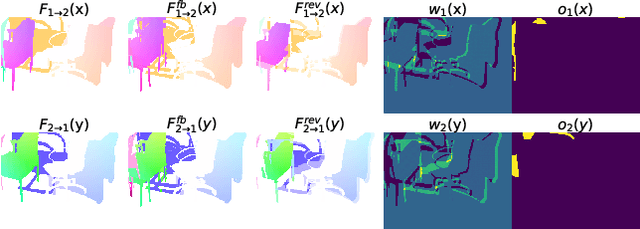

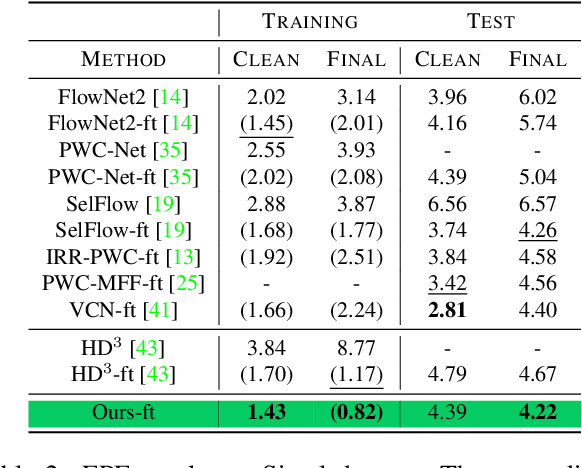

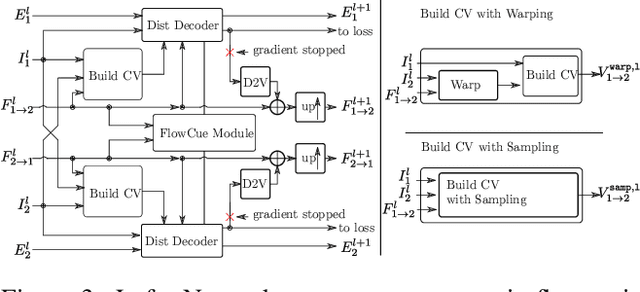

In this work we propose five concrete steps to improve the performance of optical flow algorithms. We carefully reviewed recently introduced innovations and well-established techniques in deep learning-based flow methods, including i) pyramidal feature representations, ii) flow-based consistency checks, iii) cost volume construction practices, and iv) distillation, and present extensions or alternatives to the inhibiting factors we identified therein. We also show how changing the way gradients propagate in modern flow networks can lead to surprising boosts in performance. Finally, we contribute a novel feature that adaptively guides the learning process towards improving on under-performing flow predictions. Our findings are conceptually simple and easy to implement, yet result in compelling improvements on relevant error measures, which we demonstrate via exhaustive ablations on datasets such as Flying Chairs2, Flying Things, Sintel and KITTI. We establish new state-of-the-art results on the challenging Sintel and KITTI 2015 test datasets, and also show the portability of our findings to different optical flow and depth-from-stereo approaches.
