Marko Krizmancic

Decentralized Multi-Robot Formation Control Using Reinforcement Learning

Jun 26, 2023

Abstract: This paper presents a decentralized leader-follower multi-robot formation control method based on a reinforcement learning (RL) algorithm, applied to a swarm of small educational Sphero robots. Since the basic Q-learning method is known to require large memory resources for Q-tables, this work implements the Double Deep Q-Network (DDQN) algorithm, which has achieved excellent results in many robotic problems. To enhance the system behavior, we trained two different DDQN models, one for reaching the formation and the other for maintaining it. The models use a discrete set of robot motions (actions) to adapt the continuous nonlinear system to the discrete nature of RL. The presented approach has been tested in simulation and in real-world experiments, which show that the multi-robot system can achieve and maintain a stable formation without the need for complex mathematical models and nonlinear control laws.
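For readers unfamiliar with DDQN, the following is a minimal sketch of how a Double DQN learning target is typically computed over a discrete action set. It is not taken from the paper: the function name `double_dqn_targets`, its parameters, and the default discount factor are illustrative assumptions, and the Q-value arrays stand in for the outputs of an online network and a target network.

```python
import numpy as np

def double_dqn_targets(rewards, dones, q_online_next, q_target_next, gamma=0.99):
    """Compute Double DQN targets for a batch of transitions.

    Hypothetical helper for illustration only (not the paper's code).
    rewards:        shape (batch,)
    dones:          shape (batch,), 1.0 where the episode ended
    q_online_next:  shape (batch, n_actions), online-network Q-values at s'
    q_target_next:  shape (batch, n_actions), target-network Q-values at s'
    """
    rewards = np.asarray(rewards, dtype=float)
    dones = np.asarray(dones, dtype=float)
    q_online_next = np.asarray(q_online_next, dtype=float)
    q_target_next = np.asarray(q_target_next, dtype=float)

    # The online network selects the greedy action in the next state...
    best_actions = np.argmax(q_online_next, axis=1)
    # ...and the target network evaluates that selected action.
    next_values = q_target_next[np.arange(len(best_actions)), best_actions]

    # Terminal transitions do not bootstrap from the next state.
    return rewards + gamma * (1.0 - dones) * next_values
```

Separating action selection from action evaluation in this way is what distinguishes Double DQN from the standard DQN target, which takes the maximum over the target network's own Q-values.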

Cooperative Aerial-Ground Multi-Robot System for Automated Construction Tasks

Apr 29, 2022

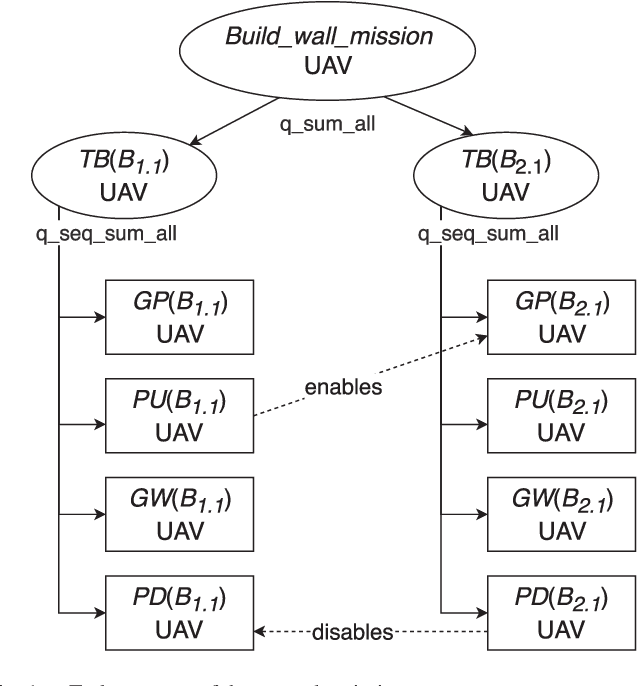

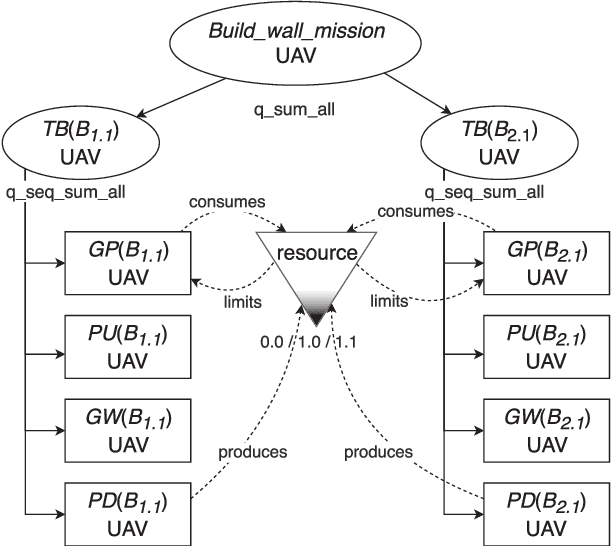

Abstract: In this paper, we study a cooperative aerial-ground robotic team and its application to the task of automated construction. We propose a solution for planning and coordinating the mission of constructing a wall with a predefined structure for a heterogeneous system consisting of one mobile robot and up to three unmanned aerial vehicles. The wall consists of bricks of various weights and sizes, some of which need to be transported using multiple robots simultaneously. To that end, we use a hierarchical task representation to specify interrelationships between mission subtasks and employ an effective scheduling and coordination mechanism inspired by Generalized Partial Global Planning. We evaluate the performance of the method under different optimization criteria and validate the solution in a realistic Gazebo simulation environment.

* Also included in conference proceedings of IEEE International Conference on Robotics and Automation (ICRA) 2020
