Mark O'Connor

wav2pos: Sound Source Localization using Masked Autoencoders

Aug 28, 2024

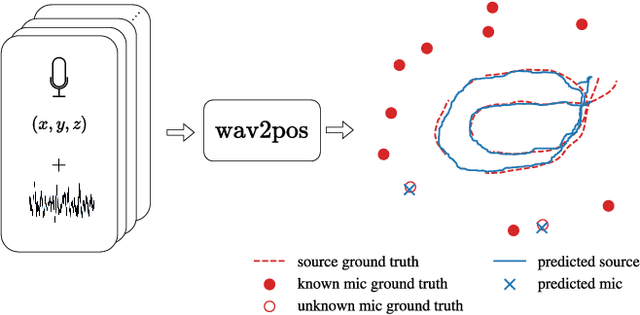

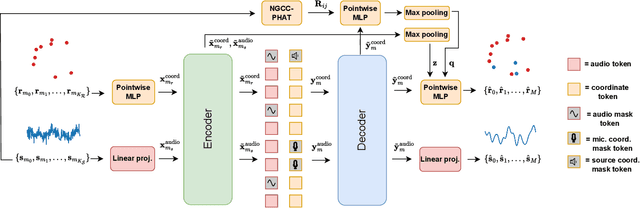

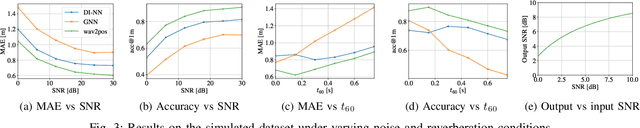

Abstract:We present a novel approach to the 3D sound source localization task for distributed ad-hoc microphone arrays by formulating it as a set-to-set regression problem. By training a multi-modal masked autoencoder model that operates on audio recordings and microphone coordinates, we show that such a formulation allows for accurate localization of the sound source, by reconstructing coordinates masked in the input. Our approach is flexible in the sense that a single model can be used with an arbitrary number of microphones, even when a subset of audio recordings and microphone coordinates are missing. We test our method on simulated and real-world recordings of music and speech in indoor environments, and demonstrate competitive performance compared to both classical and other learning based localization methods.

Extending GCC-PHAT using Shift Equivariant Neural Networks

Aug 09, 2022

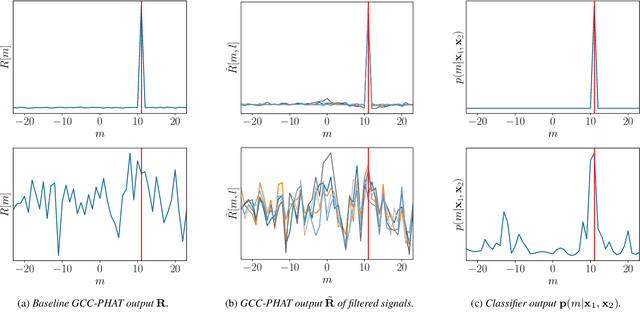

Abstract:Speaker localization using microphone arrays depends on accurate time delay estimation techniques. For decades, methods based on the generalized cross correlation with phase transform (GCC-PHAT) have been widely adopted for this purpose. Recently, the GCC-PHAT has also been used to provide input features to neural networks in order to remove the effects of noise and reverberation, but at the cost of losing theoretical guarantees in noise-free conditions. We propose a novel approach to extending the GCC-PHAT, where the received signals are filtered using a shift equivariant neural network that preserves the timing information contained in the signals. By extensive experiments we show that our model consistently reduces the error of the GCC-PHAT in adverse environments, with guarantees of exact time delay recovery in ideal conditions.

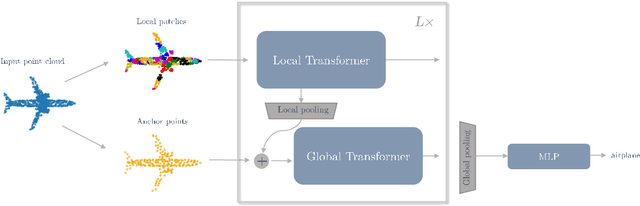

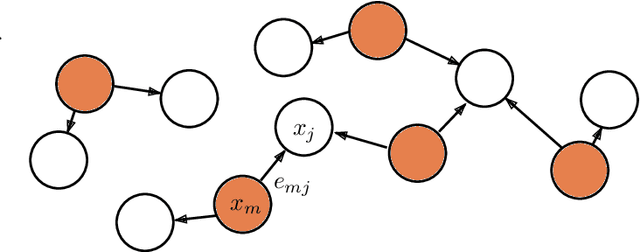

Points to Patches: Enabling the Use of Self-Attention for 3D Shape Recognition

Apr 08, 2022

Abstract:While the Transformer architecture has become ubiquitous in the machine learning field, its adaptation to 3D shape recognition is non-trivial. Due to its quadratic computational complexity, the self-attention operator quickly becomes inefficient as the set of input points grows larger. Furthermore, we find that the attention mechanism struggles to find useful connections between individual points on a global scale. In order to alleviate these problems, we propose a two-stage Point Transformer-in-Transformer (Point-TnT) approach which combines local and global attention mechanisms, enabling both individual points and patches of points to attend to each other effectively. Experiments on shape classification show that such an approach provides more useful features for downstream tasks than the baseline Transformer, while also being more computationally efficient. In addition, we also extend our method to feature matching for scene reconstruction, showing that it can be used in conjunction with existing scene reconstruction pipelines.

Keyword Transformer: A Self-Attention Model for Keyword Spotting

Apr 15, 2021

Abstract:The Transformer architecture has been successful across many domains, including natural language processing, computer vision and speech recognition. In keyword spotting, self-attention has primarily been used on top of convolutional or recurrent encoders. We investigate a range of ways to adapt the Transformer architecture to keyword spotting and introduce the Keyword Transformer (KWT), a fully self-attentional architecture that exceeds state-of-the-art performance across multiple tasks without any pre-training or additional data. Surprisingly, this simple architecture outperforms more complex models that mix convolutional, recurrent and attentive layers. KWT can be used as a drop-in replacement for these models, setting two new benchmark records on the Google Speech Commands dataset with 98.6% and 97.7% accuracy on the 12 and 35-command tasks respectively.

Deep Ordinal Regression with Label Diversity

Jun 29, 2020

Abstract:Regression via classification (RvC) is a common method used for regression problems in deep learning, where the target variable belongs to a set of continuous values. By discretizing the target into a set of non-overlapping classes, it has been shown that training a classifier can improve neural network accuracy compared to using a standard regression approach. However, it is not clear how the set of discrete classes should be chosen and how it affects the overall solution. In this work, we propose that using several discrete data representations simultaneously can improve neural network learning compared to a single representation. Our approach is end-to-end differentiable and can be added as a simple extension to conventional learning methods, such as deep neural networks. We test our method on three challenging tasks and show that our method reduces the prediction error compared to a baseline RvC approach while maintaining a similar model complexity.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge