Mark Greaves

Pacific Northwest National Laboratory

Adversarial Training for EM Classification Networks

Nov 20, 2020

Abstract: We present a novel variant of Domain Adversarial Neural Networks (DANN) with improvements to the loss functions, training paradigm, and hyperparameter optimization. New loss functions are defined for both forks of the DANN network, the label predictor and the domain classifier, in order to facilitate more rapid gradient descent, provide more seamless integration into modern neural network frameworks, and allow previously unavailable inferences into network behavior. Using these loss functions, it is possible to extend the concept of 'domain' to include arbitrary user-defined labels applicable to subsets of the training data, the test data, or both. As such, the network can be operated in either 'On the Fly' mode, where features provided by the feature extractor that are indicative of differences between 'domain' labels in the training data are removed, or in 'Test Collection Informed' mode, where features indicative of differences between 'domain' labels in the combined training and test data are removed (without needing to know or provide test activity labels to the network). This work also draws heavily from previous work on Robust Training, which draws training examples from an L_inf ball around the training data in order to remove fragile features induced by random fluctuations in the data. On these networks we explore the process of hyperparameter optimization for both the domain adversarial and robust hyperparameters. Finally, this network is applied to the construction of a binary classifier used to identify the presence of an EM signal emitted by a turbopump. For this example, the effect of the robust and domain adversarial training is to remove features indicative of the difference in background between instances of operation of the device, providing highly discriminative features on which to construct the classifier.
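The idea of drawing training examples from an L_inf ball around the training data can be illustrated with a minimal sketch. It is not the authors' implementation: the function names `sample_linf_ball`, `linf_distance`, and `augment`, the radius `eps`, and the use of uniform random draws are all assumptions made for illustration (robust training in the literature often searches for worst-case points in the ball rather than sampling uniformly).

```python
import random


def sample_linf_ball(x, eps, rng=None):
    """Return a point in the L_inf ball of radius eps centered at x.

    Each coordinate is shifted by an independent uniform draw from
    [-eps, eps], so no coordinate moves by more than eps.
    (Hypothetical helper; uniform sampling is an assumption.)
    """
    if eps < 0:
        raise ValueError("eps must be non-negative")
    rng = rng or random.Random()
    return [xi + rng.uniform(-eps, eps) for xi in x]


def linf_distance(a, b):
    """L_inf distance: the largest absolute coordinate difference."""
    return max(abs(ai - bi) for ai, bi in zip(a, b))


def augment(batch, eps, n_draws, seed=0):
    """Replace each example in batch with n_draws perturbed copies.

    Copies of the same example are adjacent in the returned list.
    """
    rng = random.Random(seed)
    return [sample_linf_ball(x, eps, rng) for x in batch for _ in range(n_draws)]
```

In a training loop, a batch would be passed through something like `augment(batch, eps=0.1, n_draws=4)` before the forward pass, with each perturbed copy keeping the label of the example it came from. Here `eps` plays the role of a robust hyperparameter of the kind the abstract says is tuned.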

Machine Learning Algorithms for Active Monitoring of High Performance Computing as a Service (HPCaaS) Cloud Environments

Sep 26, 2020

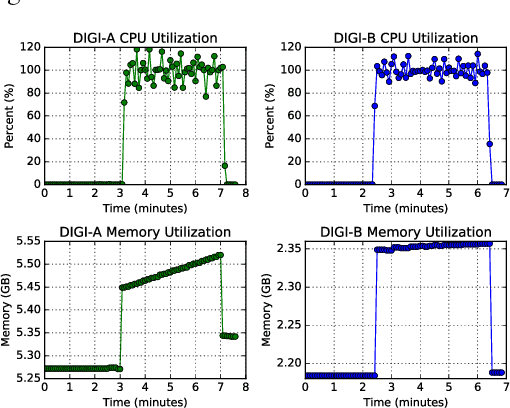

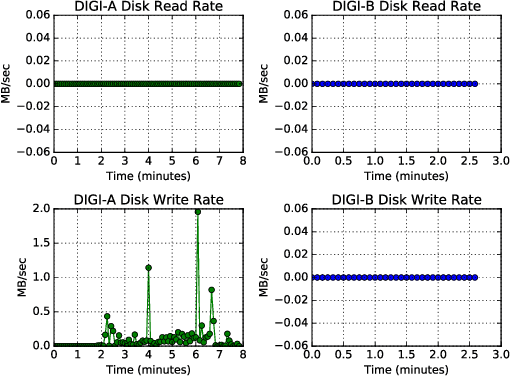

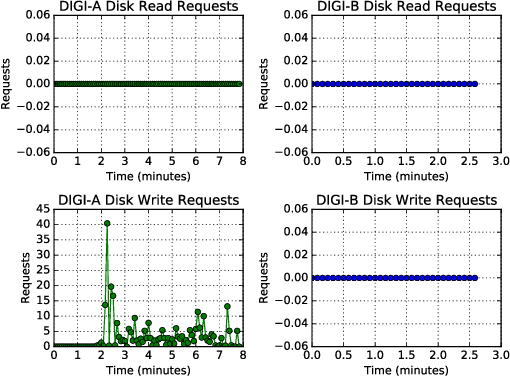

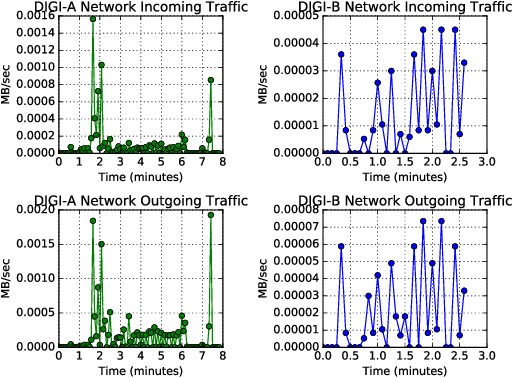

Abstract: Cloud computing provides ubiquitous and on-demand access to vast reconfigurable resources that can meet any computational need. Many service models are available, but the Infrastructure as a Service (IaaS) model is particularly suited to operate as a high performance computing (HPC) platform, by networking large numbers of cloud computing nodes. We used the Pacific Northwest National Laboratory (PNNL) cloud computing environment to perform our experiments. A number of cloud computing providers, such as Amazon Web Services, Microsoft Azure, and IBM Cloud, offer flexible and scalable computing resources. This paper explores the viability of identifying types of engineering applications running on a cloud infrastructure configured as an HPC platform, using privacy-preserving features as input to statistical models. The engineering applications considered in this work include MCNP6, a radiation transport code developed by Los Alamos National Laboratory; OpenFOAM, an open source computational fluid dynamics code; and CADO-NFS, a numerical implementation of the general number field sieve algorithm used for integer factorization. Our experiments use the OpenStack cloud management tool to create a cloud HPC environment and the privacy-preserving Ceilometer billing meters as classification features to demonstrate identification of these applications.
