Marjan Nourollahi

Proximity-Based Active Learning on Streaming Data: A Personalized Eating Moment Recognition

Mar 29, 2020

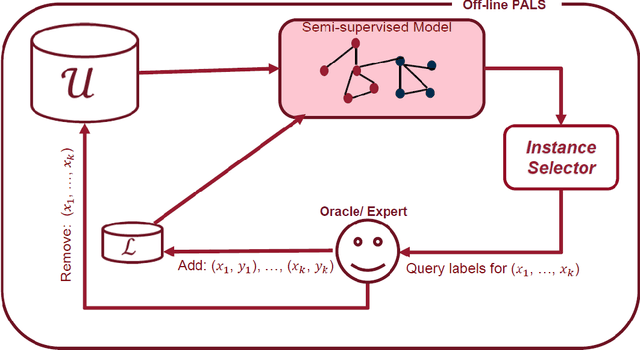

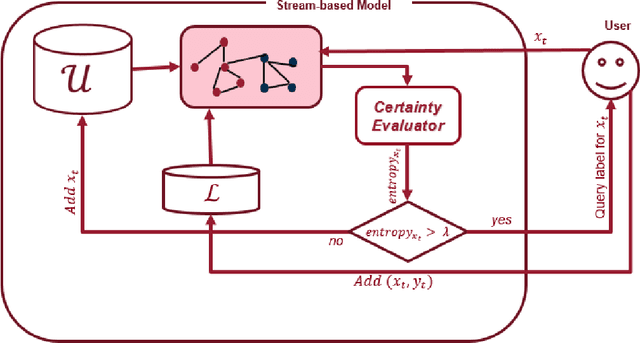

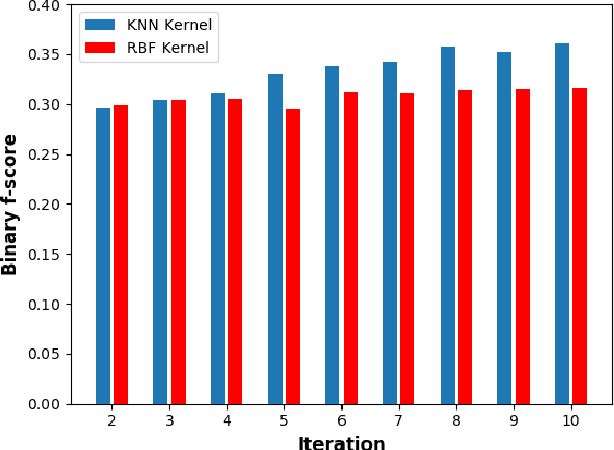

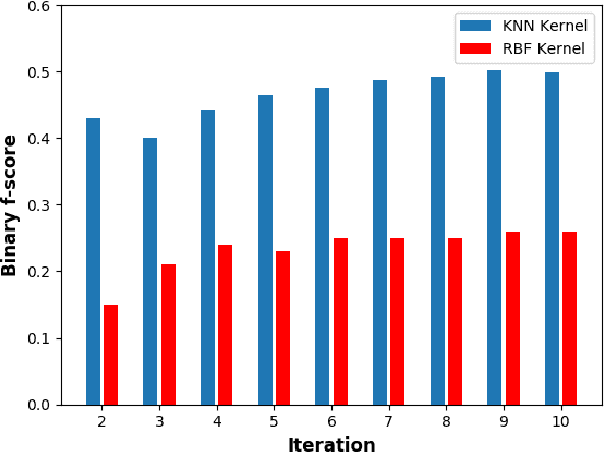

Abstract: Detecting when eating occurs is an essential step toward automatic dietary monitoring, medication adherence assessment, and diet-related health interventions. Wearable technologies play a central role in designing unobtrusive diet monitoring solutions by leveraging machine learning algorithms that work on time-series sensor data to detect eating moments. While much research has been done on developing activity recognition and eating moment detection algorithms, the performance of these detection algorithms drops substantially when a model trained on one user is used by a new user. To facilitate the development of personalized models, we propose PALS, Proximity-based Active Learning on Streaming data, a novel proximity-based model for recognizing eating gestures with the goal of significantly decreasing the need for labeled data from new users. In particular, we propose an optimization problem to perform active learning under a limited query budget by leveraging unlabeled data. Our extensive analysis on data collected in both controlled and uncontrolled settings indicates that the F-score of PALS ranges from 22% to 39% for a budget that varies from 10 to 60 queries. Furthermore, compared to the state-of-the-art approaches, off-line PALS, on average, achieves up to 40% higher recall and 12% higher F-score in detecting eating gestures.
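The idea of querying labels on a stream under a fixed budget can be illustrated with a minimal sketch. This is not the PALS optimization problem, which the abstract does not spell out; it is a simple nearest-neighbor heuristic written for illustration. The function name `stream_with_budget`, the `oracle` callback, and the `radius` threshold are all hypothetical.

```python
import math

def stream_with_budget(stream, oracle, budget, radius):
    """Label a stream of feature vectors with a limited number of queries.

    Illustrative heuristic only (an assumption, not the PALS method):
    query the oracle when a sample is far from every labeled sample and
    budget remains; otherwise reuse the label of the nearest labeled sample.
    """
    labeled = []   # (features, label) pairs obtained from the oracle
    outputs = []   # label assigned to each streamed sample, in order
    for x in stream:
        # Nearest labeled sample so far, or None if nothing is labeled yet.
        nearest = min(labeled, key=lambda p: math.dist(p[0], x), default=None)
        is_far = nearest is None or math.dist(nearest[0], x) > radius
        if is_far and budget > 0:
            label = oracle(x)      # spend one query on an unfamiliar sample
            budget -= 1
            labeled.append((x, label))
        else:
            # Out of budget or close to a known sample: propagate its label.
            label = nearest[1] if nearest is not None else None
        outputs.append(label)
    return outputs, labeled
```

With a small budget, queries are spent only on samples that lie outside the neighborhood of anything already labeled, which is one simple way to use proximity to reduce labeling effort.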

Resource-Efficient Wearable Computing for Real-Time Reconfigurable Machine Learning: A Cascading Binary Classification

Jul 07, 2019

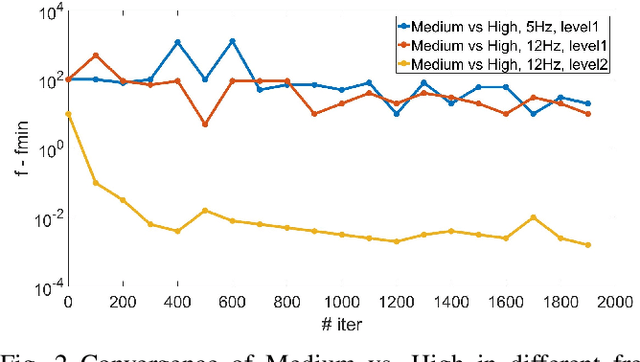

Abstract: Advances in embedded systems have enabled integration of many lightweight sensory devices within our daily life. In particular, this trend has given rise to continuous expansion of wearable sensors in a broad range of applications from health and fitness monitoring to social networking and military surveillance. Wearables leverage machine learning techniques to profile the behavioral routines of their end-users through activity recognition algorithms. Current research assumes that such machine learning algorithms are trained offline. In reality, however, wearables demand continuous reconfiguration of their computational algorithms due to their highly dynamic operation. Developing a personalized and adaptive machine learning model requires real-time reconfiguration of the model. Due to the stringent computation and memory constraints of these embedded sensors, the training and re-training of the computational algorithms needs to be memory- and computation-efficient. In this paper, we propose a framework, based on the notion of online learning, for real-time and on-device machine learning training. We propose to transform the activity recognition problem from a multi-class classification problem into a hierarchical model of binary decisions using cascading online binary classifiers. Our results, based on Pegasos online learning, demonstrate that the proposed approach achieves 97% accuracy in detecting activities of varying intensities using limited memory, while the power usage of the system is reduced by more than 40%.
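A cascade of online binary classifiers can be sketched as follows. The Pegasos update is the standard sub-gradient step for a linear SVM; the cascade ordering, the class names `Pegasos` and `Cascade`, and the default regularization value are assumptions made for illustration and are not taken from the paper.

```python
class Pegasos:
    """Online linear SVM using the Pegasos sub-gradient update."""

    def __init__(self, dim, lam=0.01):
        self.w = [0.0] * dim   # weight vector
        self.lam = lam         # regularization strength (assumed value)
        self.t = 0             # number of updates seen so far

    def score(self, x):
        return sum(wi * xi for wi, xi in zip(self.w, x))

    def update(self, x, y):
        """One online step; y must be +1 or -1."""
        self.t += 1
        eta = 1.0 / (self.lam * self.t)      # step size 1 / (lambda * t)
        violated = y * self.score(x) < 1.0   # hinge loss active at current w?
        shrink = 1.0 - eta * self.lam        # regularization shrinkage
        self.w = [shrink * wi for wi in self.w]
        if violated:
            self.w = [wi + eta * y * xi for wi, xi in zip(self.w, x)]


class Cascade:
    """Multi-class prediction as a chain of binary 'this class vs. the rest' decisions."""

    def __init__(self, classes, dim):
        self.classes = list(classes)
        # One binary stage per class except the last, which is the fallback.
        self.stages = [Pegasos(dim) for _ in self.classes[:-1]]

    def update(self, x, label):
        # Each stage trains only on samples that reach it in the cascade.
        for cls, clf in zip(self.classes, self.stages):
            if label == cls:
                clf.update(x, +1)
                return
            clf.update(x, -1)

    def predict(self, x):
        for cls, clf in zip(self.classes, self.stages):
            if clf.score(x) > 0:
                return cls
        return self.classes[-1]
```

Each stage stores a single weight vector and updates it in one pass per sample, so memory grows with the number of classes times the feature dimension rather than with the amount of data seen.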

Personalized Human Activity Recognition Using Convolutional Neural Networks

Jan 25, 2018

Abstract: A major barrier to personalized human activity recognition using wearable sensors is that the performance of the recognition model drops significantly when the system is adopted by new users or when the physical or behavioral status of users changes. Therefore, the model needs to be retrained by collecting new labeled data in the new context. In this study, we develop a transfer learning framework using convolutional neural networks to build a personalized activity recognition model with minimal user supervision.
