Resource-Efficient Wearable Computing for Real-Time Reconfigurable Machine Learning: A Cascading Binary Classification

Paper and Code

Jul 07, 2019

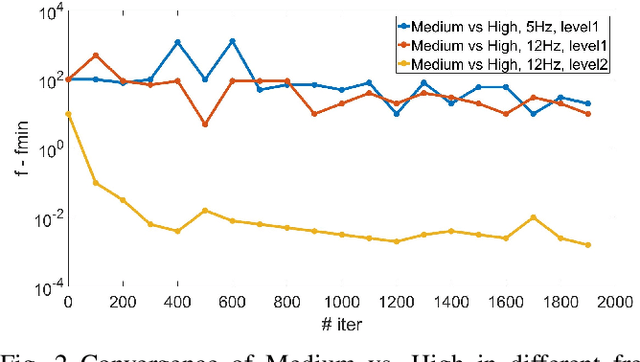

Advances in embedded systems have enabled the integration of many lightweight sensory devices into our daily lives. In particular, this trend has given rise to the continuous expansion of wearable sensors in a broad range of applications, from health and fitness monitoring to social networking and military surveillance. Wearables leverage machine learning techniques to profile the behavioral routines of their end-users through activity recognition algorithms. Current research assumes that such machine learning algorithms are trained offline. In reality, however, wearables demand continuous reconfiguration of their computational algorithms due to their highly dynamic operation. Developing a personalized and adaptive machine learning model requires real-time reconfiguration of the model. Due to the stringent computation and memory constraints of these embedded sensors, the training and re-training of the computational algorithms needs to be memory- and computation-efficient. In this paper, we propose a framework, based on the notion of online learning, for real-time, on-device machine learning training. We propose to transform the activity recognition problem from a multi-class classification problem into a hierarchical model of binary decisions using cascading online binary classifiers. Our results, based on Pegasos online learning, demonstrate that the proposed approach achieves 97% accuracy in detecting activities of varying intensities using limited memory, while the power usage of the system is reduced by more than 40%.
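To make the idea of cascading online binary classifiers concrete, the following is a minimal sketch in Python, not the authors' implementation. It assumes the standard Pegasos update (a stochastic sub-gradient step on the linear SVM objective with step size 1/(λt)) and a simple chain in which stage k decides "activity k" versus "any later activity". The class names `PegasosBinary` and `Cascade`, the activity labels, the two-element feature vectors, and the value of `lam` are all hypothetical choices made for illustration.

```python
class PegasosBinary:
    """Online linear SVM trained with the Pegasos sub-gradient update."""

    def __init__(self, dim, lam=0.01):
        self.w = [0.0] * dim   # one weight per feature; the only state kept
        self.lam = lam         # regularization strength (assumed value)
        self.t = 0             # number of updates seen so far

    def score(self, x):
        return sum(wi * xi for wi, xi in zip(self.w, x))

    def update(self, x, y):
        """Consume one labeled sample; y must be +1 or -1."""
        self.t += 1
        eta = 1.0 / (self.lam * self.t)        # Pegasos step size
        violated = y * self.score(x) < 1.0     # hinge loss is active
        shrink = 1.0 - eta * self.lam
        self.w = [shrink * wi for wi in self.w]
        if violated:
            self.w = [wi + eta * y * xi for wi, xi in zip(self.w, x)]


class Cascade:
    """Chain of binary stages: stage k separates labels[k] from later labels."""

    def __init__(self, labels, dim, lam=0.01):
        self.labels = list(labels)
        # The last label needs no classifier: it is the fall-through case.
        self.stages = [PegasosBinary(dim, lam) for _ in self.labels[:-1]]

    def update(self, x, label):
        for stage, stage_label in zip(self.stages, self.labels):
            if label == stage_label:
                stage.update(x, +1)
                break                 # later stages never see this sample
            stage.update(x, -1)

    def predict(self, x):
        for stage, stage_label in zip(self.stages, self.labels):
            if stage.score(x) > 0:
                return stage_label    # early exit skips the remaining stages
        return self.labels[-1]


if __name__ == "__main__":
    # Hypothetical activities ordered by intensity, with toy 2-D features.
    model = Cascade(["rest", "walk", "run"], dim=2)
    model.update([1.0, 0.0], "rest")
    model.update([0.0, 1.0], "walk")
    print(model.predict([1.0, 0.0]), model.predict([0.0, 1.0]))
```

In this sketch each stage stores a single weight vector and processes one sample at a time, so memory grows with the number of stages times the feature dimension, and prediction can stop at the first stage that fires. How the actual system orders its activities and extracts its features is not stated in the abstract, so those parts should be read as placeholders.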
