Proximity-Based Active Learning on Streaming Data: A Personalized Eating Moment Recognition

Paper and Code

Mar 29, 2020

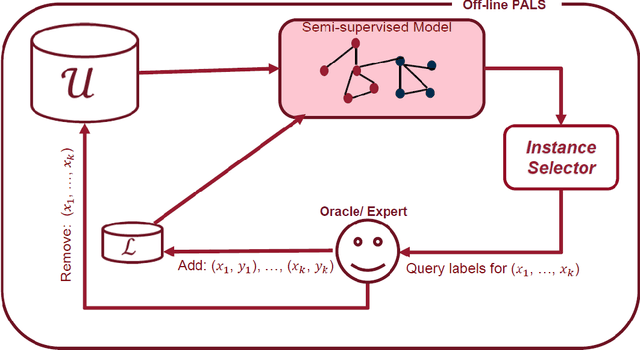

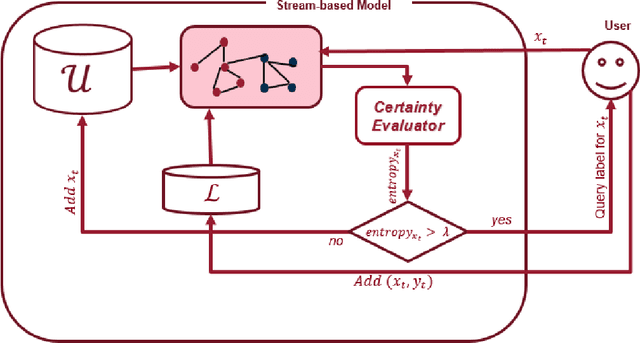

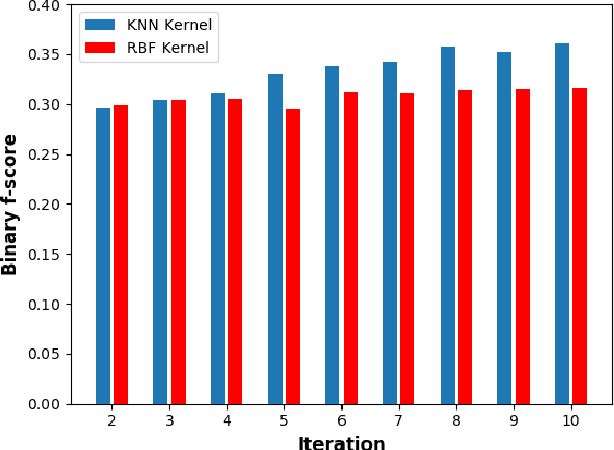

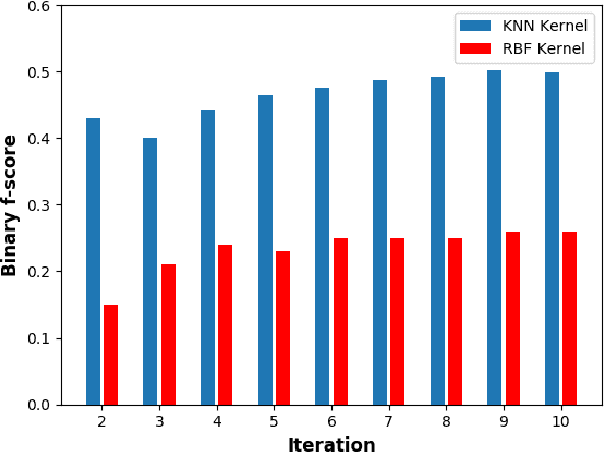

Detecting when eating occurs is an essential step toward automatic dietary monitoring, medication adherence assessment, and diet-related health interventions. Wearable technologies play a central role in designing unobtrusive diet monitoring solutions by leveraging machine learning algorithms that work on time-series sensor data to detect eating moments. While much research has been done on developing activity recognition and eating moment detection algorithms, the performance of these algorithms drops substantially when a model trained on one user is applied to a new user. To facilitate the development of personalized models, we propose PALS, Proximity-based Active Learning on Streaming data, a novel proximity-based model for recognizing eating gestures with the goal of significantly decreasing the need for labeled data from new users. In particular, we formulate an optimization problem to perform active learning under a limited query budget by leveraging unlabeled data. Our extensive analysis on data collected in both controlled and uncontrolled settings indicates that the F-score of PALS ranges from 22% to 39% for a budget that varies from 10 to 60 queries. Furthermore, compared to state-of-the-art approaches, off-line PALS, on average, achieves up to 40% higher recall and 12% higher F-score in detecting eating gestures.
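To make the idea of budgeted, proximity-driven querying on a stream concrete, the following is a minimal sketch and not the PALS optimization described in the paper. It assumes a simple heuristic: a label is requested only when an incoming sample lies farther than a fixed `radius` from every labeled sample and the query budget is not yet exhausted; otherwise the label of the nearest labeled sample is reused. The function name `stream_active_learning` and the parameters `oracle`, `budget`, and `radius` are hypothetical and chosen for illustration.

```python
import numpy as np


def stream_active_learning(stream, oracle, budget, radius):
    """Illustrative budgeted active learning over a stream (not the PALS method).

    stream: iterable of feature vectors, processed one at a time.
    oracle: callable returning the true label for a sample (the user being asked).
    budget: maximum number of label queries allowed.
    radius: distance beyond which a sample counts as "far" from all labeled data.
    """
    labeled_x, labeled_y = [], []
    predictions = []
    queries_used = 0

    for x in stream:
        x = np.asarray(x, dtype=float)

        # Find the closest labeled sample, if any exist yet.
        if labeled_x:
            dists = np.linalg.norm(np.stack(labeled_x) - x, axis=1)
            nearest = int(np.argmin(dists))
            nearest_dist = dists[nearest]
        else:
            nearest, nearest_dist = None, np.inf

        if nearest_dist > radius and queries_used < budget:
            # Sample is in an unexplored region and budget remains: ask for a label.
            y = oracle(x)
            labeled_x.append(x)
            labeled_y.append(y)
            queries_used += 1
            predictions.append(y)
        elif nearest is not None:
            # Reuse the label of the nearest labeled sample.
            predictions.append(labeled_y[nearest])
        else:
            # No labeled data and no budget: no prediction is possible.
            predictions.append(None)

    return predictions, queries_used


if __name__ == "__main__":
    # Toy two-cluster stream; the oracle stands in for a user labeling gestures.
    toy_stream = [[0.0, 0.0], [0.1, 0.0], [1.0, 1.0], [1.1, 1.0], [0.0, 0.1]]
    toy_oracle = lambda x: int(x[0] > 0.5)
    preds, used = stream_active_learning(toy_stream, toy_oracle, budget=2, radius=0.5)
    print(preds, used)
```

In this sketch the budget is spent greedily on the first samples that fall in unexplored regions. Per the abstract, the paper instead formulates query selection as an optimization problem that also leverages unlabeled data, which this heuristic does not attempt to reproduce.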
