Mariusz Wzorek

Custom Non-Linear Model Predictive Control for Obstacle Avoidance in Indoor and Outdoor Environments

Oct 03, 2024

Abstract:Navigating complex environments requires Unmanned Aerial Vehicles (UAVs) and autonomous systems to perform trajectory tracking and obstacle avoidance in real-time. While many control strategies have effectively utilized linear approximations, addressing the non-linear dynamics of UAVs, especially in obstacle-dense environments, remains a key challenge that requires further research. This paper introduces a Non-linear Model Predictive Control (NMPC) framework for the DJI Matrice 100, addressing these challenges by using a dynamic model and B-spline interpolation for smooth reference trajectories, ensuring minimal deviation while respecting safety constraints. The framework supports various trajectory types and employs a penalty-based cost function for control accuracy in tight maneuvers. The framework utilizes CasADi for efficient real-time optimization, enabling the UAV to maintain robust operation even under tight computational constraints. Simulation and real-world indoor and outdoor experiments demonstrated the NMPC's ability to adapt to disturbances, resulting in smooth, collision-free navigation.
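As a rough illustration of two ingredients named in the abstract, the sketch below evaluates one segment of a uniform cubic B-spline (a common way to obtain a smooth reference) and computes a soft, penalty-based stage cost that grows when the vehicle enters a safety radius around an obstacle. The function names, the weights `w_track` and `w_obs`, and the quadratic penalty form are assumptions made for illustration; the abstract does not specify the paper's actual cost function, and this sketch does not use CasADi.

```python
import math


def cubic_bspline_point(p0, p1, p2, p3, u):
    """Evaluate one uniform cubic B-spline segment at u in [0, 1].

    p0..p3 are consecutive control points (tuples of equal length).
    """
    b0 = (1 - u) ** 3 / 6.0
    b1 = (3 * u**3 - 6 * u**2 + 4) / 6.0
    b2 = (-3 * u**3 + 3 * u**2 + 3 * u + 1) / 6.0
    b3 = u**3 / 6.0
    return tuple(
        b0 * a + b1 * b + b2 * c + b3 * d
        for a, b, c, d in zip(p0, p1, p2, p3)
    )


def stage_cost(pos, ref, obstacle, safe_radius, w_track=1.0, w_obs=100.0):
    """Tracking error plus a quadratic penalty on safety-radius violation.

    The weights and the penalty shape are hypothetical choices.
    """
    tracking = w_track * sum((p - r) ** 2 for p, r in zip(pos, ref))
    violation = max(0.0, safe_radius - math.dist(pos, obstacle))
    return tracking + w_obs * violation**2
```

In a predictive controller, a cost of this kind would be summed over the prediction horizon and minimized subject to the vehicle dynamics; the soft penalty keeps the problem feasible even when the reference passes close to an obstacle.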

UAV-Based Human Body Detector Selection and Fusion for Geolocated Saliency Map Generation

Aug 29, 2024

Abstract:The problem of reliably detecting and geolocating objects of different classes in soft real-time is essential in many application areas, such as Search and Rescue performed using Unmanned Aerial Vehicles (UAVs). This research addresses the complementary problems of system contextual vision-based detector selection, allocation, and execution, in addition to the fusion of detection results from teams of UAVs for the purpose of accurately and reliably geolocating objects of interest in a timely manner. In an offline step, an application-independent evaluation of vision-based detectors from a system perspective is first performed. Based on this evaluation, the most appropriate algorithms for online object detection for each platform are selected automatically before a mission, taking into account a number of practical system considerations, such as the available communication links, video compression used, and the available computational resources. The detection results are fused using a method for building maps of salient locations which takes advantage of a novel sensor model for vision-based detections for both positive and negative observations. A number of simulated and real flight experiments are also presented, validating the proposed method.
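To make the idea of fusing positive and negative observations concrete, here is a minimal sketch of a standard binary Bayesian (log-odds) update for a single map cell. It is not the novel sensor model from the paper, which the abstract does not describe; the detection rates `p_tp` and `p_fp` and the function names are assumed values chosen for illustration.

```python
import math


def log_odds(p):
    """Convert a probability to log-odds."""
    return math.log(p / (1.0 - p))


def probability(l):
    """Convert log-odds back to a probability."""
    return 1.0 / (1.0 + math.exp(-l))


def fuse_cell(prior, observations, p_tp=0.8, p_fp=0.1):
    """Fuse a sequence of detections (True) and non-detections (False).

    p_tp: assumed probability of a detection when an object is present.
    p_fp: assumed probability of a detection when no object is present.
    """
    l = log_odds(prior)
    for detected in observations:
        if detected:
            l += math.log(p_tp / p_fp)
        else:
            l += math.log((1.0 - p_tp) / (1.0 - p_fp))
    return probability(l)
```

Under these assumed rates, a positive observation raises the cell's probability and a negative one lowers it, so reports from several UAVs looking at the same location can be accumulated in any order.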

Polygon Area Decomposition Using a Compactness Metric

Oct 08, 2021

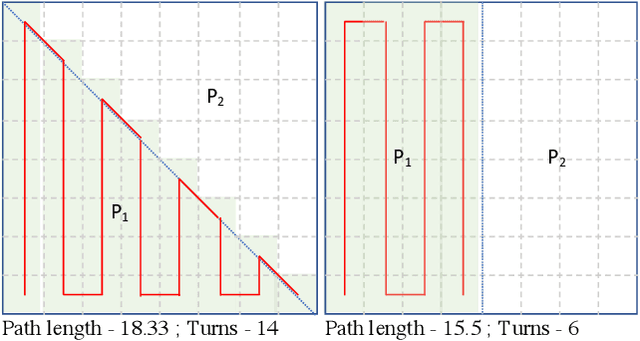

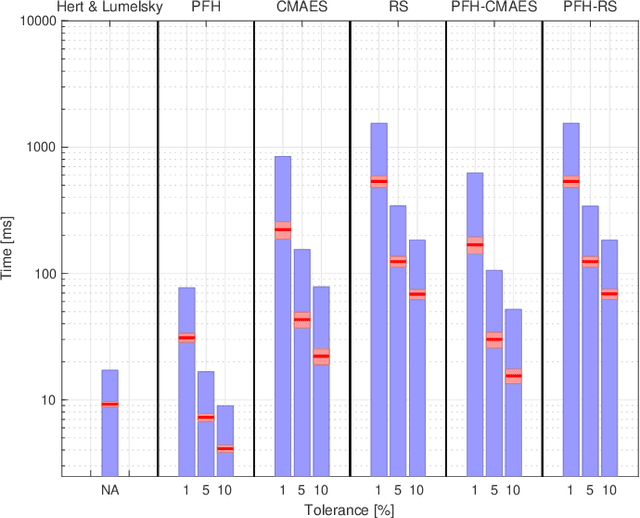

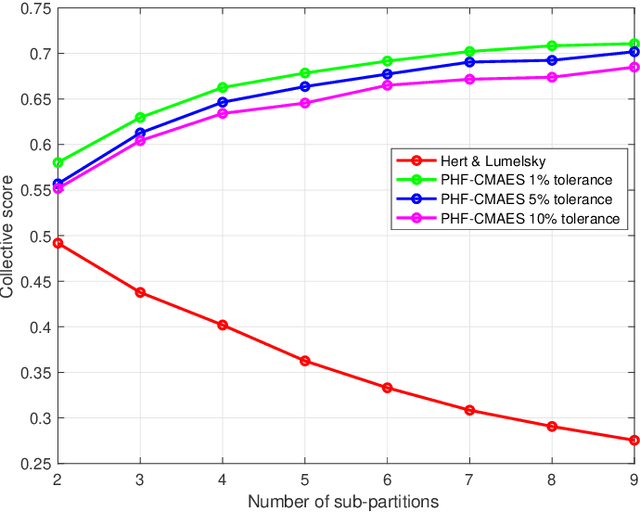

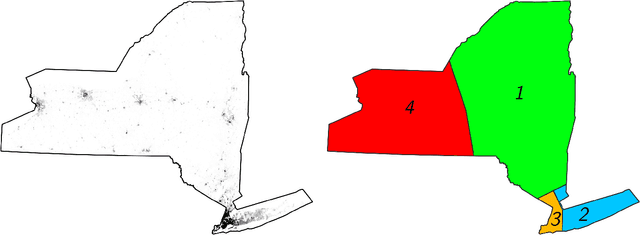

Abstract:In this paper, we consider the problem of partitioning a polygon into a set of connected disjoint sub-polygons, each of which covers an area of a specific size. The work is motivated by terrain covering applications in robotics, where the goal is to find a set of efficient plans for a team of heterogeneous robots to cover a given area. Within this application, solving a polygon partitioning problem is an essential stepping stone. Unlike previous work, the problem formulation proposed in this paper also considers a compactness metric of the generated sub-polygons, in addition to the area size constraints. Maximizing the compactness of sub-polygons directly influences the optimality of any generated motion plans. Consequently, this increases the efficiency with which robotic tasks can be performed within each sub-region. The proposed problem representation is based on grid cell decomposition and a potential field model that allows for the use of standard optimization techniques. A new algorithm, the AreaDecompose algorithm, is proposed to solve this problem. The algorithm includes a number of existing and new optimization techniques combined with two post-processing methods. The approach has been evaluated on a set of randomly generated polygons which are then divided using different criteria and the results have been compared with a state-of-the-art algorithm. Results show that the proposed algorithm can efficiently divide polygon regions maximizing compactness of the resulting partitions, where the sub-polygon regions are on average up to 73% more compact in comparison to existing techniques.
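The abstract does not say which compactness metric the paper uses. As one commonly used example, the sketch below computes the Polsby-Popper score, 4πA/P², which equals 1 for a circle and decreases for elongated shapes. The choice of this particular metric and the function names are assumptions for illustration.

```python
import math


def polygon_area(vertices):
    """Area of a simple polygon via the shoelace formula."""
    n = len(vertices)
    twice_area = sum(
        vertices[i][0] * vertices[(i + 1) % n][1]
        - vertices[(i + 1) % n][0] * vertices[i][1]
        for i in range(n)
    )
    return abs(twice_area) / 2.0


def polygon_perimeter(vertices):
    """Sum of edge lengths, closing the polygon back to the first vertex."""
    n = len(vertices)
    return sum(math.dist(vertices[i], vertices[(i + 1) % n]) for i in range(n))


def polsby_popper(vertices):
    """Compactness score in (0, 1]; higher means closer to a circle."""
    perimeter = polygon_perimeter(vertices)
    return 4.0 * math.pi * polygon_area(vertices) / perimeter**2
```

For instance, a unit square scores π/4, while a 4-by-1 rectangle of the same orientation scores lower, reflecting that long thin sub-regions are less compact.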

Hastily Formed Knowledge Networks and Distributed Situation Awareness for Collaborative Robotics

Mar 25, 2021

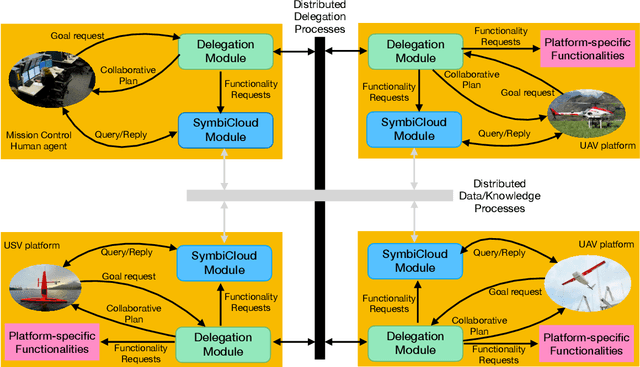

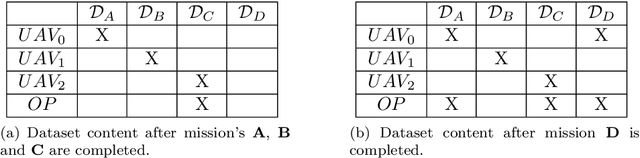

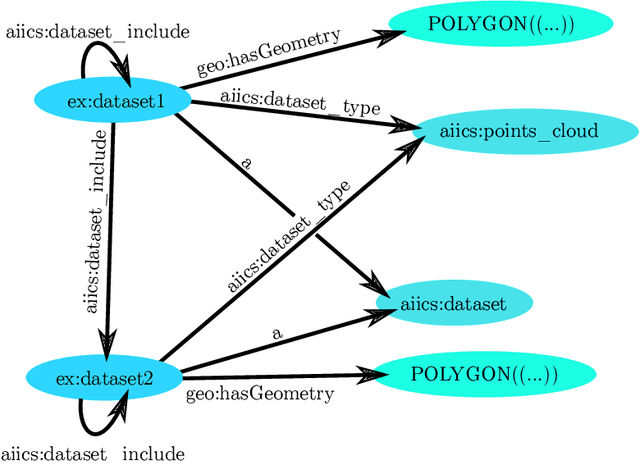

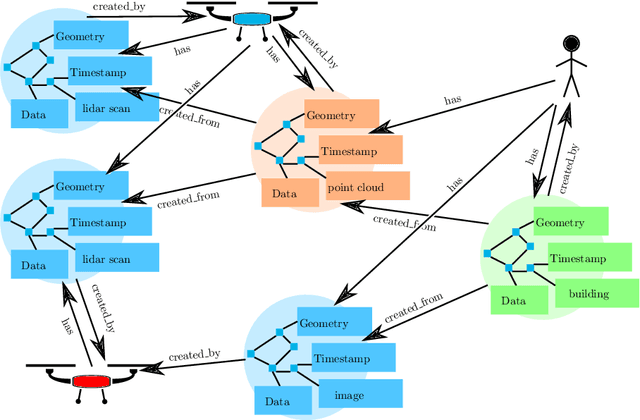

Abstract:In the context of collaborative robotics, distributed situation awareness is essential for supporting collective intelligence in teams of robots and human agents where it can be used for both individual and collective decision support. This is particularly important in applications pertaining to emergency rescue and crisis management. During operational missions, data and knowledge are gathered incrementally and in different ways by heterogeneous robots and humans. We describe this as the creation of *Hastily Formed Knowledge Networks* (HFKNs). The focus of this paper is the specification and prototyping of a general distributed system architecture that supports the creation of HFKNs by teams of robots and humans. The information collected ranges from low-level sensor data to high-level semantic knowledge, the latter represented in part as RDF Graphs. The framework includes a synchronization protocol and associated algorithms that allow for the automatic distribution and sharing of data and knowledge between agents. This is done through the distributed synchronization of RDF Graphs shared between agents. High-level semantic queries specified in SPARQL can be used by robots and humans alike to acquire both knowledge and data content from team members. The system is empirically validated and complexity results of the proposed algorithms are provided. Additionally, a field robotics case study is described, where a 3D mapping mission has been executed using several UAVs in a collaborative emergency rescue scenario while using the full HFKN Framework.
