Mariana Leite

Knowledge-driven Answer Generation for Conversational Search

Apr 14, 2021

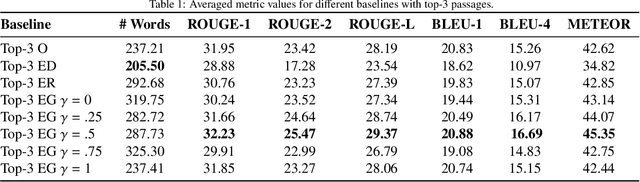

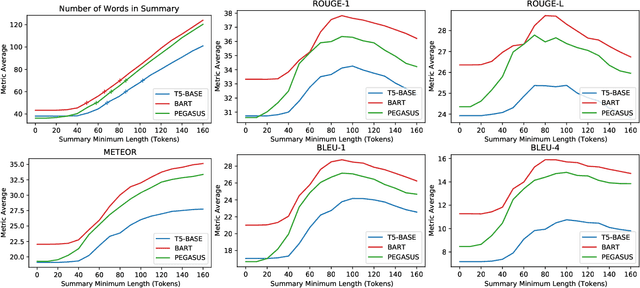

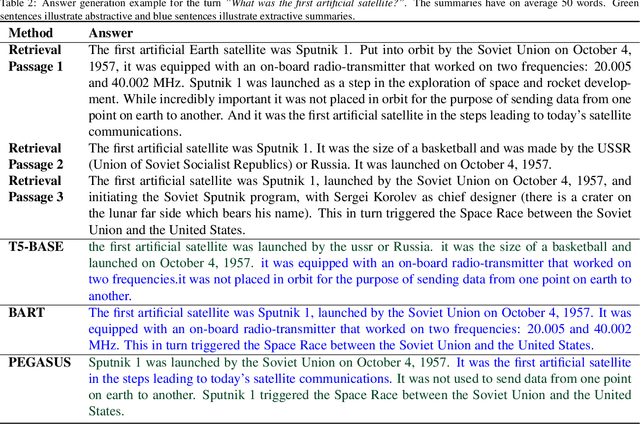

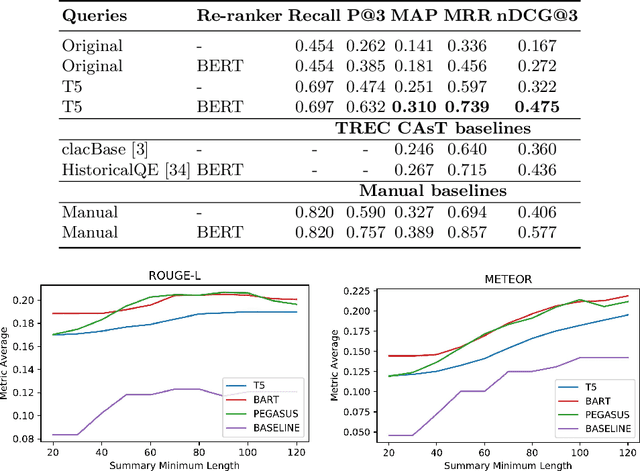

Abstract: The conversational search paradigm introduces a step change over the traditional search paradigm by allowing users to interact with search agents in a natural, multi-turn fashion. The conversation flows naturally and is usually centered around a target field of knowledge. In this work, we propose a knowledge-driven answer generation approach for open-domain conversational search, where a conversation-wide entity knowledge graph is used to bias search-answer generation. First, a conversation-specific knowledge graph is extracted from the top passages retrieved with a Transformer-based re-ranker. The entity knowledge graph is then used to bias a search-answer generator Transformer towards information-rich and concise answers. This conversation-specific bias is computed by identifying the most relevant passages according to the most salient entities of that particular conversation. Experiments show that the proposed approach successfully exploits entity knowledge along the conversation and outperforms a set of baselines on the search-answer generation task.
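As a rough illustration of the idea of selecting passages by entity salience, the sketch below scores each retrieved passage by how often its entities recur across the retrieved set. This is not the authors' method: the salience measure (passage frequency), the scoring rule (sum of salience), and the names `entity_salience` and `select_passages` are all assumptions made for illustration, and entity extraction is assumed to have happened already.

```python
from collections import Counter


def entity_salience(passage_entities):
    """Count in how many retrieved passages each entity appears.

    Assumption: passage frequency stands in for entity salience.
    """
    counts = Counter()
    for entities in passage_entities:
        counts.update(set(entities))
    return counts


def select_passages(passages, passage_entities, k=2):
    """Return the k passages whose entities are most salient overall.

    Hypothetical scoring rule: a passage's score is the sum of the
    salience of the distinct entities it mentions.
    """
    salience = entity_salience(passage_entities)
    scored = [
        (sum(salience[e] for e in set(entities)), index)
        for index, entities in enumerate(passage_entities)
    ]
    # Sort by descending score; ties keep the original retrieval order.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [passages[index] for _, index in scored[:k]]
```

The selected passages would then be handed to whatever answer generator is in use; how the bias is actually applied to the generator is not specified in the abstract and is left out here.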

Open-Domain Conversational Search Assistant with Transformers

Jan 20, 2021

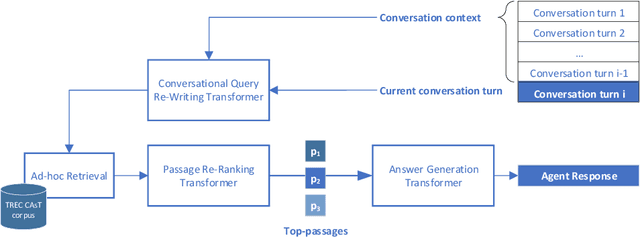

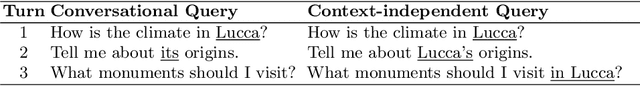

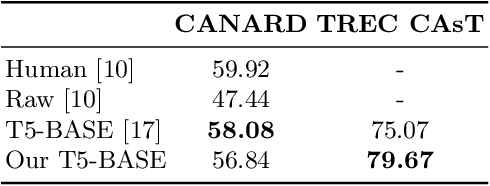

Abstract: Open-domain conversational search assistants aim to answer user questions about open topics in a conversational manner. In this paper, we show how the Transformer architecture achieves state-of-the-art results in key IR tasks, enabling the creation of conversational assistants that engage in open-domain conversational search with single yet informative answers. In particular, we propose an open-domain abstractive conversational search agent pipeline to address two major challenges: first, conversation context-aware search, and second, abstractive search-answer generation. To address the first challenge, the conversation context is modeled with a query rewriting method that unfolds the context of the conversation up to a specific moment to search for the correct answers. These answers are then passed to a Transformer-based re-ranker to further improve retrieval performance. The second challenge is tackled with recent abstractive Transformer architectures to generate a digest of the most relevant passages. Experiments show that Transformers deliver solid performance across all tasks in conversational search, outperforming the best TREC CAsT 2019 baseline.
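The stages named in the abstract (query rewriting, retrieval, re-ranking, abstractive summarization) can be sketched as a simple composition of functions. This is only a structural outline under stated assumptions: the function `answer_turn`, its parameters, and the `top_k` cut-off are hypothetical, and each stage is passed in as a plain callable rather than tied to any particular model or library.

```python
from typing import Callable, List, Sequence


def answer_turn(
    history: Sequence[str],
    query: str,
    rewrite: Callable[[Sequence[str], str], str],
    retrieve: Callable[[str], List[str]],
    rerank: Callable[[str, List[str]], List[str]],
    summarize: Callable[[List[str]], str],
    top_k: int = 3,
) -> str:
    """Answer one conversational turn (hypothetical pipeline skeleton)."""
    # 1. Rewrite the query so it carries the conversation context.
    rewritten = rewrite(history, query)
    # 2. Retrieve candidate passages for the rewritten query.
    candidates = retrieve(rewritten)
    # 3. Re-rank the candidates (a Transformer re-ranker in the paper).
    ranked = rerank(rewritten, candidates)
    # 4. Generate a single answer from the top-ranked passages.
    return summarize(ranked[:top_k])
```

Keeping the stages as injected callables makes it possible to swap any one of them, for example a different re-ranker, without touching the rest of the pipeline.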
