Maria Kolos

TRANSPR: Transparency Ray-Accumulating Neural 3D Scene Point Renderer

Sep 06, 2020

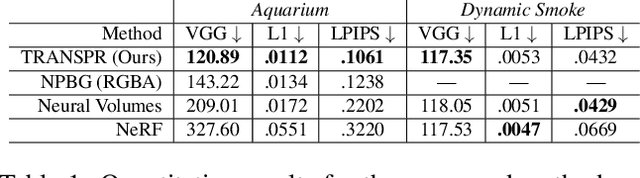

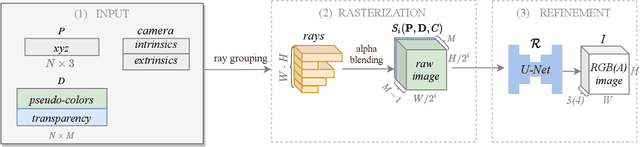

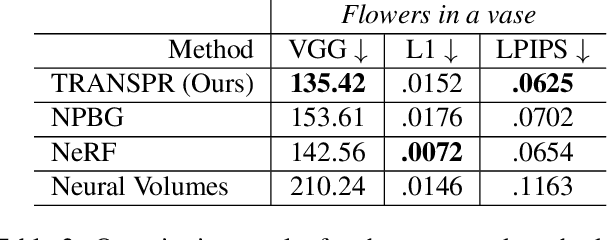

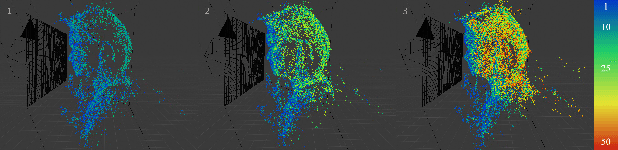

Abstract: We propose and evaluate a neural point-based graphics method that can model semi-transparent scene parts. Similarly to its predecessor pipeline, ours uses point clouds to model proxy geometry, and augments each point with a neural descriptor. Additionally, a learnable transparency value is introduced in our approach for each point. Our neural rendering procedure consists of two steps. Firstly, the point cloud is rasterized using ray grouping into a multi-channel image. This is followed by the neural rendering step that "translates" the rasterized image into an RGB output using a learnable convolutional network. New scenes can be modeled using gradient-based optimization of neural descriptors and of the rendering network. We show that novel views of semi-transparent point cloud scenes can be generated after training with our approach. Our experiments demonstrate the benefit of introducing semi-transparency into the neural point-based modeling for a range of scenes with semi-transparent parts.
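The abstract does not spell out how the points grouped along a ray are combined into one pixel of the multi-channel image. As a rough illustration only, the sketch below assumes standard front-to-back alpha compositing, which may differ from the rule the paper actually uses. The function name `composite_ray`, the `(depth, alpha, descriptor)` tuple layout, and the treatment of `alpha` as an opacity in [0, 1] are all hypothetical choices made for this example.

```python
from typing import List, Sequence, Tuple

# Hypothetical layout: (depth along the ray, per-point alpha, neural descriptor).
RayPoint = Tuple[float, float, Sequence[float]]


def composite_ray(points: Sequence[RayPoint]) -> List[float]:
    """Blend the descriptors of all points on one ray into one pixel value.

    Assumption: plain front-to-back alpha compositing, with alpha standing in
    for the learnable per-point transparency value mentioned in the abstract.
    """
    if not points:
        return []
    dim = len(points[0][2])
    pixel = [0.0] * dim
    transmittance = 1.0  # fraction of the ray not yet blocked by nearer points
    # Visit points from nearest to farthest.
    for _, alpha, descriptor in sorted(points, key=lambda p: p[0]):
        weight = transmittance * alpha
        for i in range(dim):
            pixel[i] += weight * descriptor[i]
        transmittance *= 1.0 - alpha
    return pixel


if __name__ == "__main__":
    # A half-transparent near point in front of an opaque far point.
    ray = [(2.0, 1.0, [0.0, 1.0]), (1.0, 0.5, [1.0, 0.0])]
    print(composite_ray(ray))
```

Under this assumption every operation is differentiable with respect to `alpha` and the descriptors, which is what would allow both to be fitted by the gradient-based optimization the abstract mentions.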

Procedural Synthesis of Remote Sensing Images for Robust Change Detection with Neural Networks

May 20, 2019

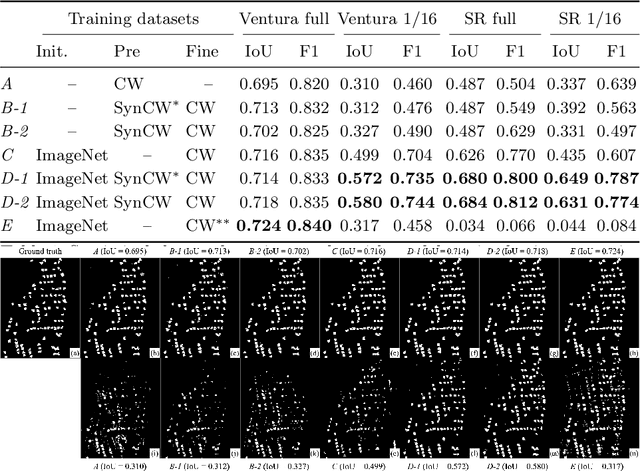

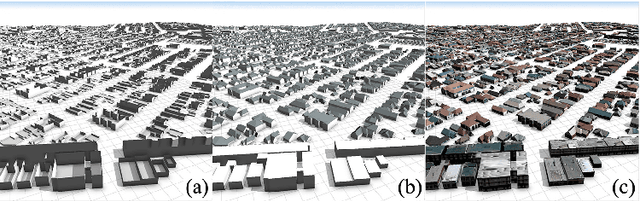

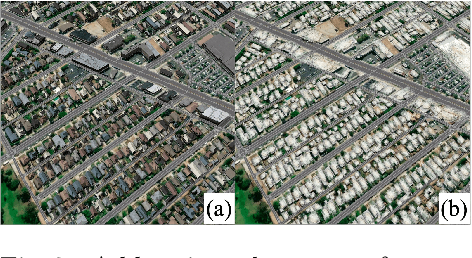

Abstract: Data-driven methods such as convolutional neural networks (CNNs) are known to deliver state-of-the-art performance on image recognition tasks when the training data are abundant. However, in some instances, such as change detection in remote sensing images, annotated data cannot be obtained in sufficient quantities. In this work, we propose a simple and efficient method for creating realistic targeted synthetic datasets in the remote sensing domain, leveraging the opportunities offered by game development engines. We provide a description of the pipeline for procedural geometry generation and rendering as well as an evaluation of the efficiency of produced datasets in a change detection scenario. Our evaluations demonstrate that our pipeline helps to improve the performance and convergence of deep learning models when the amount of real-world data is severely limited.

* 17 pages, 11 figures
