Maria Esther Vidal

Context-aware Entity Linking with Attentive Neural Networks on Wikidata Knowledge Graph

Dec 12, 2019

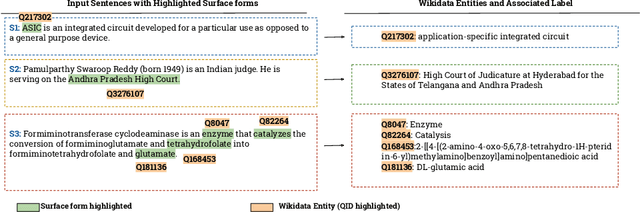

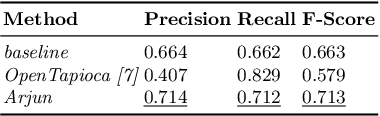

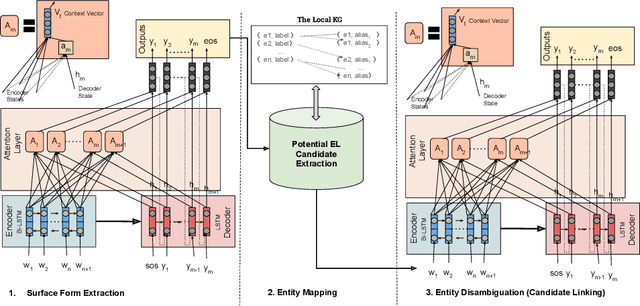

Abstract: Entity Linking (EL) is a long-standing research field with applications in use cases such as semantic search, text annotation, and question answering. Although effective and robust, current approaches are still limited to particular knowledge repositories (e.g. Wikipedia) or specific knowledge graphs (e.g. Freebase, DBpedia, and YAGO). Collaborative knowledge graphs such as Wikidata rely heavily on the crowd to author their information. Since the crowd is not bound to a standard protocol for assigning entity titles, the knowledge graph is populated by non-standard, noisy, long, and sometimes even awkward titles. Such long, implicit, and non-standard entity representations make it difficult for EL approaches to achieve high precision and recall. In this paper, we advance the state of the art by developing a context-aware attentive neural network approach for entity linking on Wikidata. Our approach exploits sufficient context from a knowledge graph as a source of background knowledge, which is then fed into the neural network. This approach shows merit in addressing the challenges associated with entity titles (multi-word, long, implicit, case-sensitive). Our experimental study shows an improvement of approximately 8% over the baseline approach, and our approach significantly outperforms an end-to-end approach for Wikidata entity linking. This work, the first of its kind, opens a new direction for the research community: developing context-aware EL approaches for collaborative knowledge graphs.
