Marco Mellia

The Sweet Danger of Sugar: Debunking Representation Learning for Encrypted Traffic Classification

Jul 22, 2025

Abstract:Recently we have witnessed the explosion of proposals that, inspired by Language Models like BERT, exploit Representation Learning models to create traffic representations. All of them promise astonishing performance in encrypted traffic classification (up to 98% accuracy). In this paper, with a networking expert mindset, we critically reassess their performance. Through extensive analysis, we demonstrate that the reported successes are heavily influenced by data preparation problems, which allow these models to find easy shortcuts - spurious correlation between features and labels - during fine-tuning that unrealistically boost their performance. When such shortcuts are not present - as in real scenarios - these models perform poorly. We also introduce Pcap-Encoder, an LM-based representation learning model that we specifically design to extract features from protocol headers. Pcap-Encoder appears to be the only model that provides an instrumental representation for traffic classification. Yet, its complexity questions its applicability in practical settings. Our findings reveal flaws in dataset preparation and model training, calling for a better and more conscious test design. We propose a correct evaluation methodology and stress the need for rigorous benchmarking.

AutoPenBench: Benchmarking Generative Agents for Penetration Testing

Oct 04, 2024

Abstract:Generative AI agents, software systems powered by Large Language Models (LLMs), are emerging as a promising approach to automate cybersecurity tasks. Among these tasks, penetration testing is a challenging field due to the task complexity and the diverse strategies to simulate cyber-attacks. Despite growing interest and initial studies in automating penetration testing with generative agents, there remains a significant gap in the form of a comprehensive and standard framework for their evaluation and development. This paper introduces AutoPenBench, an open benchmark for evaluating generative agents in automated penetration testing. We present a comprehensive framework that includes 33 tasks, each representing a vulnerable system that the agent has to attack. Tasks are of increasing difficulty levels, including in-vitro and real-world scenarios. We assess the agent performance with generic and specific milestones that allow us to compare results in a standardised manner and understand the limits of the agent under test. We show the benefits of AutoPenBench by testing two agent architectures: a fully autonomous and a semi-autonomous supporting human interaction. We compare their performance and limitations. For example, the fully autonomous agent performs unsatisfactorily, achieving a 21% Success Rate (SR) across the benchmark, solving 27% of the simple tasks and only one real-world task. In contrast, the assisted agent demonstrates substantial improvements, with 64% of SR. AutoPenBench also allows us to observe how different LLMs like GPT-4o or OpenAI o1 impact the ability of the agents to complete the tasks. We believe that our benchmark fills the gap with a standard and flexible framework to compare penetration testing agents on a common ground. We hope to extend AutoPenBench along with the research community by making it available under https://github.com/lucagioacchini/auto-pen-bench.

Generic Multi-modal Representation Learning for Network Traffic Analysis

May 04, 2024

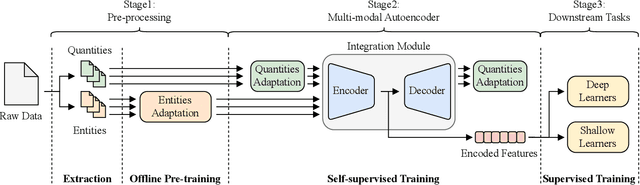

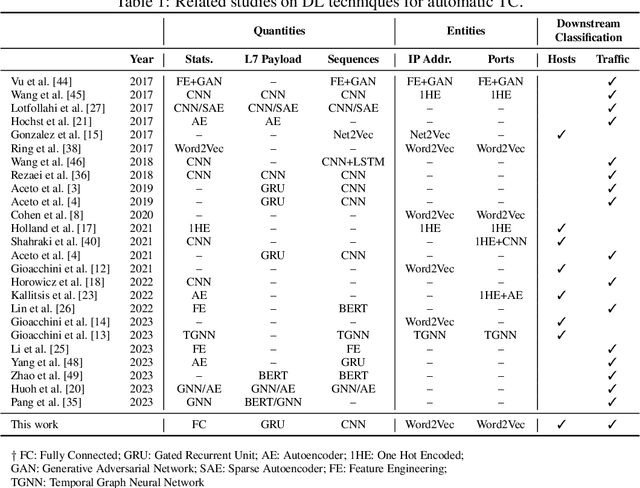

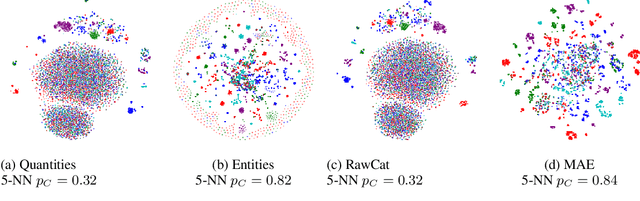

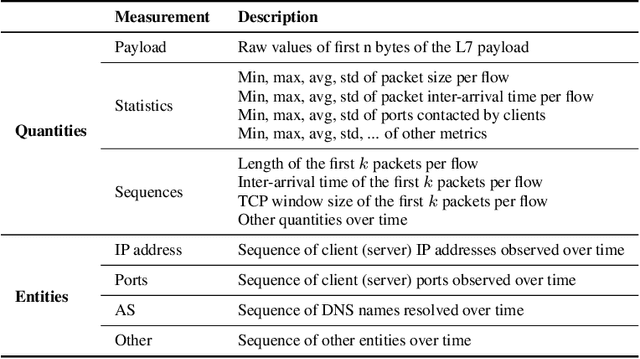

Abstract:Network traffic analysis is fundamental for network management, troubleshooting, and security. Tasks such as traffic classification, anomaly detection, and novelty discovery are fundamental for extracting operational information from network data and measurements. We witness the shift from deep packet inspection and basic machine learning to Deep Learning (DL) approaches where researchers define and test a custom DL architecture designed for each specific problem. We here advocate the need for a general DL architecture flexible enough to solve different traffic analysis tasks. We test this idea by proposing a DL architecture based on generic data adaptation modules, followed by an integration module that summarises the extracted information into a compact and rich intermediate representation (i.e. embeddings). The result is a flexible Multi-modal Autoencoder (MAE) pipeline that can solve different use cases. We demonstrate the architecture with traffic classification (TC) tasks since they allow us to quantitatively compare results with state-of-the-art solutions. However, we argue that the MAE architecture is generic and can be used to learn representations useful in multiple scenarios. On TC, the MAE performs on par or better than alternatives while avoiding cumbersome feature engineering, thus streamlining the adoption of DL solutions for traffic analysis.

Benchmarking Evolutionary Community Detection Algorithms in Dynamic Networks

Jan 11, 2024

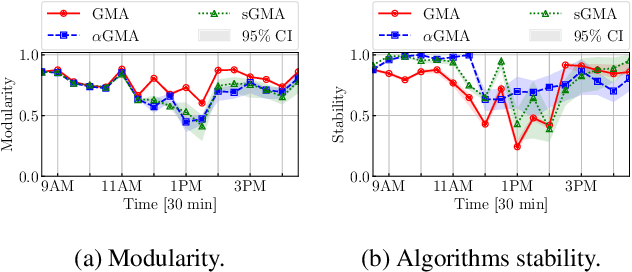

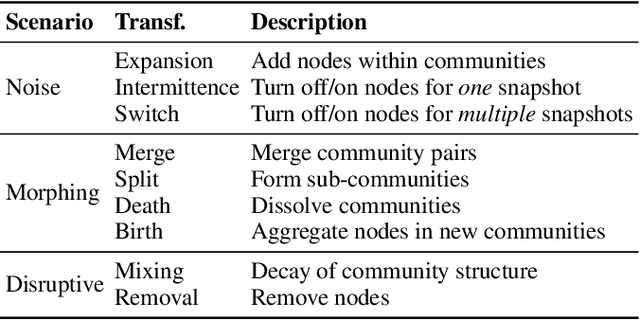

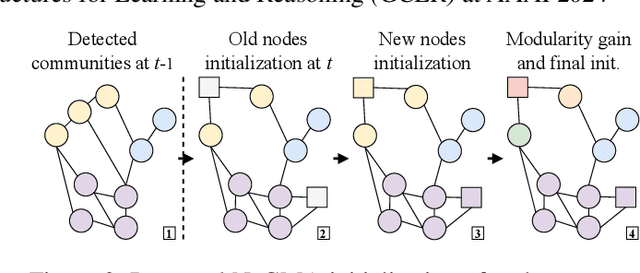

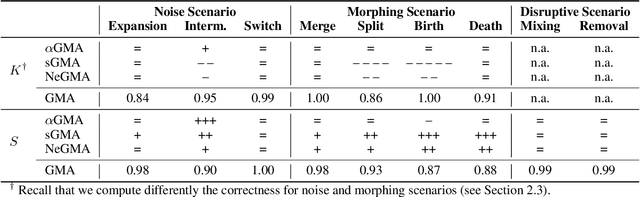

Abstract:In dynamic complex networks, entities interact and form network communities that evolve over time. Among the many static Community Detection (CD) solutions, the modularity-based Louvain, or Greedy Modularity Algorithm (GMA), is widely employed in real-world applications due to its intuitiveness and scalability. Nevertheless, addressing CD in dynamic graphs remains an open problem, since the evolution of the network connections may poison the identification of communities, which may be evolving at a slower pace. Hence, naively applying GMA to successive network snapshots may lead to temporal inconsistencies in the communities. Two evolutionary adaptations of GMA, sGMA and $\alpha$GMA, have been proposed to tackle this problem. Yet, evaluating the performance of these methods and understanding to which scenarios each one is better suited is challenging because of the lack of a comprehensive set of metrics and a consistent ground truth. To address these challenges, we propose (i) a benchmarking framework for evolutionary CD algorithms in dynamic networks and (ii) a generalised modularity-based approach (NeGMA). Our framework allows us to generate synthetic community-structured graphs and design evolving scenarios with nine basic graph transformations occurring at different rates. We evaluate performance through three metrics we define, i.e. Correctness, Delay, and Stability. Our findings reveal that $\alpha$GMA is well-suited for detecting intermittent transformations, but struggles with abrupt changes; sGMA achieves superior stability, but fails to detect emerging communities; and NeGMA appears a well-balanced solution, excelling in responsiveness and instantaneous transformations detection.

* Accepted at the 4th Workshop on Graphs and more Complex structures for Learning and Reasoning (GCLR) at AAAI 2024
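
For reference, the modularity that Louvain-style methods such as GMA greedily maximise is commonly written, for an undirected graph, as shown below. This is the standard textbook definition, not a formula quoted from the paper, and the symbols are assumptions introduced here for illustration: $A_{ij}$ is the adjacency (or edge-weight) matrix, $k_i$ is the degree of node $i$, $m$ is the total number of edges, $c_i$ is the community assigned to node $i$, and $\delta$ is the Kronecker delta.

$$
Q = \frac{1}{2m} \sum_{i,j} \left[ A_{ij} - \frac{k_i k_j}{2m} \right] \delta(c_i, c_j)
$$

Because $Q$ is computed independently on each snapshot, two consecutive snapshots can yield different partitions with similar $Q$, which is one way to read the temporal inconsistencies the abstract mentions.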

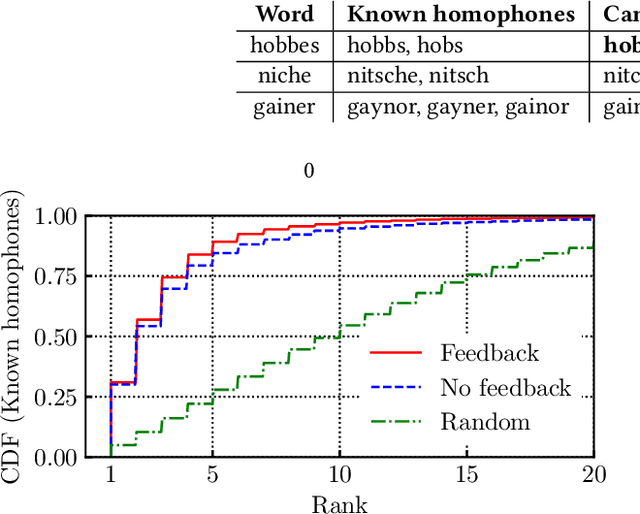

Sound-skwatter (Did You Mean: Sound-squatter?) AI-powered Generator for Phishing Prevention

Oct 10, 2023

Abstract:Sound-squatting is a phishing attack that tricks users into accessing malicious resources by exploiting similarities in the pronunciation of words. Proactive defense against sound-squatting candidates is complex, and existing solutions rely on manually curated lists of homophones. We here introduce Sound-skwatter, a multi-language AI-based system that generates sound-squatting candidates for proactive defense. Sound-skwatter relies on an innovative multi-modal combination of Transformer networks and acoustic models to learn sound similarities. We show that Sound-skwatter can automatically list known homophones and thousands of high-quality candidates. In addition, it covers cross-language sound-squatting, i.e., when the reader and the listener speak different languages, supporting any combination of languages. We apply Sound-skwatter to network-centric phishing via squatted domain names. We find that ~10% of the generated domains exist in the wild, the vast majority unknown to protection solutions. Next, we show attacks on the PyPI package manager, where ~17% of the popular packages have at least one existing candidate. We believe Sound-skwatter is a crucial asset to mitigate the sound-squatting phenomenon proactively on the Internet. To increase its impact, we publish an online demo and release our models and code as open source.

LogPrécis: Unleashing Language Models for Automated Shell Log Analysis

Jul 17, 2023

Abstract:The collection of security-related logs holds the key to understanding attack behaviors and diagnosing vulnerabilities. Still, their analysis remains a daunting challenge. Recently, Language Models (LMs) have demonstrated unmatched potential in understanding natural and programming languages. The question arises whether and how LMs could be also useful for security experts since their logs contain intrinsically confused and obfuscated information. In this paper, we systematically study how to benefit from the state-of-the-art in LM to automatically analyze text-like Unix shell attack logs. We present a thorough design methodology that leads to LogPrécis. It receives as input raw shell sessions and automatically identifies and assigns the attacker tactic to each portion of the session, i.e., unveiling the sequence of the attacker's goals. We demonstrate LogPrécis capability to support the analysis of two large datasets containing about 400,000 unique Unix shell attacks. LogPrécis reduces them into about 3,000 fingerprints, each grouping sessions with the same sequence of tactics. The abstraction it provides lets the analyst better understand attacks, identify fingerprints, detect novelty, link similar attacks, and track families and mutations. Overall, LogPrécis, released as open source, paves the way for better and more responsive defense against cyberattacks.
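
The fingerprinting idea described in the abstract, grouping sessions that share the same sequence of tactics, can be sketched in a few lines. This is a minimal illustration and not the LogPrécis implementation: the session identifiers, the tactic labels, and the choice to use the exact ordered tuple of tactics as the fingerprint are all assumptions made here.

```python
from collections import defaultdict


def fingerprint(tactics):
    """Return a hashable fingerprint for a session.

    Assumption: the fingerprint is simply the ordered tuple of tactic
    labels; the real system may normalise sequences differently.
    """
    return tuple(tactics)


def group_by_fingerprint(sessions):
    """Map each fingerprint to the list of session ids that share it."""
    groups = defaultdict(list)
    for session_id, tactics in sessions.items():
        groups[fingerprint(tactics)].append(session_id)
    return dict(groups)


# Hypothetical, hand-labelled sessions (labels are illustrative only).
sessions = {
    "s1": ["Discovery", "Execution"],
    "s2": ["Discovery", "Execution"],
    "s3": ["Discovery", "Persistence", "Execution"],
}

groups = group_by_fingerprint(sessions)
for fp, ids in groups.items():
    print(" > ".join(fp), "->", ids)
```

With this kind of grouping, an analyst inspects one representative per fingerprint rather than every raw session, which is the reduction the abstract reports (about 400,000 sessions into about 3,000 fingerprints).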

Recommendation Systems in Libraries: an Application with Heterogeneous Data Sources

Mar 21, 2023

Abstract:The Reading&Machine project exploits the support of digitalization to increase the attractiveness of libraries and improve the users' experience. The project implements an application that helps the users in their decision-making process, providing recommendation system (RecSys)-generated lists of books the users might be interested in, and showing them through an interactive Virtual Reality (VR)-based Graphical User Interface (GUI). In this paper, we focus on the design and testing of the recommendation system, employing data about all users' loans over the past 9 years from the network of libraries located in Turin, Italy. In addition, we use data collected by the Anobii online social community of readers, who share their feedback and additional information about books they read. Armed with this heterogeneous data, we build and evaluate Content Based (CB) and Collaborative Filtering (CF) approaches. Our results show that the CF outperforms the CB approach, improving by up to 47% the relevant recommendations provided to a reader. However, the performance of the CB approach is heavily dependent on the number of books the reader has already read, and it can work even better than CF for users with a large history. Finally, our evaluations highlight that the performances of both approaches are significantly improved if the system integrates and leverages the information from the Anobii dataset, which allows us to include more user readings (for CF) and richer book metadata (for CB).

Understanding mobility in networks: A node embedding approach

Nov 11, 2021

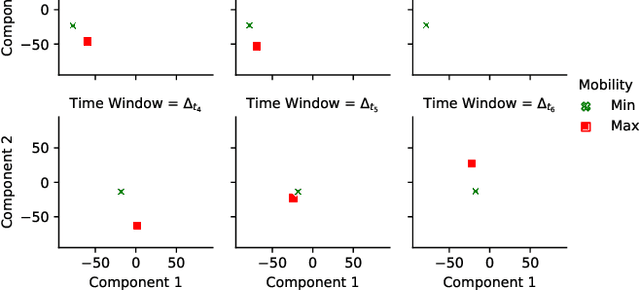

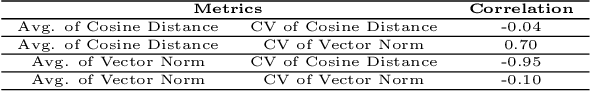

Abstract:Motivated by the growing number of mobile devices capable of connecting and exchanging messages, we propose a methodology aiming to model and analyze node mobility in networks. We note that many existing solutions in the literature rely on topological measurements calculated directly on the graph of node contacts, aiming to capture the notion of the node's importance in terms of connectivity and mobility patterns beneficial for prototyping, design, and deployment of mobile networks. However, each measure has its specificity and fails to generalize the node importance notions that ultimately change over time. Unlike previous approaches, our methodology is based on a node embedding method that models and unveils the nodes' importance in mobility and connectivity patterns while preserving their spatial and temporal characteristics. We focus on a case study based on a trace of group meetings. The results show that our methodology provides a rich representation for extracting different mobility and connectivity patterns, which can be helpful for various applications and services in mobile networks.

RL-IoT: Reinforcement Learning to Interact with IoT Devices

May 21, 2021

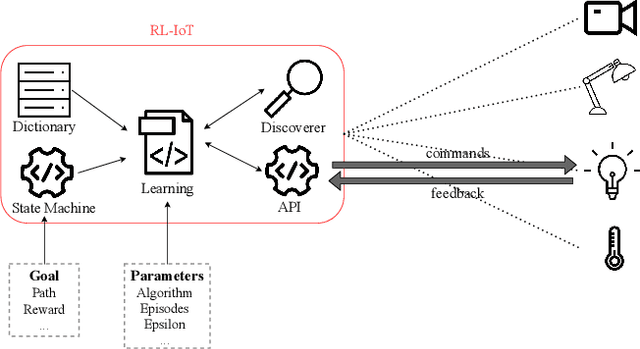

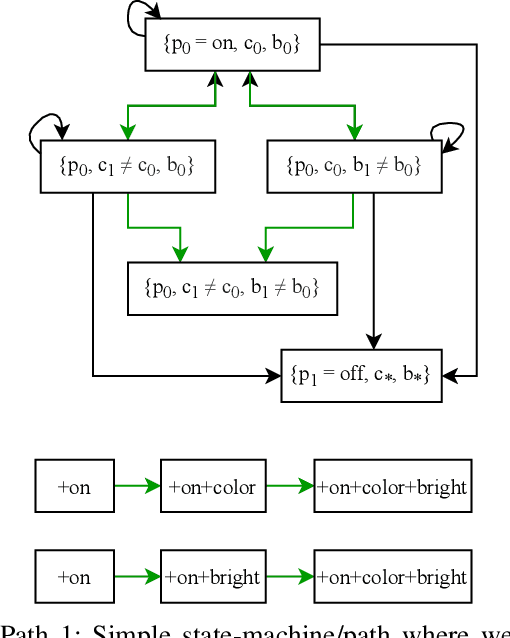

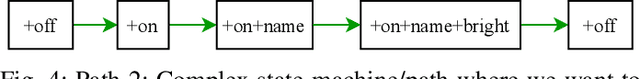

Abstract:Our lives are increasingly filled with Internet of Things (IoT) devices. These devices often rely on closed or poorly documented protocols, with unknown formats and semantics. Learning how to interact with such devices in an autonomous manner is key to interoperability and automatic verification of their capabilities. In this paper, we propose RL-IoT, a system that explores how to automatically interact with possibly unknown IoT devices. We leverage reinforcement learning (RL) to recover the semantics of protocol messages and to take control of the device to reach a given goal, while minimizing the number of interactions. We assume knowledge of only a database of possible IoT protocol messages, whose semantics are unknown. RL-IoT exchanges messages with the target IoT device, learning those commands that are useful to reach the given goal. Our results show that RL-IoT is able to solve both simple and complex tasks. With properly tuned parameters, RL-IoT learns how to perform actions with the target device, a Yeelight smart bulb in our case study, completing non-trivial patterns with as few as 400 interactions. RL-IoT paves the road for automatic interactions with poorly documented IoT protocols, thus enabling interoperable systems.

EXPLAIN-IT: Towards Explainable AI for Unsupervised Network Traffic Analysis

Mar 03, 2020

Abstract:The application of unsupervised learning approaches, and in particular of clustering techniques, represents a powerful exploration means for the analysis of network measurements. Discovering underlying data characteristics, grouping similar measurements together, and identifying possible patterns of interest are some of the applications which can be tackled through clustering. Being unsupervised, clustering does not always provide precise and clear insight into the produced output, especially when the input data structure and distribution are complex and difficult to grasp. In this paper we introduce EXPLAIN-IT, a methodology which deals with unlabeled data, creates meaningful clusters, and suggests an explanation of the clustering results to the end-user. EXPLAIN-IT relies on a novel explainable Artificial Intelligence (AI) approach, which makes it possible to understand the reasons leading to a particular decision of a supervised learning-based model, additionally extending its application to the unsupervised learning domain. We apply EXPLAIN-IT to the problem of YouTube video quality classification under encrypted traffic scenarios, showing promising results.
